AI Updates from the Past Week: Anthropic Launches Claude 4 Models, OpenAI Adds New Tools, and More

The past week brought a dense run of AI announcements. Anthropic, OpenAI, Mistral, Google, Microsoft, Shopify, HeyMarvin, and Zencoder all unveiled new models, tools, or features aimed at extending model capabilities and improving developer workflows. The updates range from new foundation models and specialized AI agents to developer platforms and research tools.

For developers and businesses, the common threads are better coding assistants, stronger reasoning, new ways of conducting research, and more automation of development tasks. Let’s look at each update in turn and what it means in practice.

Anthropic Introduces Powerful New Claude 4 Models

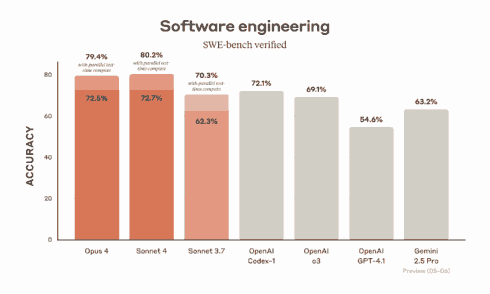

Anthropic has expanded its suite of large language models with the introduction of the Claude 4 series, featuring Claude Opus 4 and Claude Sonnet 4. These models represent a significant leap forward, particularly in handling complex, long-duration tasks. Both models are engineered to work continuously for several hours, demonstrating enhanced robustness and consistency over extended interactions.

Claude Opus 4 is positioned as the flagship model, specifically designed for demanding applications requiring advanced reasoning and problem-solving skills. Its strengths lie in tackling complex coding challenges and intricate logical puzzles. This makes it a valuable tool for developers, researchers, and professionals who need an AI capable of understanding nuanced instructions and executing multi-step processes accurately. The model’s proficiency in coding suggests potential applications in automated code generation, debugging assistance, and understanding large, complex codebases. Its ability to solve complex problems extends beyond coding, promising improvements in areas like scientific research analysis, financial modeling, and strategic decision-making. The focus on long-running tasks means users can rely on Opus 4 for sustained analytical work or creative projects without frequent restarts or context loss. This capability is crucial for multi-part queries, detailed report generation, or managing ongoing simulations, where maintaining conversational flow and retaining context over extended periods is essential for productivity and coherence.

Complementing Opus 4 is Claude Sonnet 4. This model builds upon the capabilities of its predecessor, Sonnet 3.7, aiming for a balance between high performance and computational efficiency. Sonnet models are typically designed for broader applications where speed and cost-effectiveness are key considerations alongside strong performance. Sonnet 4 likely offers improvements in areas such as text generation, summarization, and general conversational abilities while being more accessible for a wider range of use cases compared to the more specialized Opus 4. This balance makes Sonnet 4 suitable for tasks like customer support automation, content creation, educational tutoring, and general information retrieval, where timely and accurate responses are required without the need for the extreme reasoning depth of Opus 4. The enhancements in Sonnet 4 aim to make AI integration more practical and scalable for businesses of varying sizes and needs, providing a powerful yet efficient option for everyday AI tasks. The ability to process and generate text efficiently is paramount for applications that handle large volumes of data or interact with numerous users concurrently.

Beyond the core models, Anthropic also announced several beta features and general availability updates. A notable beta feature is extended thinking with tool use. This capability allows the models to break down complex problems into smaller, manageable steps and utilize external tools or APIs to gather information, perform calculations, or interact with other systems. This is a significant step towards enabling more sophisticated agentic AI, where models can autonomously perform multi-stage tasks by orchestrating various resources. The beta also includes the ability to use tools in parallel, allowing the AI to execute multiple tool calls simultaneously. This parallel processing can dramatically speed up workflows, enabling the AI to gather information from different sources or perform concurrent operations more efficiently. For instance, an AI agent could simultaneously search a knowledge base, query a database, and interact with a web service to answer a complex question, reducing latency and improving overall responsiveness.
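The latency benefit of parallel tool calls can be illustrated with a minimal sketch. The three tool functions below are hypothetical stand-ins (they only sleep to imitate network latency) and do not represent Anthropic’s API; the point is simply that running them concurrently takes about as long as the slowest call rather than the sum of all three.

```python
import asyncio
import time


# Hypothetical tools: each one sleeps to imitate a slow external call.
async def search_knowledge_base(query: str) -> str:
    await asyncio.sleep(0.2)
    return f"kb results for {query!r}"


async def query_database(query: str) -> str:
    await asyncio.sleep(0.2)
    return f"db rows for {query!r}"


async def call_web_service(query: str) -> str:
    await asyncio.sleep(0.2)
    return f"service reply for {query!r}"


async def run_tools_in_parallel(query: str) -> list:
    # gather() schedules all three coroutines at once and
    # returns their results in the order they were passed in.
    return await asyncio.gather(
        search_knowledge_base(query),
        query_database(query),
        call_web_service(query),
    )


def timed_parallel_run(query: str) -> tuple:
    """Run the three tools concurrently and report the wall-clock time."""
    start = time.perf_counter()
    results = asyncio.run(run_tools_in_parallel(query))
    return results, time.perf_counter() - start
```

Run one after another, the three calls would take roughly 0.6 seconds; run together, they finish in roughly the time of a single call.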

Another significant announcement is the general availability of Claude Code, Anthropic’s agentic coding tool, which had previously been offered as a research preview. Claude Code runs in the developer’s terminal, where it can read a codebase, edit files, and run commands on the developer’s behalf. The move to general availability signals that Anthropic considers the tool ready for everyday use, making it easier for developers to apply Claude’s coding ability in their daily workflows.

The Anthropic API also saw the addition of four new capabilities, further empowering developers integrating Claude into their applications:

- Code Execution Tool: This tool allows the AI to write and run code snippets directly within the API environment. This is invaluable for tasks requiring calculations, data manipulation, or testing code logic, enabling the AI to verify its own output or perform computations it cannot do intrinsically.

- MCP Connector: The Model Context Protocol (MCP) is an open protocol, originally introduced by Anthropic, for connecting AI models to external tools and data sources. With the MCP connector, Claude models can discover and call the tools exposed by a remote MCP server directly through the API, fostering greater interoperability between AI agents and applications.

- Files API: This API provides functionality for the model to interact with files. This is critical for tasks involving document analysis, processing large datasets, or generating reports, allowing the AI to ingest information from files and output results into new files, streamlining document-centric workflows.

- Prompt Caching: Developers can now cache a prompt prefix for up to one hour. The cached portion, such as a long system prompt or a large document, does not have to be reprocessed on each request, which improves performance and can reduce costs for repetitive tasks or multi-turn interactions within a short timeframe. This feature is particularly useful in applications where users refine their requests or ask follow-up questions based on previous AI responses, ensuring a faster and more seamless experience.
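To make the time-limited caching idea concrete, here is a toy sketch of a cache with a one-hour time-to-live. It is a conceptual illustration only: the `PrefixCache` class, its methods, and the injectable clock are hypothetical and say nothing about how Anthropic implements caching on its servers.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple

ONE_HOUR_SECONDS = 3600  # matches the "up to one hour" lifetime mentioned above


class PrefixCache:
    """Toy cache keyed by a hash of a shared prompt prefix (illustrative only)."""

    def __init__(
        self,
        ttl_seconds: float = ONE_HOUR_SECONDS,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._entries: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(prefix: str) -> str:
        return hashlib.sha256(prefix.encode("utf-8")).hexdigest()

    def put(self, prefix: str, processed: str) -> None:
        # Store the processed form together with the time it was cached.
        self._entries[self._key(prefix)] = (self.clock(), processed)

    def get(self, prefix: str) -> Optional[str]:
        key = self._key(prefix)
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, processed = entry
        if self.clock() - stored_at > self.ttl_seconds:
            # Entry has outlived its TTL: drop it and report a miss.
            del self._entries[key]
            return None
        return processed
```

A follow-up request that shares the same prefix within the hour gets a cache hit; after the hour has passed, the prefix has to be processed again.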

These updates collectively position Anthropic’s Claude models as increasingly powerful and versatile tools for developers and businesses. The introduction of Claude 4 models with enhanced capabilities for long-running and complex tasks, combined with improved tool use and developer-friendly API additions, underscores Anthropic’s commitment to advancing the state of the art in conversational and agentic AI. The differentiation between Opus 4 and Sonnet 4 provides options catering to different needs and resource constraints, expanding the accessibility and applicability of the Claude family of models across a broader range of industries and use cases. The beta features around extended thinking and parallel tool use hint at a future where AI agents can undertake even more sophisticated, autonomous tasks by intelligently interacting with their digital environment.

OpenAI Enhances Responses API with New Tools and Features

OpenAI has rolled out several significant enhancements to its Responses API, aiming to make its models more capable and versatile for developers. The updates introduce new tools and features that extend the API’s functionality and improve the performance and user experience of AI-powered applications.

Among the notable additions is remote MCP server support. Similar to Anthropic’s announcement, this signifies growing support for the Model Context Protocol across major AI platforms. By supporting remote MCP servers, OpenAI’s models can now potentially discover and interact with a wider range of external tools, data sources, and services that adhere to the MCP standard. This capability is crucial for building sophisticated AI agents that need to access and process information from various locations or execute actions in different systems, moving beyond relying solely on internally provided tools. The ability to connect to remote servers through MCP opens up possibilities for integrating OpenAI models with enterprise systems, cloud services, and specialized APIs, enabling the AI to become a more powerful orchestrator of digital tasks.
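For a sense of what travels between a client and an MCP server, the sketch below builds two request messages. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are methods defined by the protocol; the helper function, the `lookup_order` tool, and its arguments are hypothetical, and the transport layer (how the message reaches a remote server) is left out entirely.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC requests carry a unique id per request


def mcp_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = mcp_request("tools/list")

# Invoke one tool by name; "lookup_order" is a made-up example tool.
call_tool = mcp_request(
    "tools/call",
    {"name": "lookup_order", "arguments": {"order_id": "A-1001"}},
)

print(json.dumps(call_tool, indent=2))
```

Because every MCP server speaks this same message shape, a model platform that supports remote MCP servers can work with tools it has never seen before, which is what makes the protocol useful as a shared standard.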

The Responses API now also supports OpenAI’s latest image generation model, gpt-image-1, as a built-in tool. Integrating image generation directly into the Responses API allows developers to build applications that combine text-based interactions with visual outputs seamlessly. Potential use cases include generating illustrations for content, creating product mockups based on descriptions, designing marketing materials, or enabling creative tools that let users visualize ideas generated through text prompts.

Two specific tools have also been added to the API, enhancing the capabilities of OpenAI’s reasoning models:

- Code Interpreter Tool: This is a powerful addition that allows the AI model to execute code (typically Python) within a sandboxed environment. This tool is invaluable for tasks that require precise calculations, data analysis, statistical modeling, or working with structured data. The AI can generate code to perform these operations, run it, and then use the results to inform its response or decision-making process. This significantly boosts the model’s ability to handle quantitative tasks and verify logical steps, making it more reliable for applications requiring numerical accuracy or complex data manipulation. Developers can leverage this to build applications that perform detailed analysis, generate charts and graphs, or process complex datasets based on user queries.

- File Search Tool: The inclusion of a file search tool allows the AI to search through provided files or documents to retrieve relevant information. This is critical for applications that need to ground their responses in specific knowledge bases, user-provided documents, or proprietary data. Instead of relying solely on its pre-trained knowledge, the model can use the file search tool to find exact information, excerpts, or data points from designated files, ensuring more accurate, contextually relevant, and up-to-date responses. This is particularly useful for applications like document analysis, research assistants, question answering systems based on specific corpora, or processing user uploads.
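The retrieval step behind a file search tool can be approximated with a very small sketch. This version ranks files by simple keyword counts; it is an assumption-laden stand-in, since production systems typically rely on embeddings and vector indexes, and the function names and sample files here are invented for illustration.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    # Lowercase the text and keep only runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def search_files(files: Dict[str, str], query: str, top_k: int = 2) -> List[Tuple[str, int]]:
    """Rank files by how often the query terms occur in them."""
    query_terms = set(tokenize(query))
    scored = []
    for name, content in files.items():
        counts = Counter(tokenize(content))
        score = sum(counts[term] for term in query_terms)
        if score > 0:
            scored.append((name, score))
    # Highest score first; ties are broken alphabetically by file name.
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))[:top_k]


# Made-up documents standing in for user-provided files.
sample_files = {
    "refunds.txt": "Refunds are issued within 14 days. Refunds need a receipt.",
    "shipping.txt": "Shipping takes 3 days.",
    "faq.txt": "How do refunds work? See the refunds policy.",
}
matches = search_files(sample_files, "refunds policy")
```

The top-ranked passages would then be handed to the model as context, which is how the response ends up grounded in the supplied files rather than in pre-trained knowledge alone.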

OpenAI also announced features focused on improving the efficiency and usability of the API for complex workflows:

- Background Mode: This new mode allows the model to execute complex reasoning tasks asynchronously. For tasks that might take a significant amount of time, such as extensive analysis, complex problem-solving, or multi-step processes, the AI can perform the work in the background without blocking the user interface or requiring the client application to wait synchronously. This enhances the user experience by providing responsiveness while the AI works on resource-intensive tasks, with the results becoming available once the background process is complete. This is essential for building scalable and responsive applications where users shouldn’t have to wait for potentially long AI computations.

- Reasoning Summaries: This feature provides a concise natural-language summary of the reasoning the model carried out to arrive at its response, especially for complex tasks. Understanding the AI’s thought process can be crucial for debugging, verifying results, and building trust in AI outputs. Reasoning summaries can help developers and users understand why the AI made certain decisions, what information it used, and how it applied its tools. This can aid in identifying biases, errors, or limitations in the AI’s approach and in improving prompts or underlying data accordingly.

- Ability to Reuse Reasoning Items: This feature allows components or steps from a previous reasoning process to be reused across different API requests. For workflows that involve similar sub-problems or require applying the same analytical steps to different data points, reusing reasoning items can significantly reduce computational overhead and latency. This enhances efficiency and consistency, particularly in applications where users might refine their queries or apply the same logic to different inputs, avoiding the need for the AI to re-compute foundational reasoning steps each time.
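The submit-then-poll pattern that background mode enables can be sketched with a small in-memory simulation. Everything here is hypothetical: the `BackgroundJobs` class, the status names, and the `slow_sum` task are stand-ins chosen for illustration and do not reproduce OpenAI’s API.

```python
import threading
import time
import uuid


class BackgroundJobs:
    """In-memory stand-in for a service that runs long tasks asynchronously."""

    def __init__(self) -> None:
        self._jobs = {}
        self._lock = threading.Lock()

    def submit(self, task, *args) -> str:
        # Return an id immediately; the work happens on another thread.
        job_id = uuid.uuid4().hex
        with self._lock:
            self._jobs[job_id] = {"status": "queued", "result": None}
        threading.Thread(
            target=self._run, args=(job_id, task, args), daemon=True
        ).start()
        return job_id

    def _run(self, job_id: str, task, args) -> None:
        with self._lock:
            self._jobs[job_id]["status"] = "in_progress"
        result = task(*args)
        with self._lock:
            self._jobs[job_id].update(status="completed", result=result)

    def retrieve(self, job_id: str) -> dict:
        with self._lock:
            return dict(self._jobs[job_id])


def wait_for(jobs: BackgroundJobs, job_id: str,
             poll_interval: float = 0.05, timeout: float = 5.0):
    """Poll until the job completes, then return its result."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        job = jobs.retrieve(job_id)
        if job["status"] == "completed":
            return job["result"]
        time.sleep(poll_interval)
    raise TimeoutError(f"job {job_id} did not finish within {timeout} seconds")


def slow_sum(n: int) -> int:
    time.sleep(0.2)  # imitates a long-running reasoning task
    return sum(range(n))
```

The caller gets a job id back right away and stays free to do other work, checking in only when it needs the result.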

These updates to the OpenAI Responses API collectively enhance the platform’s power and flexibility. Support for remote MCP servers and specialized tools like Code Interpreter and File Search equips developers with the means to build more sophisticated and domain-specific AI applications. Features like background mode and reasoning reuse improve the efficiency and usability of the API, making it more practical for handling complex, real-world tasks at scale. These advancements demonstrate OpenAI’s continued focus on providing developers with the necessary building blocks to integrate cutting-edge AI capabilities into a wide range of products and services.

Mistral Unveils Devstral: A Compact LLM for Coding Agents

Mistral AI has introduced Devstral, a new open-source Large Language Model (LLM) specifically engineered for agentic coding tasks. Agentic AI refers to systems that can break down complex goals into smaller steps, plan actions, and execute them to achieve the desired outcome. Devstral focuses on this agentic approach within the software development domain.

Mistral highlights that while general-purpose LLMs are proficient at isolated coding tasks, such as generating standalone functions or assisting with code completion, they often struggle with the complexities of real-world software engineering problems. These challenges involve understanding code within the context of a large codebase, identifying dependencies between disparate software components, and pinpointing subtle bugs hidden within intricate functions. Devstral was explicitly trained to address these real-world scenarios, aiming to solve problems that mirror those found in actual GitHub issues.

A key characteristic of Devstral is its lightweight design. This model is intentionally smaller than many other state-of-the-art LLMs, which offers significant advantages. Notably, its size allows it to run on relatively accessible hardware, such as a single RTX 4090 graphics card or a Mac with 32GB of RAM. This capability makes Devstral particularly well-suited for local, on-device use. Running the model locally can offer benefits such as reduced latency, enhanced data privacy (as code doesn’t need to be sent to external servers), and the ability to work offline. For developers working with sensitive code or in environments with limited connectivity, a local coding AI agent can be immensely valuable.
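A rough back-of-the-envelope calculation shows why model size and numeric precision decide whether a model fits on a single consumer GPU. The sketch assumes about 24 billion parameters for Devstral (the size given on Mistral’s model card, not in the text above) and 24 GB of memory on an RTX 4090; it counts only the weights and ignores the KV cache and activations, so real requirements are somewhat higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights alone, in gigabytes."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


DEVSTRAL_PARAMS = 24e9  # assumption: roughly 24B parameters
RTX_4090_VRAM_GB = 24   # assumption: 24 GB of GPU memory

for bits in (16, 8, 4):
    needed = weight_memory_gb(DEVSTRAL_PARAMS, bits)
    leaves_headroom = needed < RTX_4090_VRAM_GB
    print(f"{bits}-bit weights: {needed:.0f} GB, leaves headroom on GPU: {leaves_headroom}")
```

Under these assumptions, 16-bit weights alone would need about 48 GB, while a 4-bit quantized version needs about 12 GB, which suggests that running on a single card in practice depends on quantization.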

Mistral presented results on SWE-Bench Verified, a challenging benchmark that evaluates models on real-world software engineering tasks drawn from GitHub. According to Mistral, Devstral outperformed two other prominent models: GPT-4.1-mini (the smaller, more efficient variant of OpenAI’s GPT-4.1) and Claude 3.5 Haiku (Anthropic’s fast and affordable model).

Here’s a comparison of their performance on the SWE-Bench Verified benchmark, as presented by Mistral:

| Model | SWE-Bench Verified Score |
|---|---|
| Devstral | Higher |
| GPT-4.1-mini | Lower than Devstral |
| Claude 3.5 Haiku | Lower than Devstral |

Note: Specific numerical scores were not provided in the source text, only the relative performance comparison where Devstral outperformed the other two models on this specific benchmark.

The fact that a lightweight, open-source model like Devstral can surpass larger or commercially focused models on a benchmark specifically designed for real-world coding challenges is noteworthy. It suggests that specialized training and architecture tailored for coding agents can yield highly effective results, even with a smaller model footprint. This could pave the way for more efficient and accessible AI tools for software development, potentially lowering the barrier to entry for developers wanting to leverage AI assistance and enabling integration into a wider range of development workflows and tools.

The focus on solving real GitHub issues implies that Devstral is trained on practical scenarios encountered by developers, such as fixing bugs, adding small features, refactoring code, and understanding error messages within the context of actual projects. This practical training dataset likely contributes to its effectiveness in handling the nuances and interconnectedness of real-world codebases, which is often a stumbling block for more general-purpose models.

Devstral’s release as an open-source model is also a significant contribution to the AI community. Open-source models foster transparency, allow for community-driven improvements, and can be freely integrated and adapted by developers and researchers. This could accelerate innovation in AI-powered coding tools and agents, enabling a wider range of applications and integrations within the software development ecosystem. By providing a powerful, yet accessible, model specifically designed for agentic coding, Mistral is empowering developers to build more sophisticated and context-aware AI assistants that can truly understand and interact with complex code projects. The ability to run locally on moderate hardware further enhances its appeal, making advanced AI coding assistance available to individual developers and smaller teams without requiring expensive cloud infrastructure.

Google’s AI Announcements from Google I/O

Google’s annual developer conference, Google I/O, is typically a major platform for showcasing the company’s advancements, and the latest event was no exception, featuring a wealth of updates across various AI initiatives. The announcements spanned new foundational models, specialized AI variants, and enhancements to developer tools, underscoring Google’s extensive investment in the field.

The event highlighted new models designed for diverse applications and hardware capabilities. Among these were:

- Gemini Diffusion: An experimental text model that applies diffusion, the technique best known from image synthesis, to text generation. Rather than producing output one token at a time, a diffusion model starts from noise and refines the whole output over several steps, an approach Google says allows for faster generation.

- Gemma 3n: This model is a multimodal AI, meaning it can process and understand information from multiple types of data, including audio, text, images, and video. What makes Gemma 3n particularly noteworthy is its design for running on personal devices such as phones, laptops, and tablets. Optimizing a multimodal model to run effectively on edge devices is a significant technical achievement, enabling richer, more interactive AI experiences directly on user hardware without constant reliance on cloud connectivity. This opens up possibilities for on-device processing of multimedia content, real-time multimodal interactions, and enhanced privacy by keeping data processing local.

Google also revealed specialized variants derived from its core Gemma model family:

- MedGemma: This variant is tailored specifically for applications in the healthcare domain. Medical AI requires deep understanding of complex terminology, diagnostic processes, and patient data. MedGemma’s specialization suggests training on vast amounts of medical literature, research papers, and clinical data to provide accurate and relevant responses for medical professionals, researchers, or healthcare applications. Potential use cases include assisting in diagnosing diseases, summarizing medical research, transcribing clinical notes, or providing information on treatments and conditions.

- SignGemma: Focused on accessibility, SignGemma is designed for translating sign language into spoken language text. This multimodal application likely involves processing video input of sign language gestures and movements and converting them into understandable written language. This technology has immense potential for improving communication accessibility for the deaf and hard-of-hearing community, enabling more seamless interactions in various settings, from daily conversations to educational and professional environments.

A major focus of the AI updates at Google I/O was developer productivity tooling built on the Gemini family of models. Gemini Code Assist was a highlight, with general availability announced both for individual developers and for teams using GitHub. The tool was initially introduced as a preview, and the move to general availability signals its readiness for broader adoption in professional workflows. Gemini Code Assist is powered by Gemini 2.5, which handles its code understanding and generation tasks.

The general availability release of Gemini Code Assist included several key updates and features:

- Chat History and Threads: This allows developers to maintain context across multiple interactions with the AI assistant, picking up conversations where they left off and keeping related queries organized within threads. This is crucial for complex coding tasks that require multiple steps and clarifications.

- Ability to Specify Rules: Developers can now define specific rules or constraints that the AI should adhere to for every generation within the chat session. This provides granular control over the AI’s behavior, ensuring that generated code or suggestions meet specific project standards, style guides, or architectural requirements.

- Custom Commands: This feature enables developers to create predefined commands or macros for common tasks they perform with the AI. Instead of typing out lengthy prompts, they can use a custom command to trigger a specific action or workflow, streamlining repetitive interactions and tailoring the tool to their individual needs.

- Review and Accept Code Suggestions in Parts: For code suggestions, developers gain the flexibility to review and accept changes incrementally. They can review suggestions piece by piece, across different files affected by the suggestion, or choose to accept all changes at once. This fine-grained control is essential for maintaining code quality and ensuring that automatically generated code integrates seamlessly with existing projects.

Beyond Code Assist, Google unveiled other innovative tools designed to streamline the development process:

- A reimagined version of Colab, Google’s cloud-based Python notebook environment, was announced. While specifics weren’t detailed in the source, a reimagined Colab likely includes deeper integration with Gemini models, enhanced AI-powered coding assistance, improved collaboration features, and potentially new ways to interact with data and models within the notebook environment.

- Stitch is a new tool designed to accelerate the process of creating user interfaces (UIs). It can generate UI components directly from wireframes or text prompts. This represents a significant step towards bridging the gap between design and implementation, allowing designers and developers to quickly prototype and build UI elements by simply sketching or describing the desired layout and components. This could drastically speed up the initial stages of application development.

- New features were added to Firebase Studio, Google’s cloud-based, AI-assisted environment for building applications. One specific new feature is the ability to translate Figma designs into applications. This integration connects the design workflow (in Figma) directly to the development process, automating the conversion of design assets and layouts into functional application code or components. It promises to reduce the manual effort required to translate design specifications into code, improve consistency between design and implementation, and accelerate the development cycle.

The updates from Google I/O paint a picture of a company deeply integrating AI across its ecosystem, from foundational research and specialized models to developer tooling and cloud platforms. The introduction of multimodal and edge-optimized models like Gemma 3n and specialized variants like MedGemma and SignGemma demonstrates a focus on expanding the types of problems AI can solve and making it more accessible. The significant enhancements to Gemini Code Assist and the introduction of tools like Stitch and the Figma-to-app translation capability highlight a clear strategy to use AI to boost developer productivity and streamline the software development lifecycle. These announcements collectively illustrate Google’s efforts to make AI a fundamental layer in its products and services, empowering developers to build more intelligent, accessible, and efficient applications.

Microsoft Build Highlights AI Agents and Development Platforms

Microsoft Build, the company’s developer conference, also featured substantial AI-related announcements, focusing heavily on AI agents, integrated development experiences, and foundational platforms for building and deploying AI solutions. The updates underscore Microsoft’s commitment to embedding AI, particularly through its partnership with OpenAI and its own research initiatives, into its core developer tools and cloud services.

A central theme was the introduction and enhancement of coding agents. A new coding agent was specifically announced as part of GitHub Copilot. This agent activates when a developer assigns it a GitHub issue or interacts with it via a prompt within VS Code, Microsoft’s popular code editor. This deep integration allows the Copilot agent to understand the context of specific issues or code sections and provide targeted assistance. The agent is designed to help developers with a range of common tasks encountered during the software development process, including:

- Adding features: Assisting in writing new code to implement requested functionalities.

- Fixing bugs: Identifying potential issues and suggesting or implementing code corrections.

- Extending tests: Generating or modifying test cases to improve code coverage and reliability.

- Refactoring code: Suggesting and applying improvements to code structure and readability without changing its functionality.

- Improving documentation: Helping generate or update documentation for code.

Crucially, GitHub confirmed that all pull requests generated by this agent require human approval before they are merged. This ensures that developers remain in control of the final code quality and can review AI suggestions for accuracy, security, and adherence to project standards, maintaining a collaborative loop between the AI assistant and the human developer. This approach leverages the AI’s ability to quickly draft solutions while retaining human oversight for critical decisions.

Microsoft also announced Windows AI Foundry, a platform designed to support the end-to-end AI developer lifecycle. This platform aims to provide developers with the tools and infrastructure necessary for training, fine-tuning, and deploying AI models. Windows AI Foundry supports various workflows, including managing and running open-source LLMs locally through Foundry Local. This capability empowers developers to experiment with and utilize models like those from Mistral or other open-source projects directly on their development machines or on local infrastructure. Furthermore, the platform allows developers to bring their own proprietary models, offering capabilities to convert, fine-tune, and deploy these models across different environments, including clients (e.g., Windows devices) and the cloud (e.g., Azure). This flexibility provides developers with a unified platform for managing their AI models regardless of their origin or intended deployment target, streamlining the process of taking models from research to production.

Another significant announcement was the expansion of support for the Model Context Protocol (MCP) across Microsoft’s portfolio. MCP, which facilitates communication and understanding between AI agents and their environment, is now supported across a wide range of Microsoft platforms and services, including:

- GitHub: Integration within GitHub allows AI agents to potentially understand the context of repositories, issues, and pull requests more deeply.

- Copilot Studio: This tool for building custom copilots can now leverage MCP to connect to various data sources and tools.

- Dynamics 365: Business applications within Dynamics 365 can utilize MCP to enable AI agents to access and interact with enterprise data and workflows.

- Azure AI Foundry: The AI development platform itself supports MCP, facilitating the integration of models and tools.

- Semantic Kernel: Microsoft’s open-source SDK for integrating LLMs with conventional programming languages includes MCP support, making it easier for developers to build AI-powered applications that interact with external resources.

- Windows 11: Integrating MCP into the operating system itself suggests a future where AI agents can understand and interact with applications and data directly on the user’s desktop environment in a standardized way.

Widespread support for MCP across these diverse platforms signifies a strategic move by Microsoft to build an interoperable ecosystem for AI agents. It aims to make it easier for developers to create agents that can seamlessly access information and perform actions across different Microsoft services and potentially external systems that also adopt the MCP standard.

Microsoft also introduced a new open-source project called NLWeb. This project is designed to help developers create conversational AI interfaces for their websites. NLWeb allows developers to connect conversational AI experiences to any underlying model or data source they choose, providing flexibility in how they build interactive website features. A key aspect of NLWeb is that its endpoints act as MCP servers. This means that conversational interfaces built using NLWeb can easily make their content and underlying data discoverable and accessible to other AI agents that support the Model Context Protocol. This could enable a future where AI agents browsing the web can understand and interact with websites built with NLWeb in a structured and intelligent manner, facilitating tasks like information extraction, completing forms, or performing actions on behalf of the user through conversational interfaces.

In summary, Microsoft’s announcements at Build reflect a strong push towards operationalizing AI, particularly through the lens of developer productivity and ecosystem integration. The new GitHub Copilot agent provides practical AI assistance directly within the developer workflow, with a focus on human oversight. Windows AI Foundry offers a comprehensive platform for managing the AI lifecycle, supporting both open-source and proprietary models across different deployment targets. The broad adoption of the Model Context Protocol across key Microsoft services aims to create a more connected and capable environment for AI agents. Finally, the NLWeb project contributes to making web content more accessible and interactive for AI agents, potentially reshaping how AI interacts with the vast information available on the internet. These initiatives collectively reinforce Microsoft’s position as a major provider of tools and platforms for AI development and deployment.

Shopify Releases New Developer Tools Focused on AI and Workflow

Shopify, the popular e-commerce platform, has announced new developer tools designed to streamline app development on its ecosystem and integrate AI capabilities into both developer workflows and the merchant experience. These updates focus on unifying development resources, enhancing productivity with AI, and enabling novel applications.

A central theme of Shopify’s announcement is the launch of a new unified developer platform. This platform integrates previously separate resources, specifically the Dev Dashboard and the CLI (Command Line Interface), into a more cohesive experience. A unified platform aims to simplify the developer journey by providing a single entry point for managing apps, accessing tools, and interacting with the Shopify ecosystem. This integration reduces friction, making it easier for developers to build, test, and deploy applications for Shopify stores.

A significant feature introduced within this platform is AI-powered code generation. Leveraging AI to assist with writing code can significantly accelerate the development process. For Shopify developers, this could mean AI suggesting code snippets for common tasks like interacting with the Shopify API, handling store data, or building UI components using Shopify’s design system. AI code generation can help reduce boilerplate code, minimize errors, and allow developers to focus on the unique logic of their applications, leading to faster development cycles and increased productivity.

Shopify has also made a significant change regarding developer testing environments. All developers can now create “dev stores” where they can preview their applications in a test environment; this capability was previously restricted to Plus plans, Shopify’s enterprise-level offering. By opening dev stores to everyone, Shopify is widening access to crucial testing infrastructure. Dev stores provide a safe sandbox in which to install and test apps, simulate merchant workflows, and confirm compatibility and functionality before deploying to live stores. This enables more thorough testing and should improve the quality and reliability of apps available on the Shopify App Store.

Other new features announced by Shopify further enhance the platform’s development capabilities:

- Declarative Custom Data Definitions: This feature likely provides a more structured and easier way for developers to define custom data fields and types within the Shopify environment. Using a declarative approach means developers describe the desired structure and constraints of their data, and the platform handles the underlying implementation. This simplifies data modeling for apps that need to store specific information not covered by Shopify’s standard data types, making it more straightforward to extend the platform’s data capabilities.

- Unified Polaris UI Toolkit: Polaris is Shopify’s design system and UI framework. A unified toolkit suggests improvements or consolidation of the tools and resources developers use to build user interfaces that align with Shopify’s look and feel. This consistency is vital for creating apps that feel native and intuitive to merchants using the Shopify admin interface. A unified toolkit likely provides improved components, guidelines, and development resources, making it easier and faster to build high-quality, on-brand UIs for apps.

- Storefront MCP: Similar to the announcements from Microsoft and Anthropic, Shopify is also embracing the Model Context Protocol (MCP). The introduction of Storefront MCP specifically allows developers to build AI agents that can act as shopping assistants for stores. By exposing storefront data and functionality through an MCP-compliant interface, developers can create AI agents that can understand product catalogs, answer customer questions about products and orders, provide recommendations, and potentially even guide users through the checkout process conversationally. This opens up exciting possibilities for enhancing the customer shopping experience through AI-powered virtual assistants directly integrated into the online store.
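The declarative style mentioned in the list above can be illustrated with a small, generic sketch. It does not show Shopify's actual configuration format. The `FieldDefinition` class, the `WARRANTY_DEFINITION` schema, and the `validate` helper are all hypothetical names. The point is only that the developer describes the shape of the data once, and generic code enforces it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDefinition:
    """One declared field: its name, expected Python type, and whether it is required."""
    name: str
    kind: type
    required: bool = True


# Declarative description: what the data looks like, not how to check it.
WARRANTY_DEFINITION = [
    FieldDefinition("product_id", str),
    FieldDefinition("warranty_months", int),
    FieldDefinition("notes", str, required=False),
]


def validate(record: dict, definition: list[FieldDefinition]) -> list[str]:
    """Return a list of problems found in the record; an empty list means it is valid."""
    problems = []
    for field in definition:
        if field.name not in record:
            if field.required:
                problems.append(f"missing required field: {field.name}")
            continue
        if not isinstance(record[field.name], field.kind):
            problems.append(f"{field.name} should be {field.kind.__name__}")
    return problems
```

Adding a new field then means adding one line to the definition rather than writing new validation logic, which is the main appeal of the declarative approach.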

These updates from Shopify demonstrate a clear focus on empowering its developer ecosystem and integrating AI into core platform capabilities. By unifying development tools, offering AI-powered assistance, providing essential testing environments to all developers, and enhancing data and UI tools, Shopify is making it easier to build high-quality applications. The introduction of Storefront MCP is particularly forward-looking, enabling the development of next-generation AI-powered shopping experiences that can potentially transform customer interaction on e-commerce sites. These developments aim to strengthen the Shopify platform by fostering innovation within its developer community and enabling new features that benefit both merchants and their customers.

HeyMarvin Launches AI Moderated Interviewer for Scaled Research

HeyMarvin, a company focused on user research tools, has introduced an innovative application of AI: the AI Moderated Interviewer. This tool is designed to conduct moderated user interviews with a potentially very large number of participants, effectively eliminating the need for a human facilitator in each individual session.

Traditionally, moderated user interviews involve a researcher or moderator interacting directly with a single participant or a small group, asking questions, observing behavior, and guiding the conversation based on responses. While invaluable for gathering rich, qualitative data, this method is time-consuming and expensive, severely limiting the number of interviews that can be conducted within practical constraints.

HeyMarvin’s AI Moderated Interviewer aims to break through this limitation by using AI to automate the moderation process. The tool can conduct interviews with potentially thousands of participants simultaneously or sequentially, a scale previously unimaginable for moderated, qualitative research. Beyond simply conducting the interviews, the AI is also capable of analyzing the interview responses to surface insights and trends. This includes identifying common themes, extracting key opinions, and summarizing findings across the large participant pool.

Prayag Narula, CEO and co-founder of HeyMarvin, emphasized the power of this tool:

“What makes it so powerful is that it enables free-flowing, qualitative, engaging conversations — but on demand and at scale. We’re talking hundreds, even thousands of people, something that was previously only seen at large scale using a small army of volunteers in moments like presidential elections. Now, even a small team can have that same in-depth dialogue with their customers. It’s not just a better survey, and it’s not replacing traditional user interviews. It’s a whole new way of doing research that simply didn’t exist a few months ago.”

Narula’s statement highlights the core value proposition: combining the depth of qualitative interviewing (free-flowing, engaging conversations) with the breadth and efficiency of quantitative methods (on-demand, large-scale participation). This tool is positioned not as a replacement for traditional methods but as a complementary, entirely new approach to research. Surveys can gather quantitative data at scale but lack the depth of conversation. Traditional moderated interviews provide depth but are limited in scale. The AI Moderated Interviewer attempts to capture the best of both worlds.

Potential applications for such a tool are vast, particularly for product teams, marketers, and researchers. Companies could use it to:

- Gather feedback on product prototypes from a large user base quickly.

- Understand customer needs and pain points at scale.

- Conduct market research on preferences and behaviors across diverse demographics.

- Test messaging and positioning with target audiences.

- Collect feedback after product launches to identify common issues or successes.

The AI’s ability to analyze responses automatically is a crucial component. Manually analyzing hundreds or thousands of interview transcripts would be impractical for most teams. The AI’s capability to identify trends and insights makes the large-scale data collection actionable, transforming raw conversational data into summarized, thematic findings that researchers can use for decision-making.

The AI Moderated Interviewer represents a significant innovation in the field of market and user research, leveraging advancements in conversational AI and natural language processing. It offers the potential to gather rich qualitative insights from participant pools of a size usually reachable only through quantitative methods like surveys. By making large-scale qualitative feedback practical and cost-effective, it could enable faster iteration cycles for product development, deeper understanding of customer segments, and more data-driven decision-making across organizations. The tool’s design to facilitate “free-flowing, qualitative, engaging conversations” suggests that the AI is capable of adapting to participant responses, asking follow-up questions, and maintaining a natural dialogue flow, moving beyond simple scripted questionnaires.

Zencoder Announces Autonomous Zen Agents for CI/CD

Zencoder has introduced a novel application of AI agents within the software development pipeline with its Autonomous Zen Agents for CI/CD. These agents are designed to run directly within Continuous Integration/Continuous Deployment (CI/CD) pipelines, automating various tasks traditionally performed by developers or requiring manual intervention.

Zen Agents can be triggered by webhooks from issue trackers (such as Jira or GitHub Issues) or by code events (such as pull requests or commits). This event-driven architecture lets the agents react automatically to activity in the development workflow and fit into existing CI/CD setups.
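As a rough illustration of this event-driven pattern, the sketch below routes incoming webhook events to agent tasks through a lookup table. It is not Zencoder's implementation. The event names, handler functions, and payload shape are hypothetical and stand in for whatever a real issue tracker or code host would send.

```python
from typing import Callable


# Hypothetical handlers: in a real pipeline each would start an agent job.
def resolve_issue(payload: dict) -> str:
    return f"agent queued to resolve issue {payload['id']}"


def review_pull_request(payload: dict) -> str:
    return f"agent queued to review pull request {payload['id']}"


# Hypothetical event names mapped to the task each one should trigger.
HANDLERS: dict[str, Callable[[dict], str]] = {
    "issue.created": resolve_issue,
    "pull_request.opened": review_pull_request,
}


def handle_webhook(event_type: str, payload: dict) -> str:
    """Dispatch a webhook event to its handler, ignoring events with no handler."""
    handler = HANDLERS.get(event_type)
    if handler is None:
        return f"ignored event {event_type}"
    return handler(payload)
```

Keeping the routing in a table means new triggers can be added without changing the dispatch logic, and unrecognized events are ignored rather than causing failures.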

Once triggered, these autonomous agents are equipped to perform a range of tasks aimed at accelerating the software development lifecycle and improving code quality and efficiency. Their capabilities include:

- Resolve Issues: Automatically identifying and addressing certain types of issues logged in tracking systems.

- Implement Fixes: Generating and applying code changes to fix detected bugs or problems.

- Improve Code Quality: Analyzing code for stylistic issues, potential bugs, or anti-patterns and suggesting or implementing improvements.

- Generate and Run Tests: Automatically creating new test cases for new code or features and executing existing test suites to ensure code correctness and prevent regressions.

- Create Documentation: Generating or updating documentation based on code changes or new features.

By automating these tasks directly within the CI/CD pipeline, the Zen Agents can potentially reduce the manual effort required from developers for routine or well-defined coding and maintenance activities. This automation can lead to faster feedback loops, quicker issue resolution, and more consistent application of coding standards and testing practices.

Andrew Filev, CEO and founder of Zencoder, articulated the vision behind this offering:

“The next evolution in AI-powered development isn’t just about coding faster – it’s about accelerating the whole software development lifecycle, where coding is just one step. By bringing autonomous agents into CI/CD pipelines, we’re enabling teams to eliminate routine work and accelerate hand-offs, maintaining momentum 24/7, while keeping humans in control of what ultimately ships.”

Filev’s statement emphasizes that the impact of AI in software development extends beyond just assisting with writing code. The full lifecycle, from planning and coding to testing, deployment, and maintenance, can benefit from automation. By placing autonomous agents within the CI/CD pipeline, Zencoder aims to automate the transitions and tasks between these stages. The ability of the agents to work 24/7 ensures continuous momentum in the development process, reducing idle time and accelerating the path from code commit to deployable artifact.

The crucial aspect highlighted by Filev is “keeping humans in control of what ultimately ships.” While the agents are autonomous in performing tasks, the implication is that there are mechanisms in place for human review and approval, similar to the GitHub Copilot agent’s pull request requirement. This ensures that critical decisions and final code changes are vetted by human developers, maintaining quality, security, and alignment with project goals. The agents handle the grunt work and routine tasks, freeing up developers to focus on more complex problem-solving, architectural design, and strategic work.

Integrating autonomous agents into CI/CD pipelines represents a significant step towards fully automating aspects of the software development process. It moves AI assistance from being a developer-invoked tool to an always-on participant in the build, test, and deployment workflow. This could lead to substantial improvements in development efficiency, code quality, and release speed, transforming the CI/CD pipeline from a pure automation engine for builds and deployments into an intelligent system capable of autonomously improving and maintaining the codebase itself. The ability to react automatically to events and execute tasks like bug fixes or test generation holds the promise of a more proactive and self-healing development process, where many issues can be addressed automatically before they even reach a human developer’s attention.

Conclusion

The past week has brought forth a flurry of significant announcements across the artificial intelligence landscape, showcasing rapid advancements and diverse applications of AI technology. From powerful new models like Anthropic’s Claude 4 series, designed for complex tasks and long-running interactions, to specialized, lightweight models like Mistral’s Devstral, tailored for agentic coding tasks, the capabilities of AI continue to expand.

Major platforms are deeply integrating AI into developer workflows, exemplified by OpenAI’s enhanced Responses API with new tools like Code Interpreter and File Search, and Microsoft’s expansive support for the Model Context Protocol across its vast ecosystem of tools and services, including GitHub Copilot and Windows AI Foundry. These developments highlight a growing trend towards creating interoperable environments where AI agents can seamlessly access information and perform actions across different platforms and applications.

Innovations in developer tools, such as Google’s Gemini Code Assist enhancements and new tools like Stitch and Figma-to-app translation, demonstrate a strong focus on leveraging AI to boost programmer productivity and streamline the software development lifecycle. Similarly, Shopify’s unified developer platform and AI-powered code generation aim to make app development faster and more intuitive on its e-commerce platform, while introducing next-generation AI shopping assistants via Storefront MCP.

Beyond development, AI is also transforming research methodologies, as seen with HeyMarvin’s AI Moderated Interviewer, which offers a groundbreaking approach to conducting large-scale qualitative user interviews, blending the depth of conversation with the efficiency of automation. And within the development pipeline itself, Zencoder’s Autonomous Zen Agents for CI/CD are pushing the boundaries by embedding AI agents directly into automated workflows to handle routine tasks, accelerate handoffs, and maintain development momentum around the clock.

Collectively, these updates paint a picture of an AI field that is not only pushing the limits of model capabilities but also actively working to make these powerful technologies more accessible, integrated, and practical for developers, businesses, and researchers. The focus on specialized models, agentic capabilities, developer tools, and workflow automation indicates a maturing ecosystem where AI is moving beyond impressive demos to become an integral part of how we build software, conduct research, and interact with technology. As these technologies continue to evolve, we can anticipate even more innovative applications and significant shifts in how we work and live.
