Upskilling Software Engineering Teams in the Age of AI

Software engineering is undergoing a significant transformation, driven largely by rapid advances in, and widespread adoption of, Artificial Intelligence (AI). Generative AI (GenAI) tools and AI code assistants in particular are reshaping developers' daily work and the skills they need to succeed. For organizations that rely on their engineering talent for competitive advantage, this shift brings both challenges and opportunities.

A substantial number of software engineering managers anticipate that AI will fundamentally alter the necessary skill sets for engineers within the next few years. This expectation highlights a critical need for proactive strategies to navigate the evolving technical demands. Learning to code is no longer sufficient; the focus is now on learning to code effectively within an AI-augmented environment.

One significant challenge is the “skills-experience paradox.” As junior engineers increasingly rely on AI tools for tasks like code completion or debugging, they may bypass the foundational programming concepts and problem-solving techniques that these tools abstract away. Premature or excessive reliance can hinder the deep understanding and critical thinking that complex software development requires. Engineering leaders must therefore refine their approach to talent development, cultivating learning environments that balance the integration of AI tools with robust acquisition of fundamental skills.

Navigating the Skills-Experience Paradox

The core difficulty lies in the tension between leveraging AI tools for efficiency and ensuring that engineers build a strong, independent understanding of underlying principles. AI assistants can quickly generate code snippets or suggest solutions, which saves experienced developers considerable time. For newcomers, however, accepting AI output without understanding the ‘why’ or ‘how’ can create knowledge gaps. They might become proficient at using the tool but lack the foundational expertise to:

- Debug complex issues the AI cannot resolve.

- Optimize code beyond the AI’s suggestions.

- Understand architectural trade-offs.

- Adapt to new problems or technologies where AI assistance is less effective.

- Critically evaluate the quality and security of AI-generated code.
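A small, hypothetical example shows why foundational knowledge matters when optimizing beyond an AI suggestion. Both functions below remove duplicates from a list while preserving order. The first is the kind of plausible, working code an assistant might produce: it checks membership in a list, which costs O(n) per check and O(n²) overall. An engineer who knows that set membership is O(1) on average can replace it with the second version, which runs in linear time. The function names are illustrative and the linear version assumes the items are hashable.

```python
from typing import Hashable, Iterable, List, Set, TypeVar

T = TypeVar("T", bound=Hashable)


def dedupe_quadratic(items: Iterable[T]) -> List[T]:
    """Plausible first draft: correct, but `x not in result` scans a list, so O(n^2)."""
    result: List[T] = []
    for x in items:
        if x not in result:  # linear scan of `result` on every iteration
            result.append(x)
    return result


def dedupe_linear(items: Iterable[T]) -> List[T]:
    """Same output, but tracks seen items in a set: O(n) on average.

    Assumes every item is hashable.
    """
    seen: Set[T] = set()
    result: List[T] = []
    for x in items:
        if x not in seen:  # average O(1) hash lookup
            seen.add(x)
            result.append(x)
    return result


if __name__ == "__main__":
    data = [3, 1, 3, 2, 1]
    print(dedupe_quadratic(data))  # [3, 1, 2]
    print(dedupe_linear(data))     # [3, 1, 2]
```

Both versions return the same result; the difference only shows up as inputs grow. In Python 3.7 and later, `list(dict.fromkeys(items))` is an equivalent idiom, because dicts preserve insertion order.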

This paradox necessitates a deliberate strategy. Relying solely on traditional methods ignores the reality of modern tooling, while fully embracing AI without structured learning risks creating engineers who are dependent rather than augmented. The goal is to produce engineers who can collaborate effectively with AI, leveraging its strengths while applying their own deep understanding and critical judgment.

Building Proficiency Through Structured Learning Pathways

The most effective way for software engineering leaders to address the challenges of AI integration is to implement structured learning pathways. These pathways should blend the development of fundamental engineering skills with a staged, deliberate integration of AI tools. The underlying principle is to show engineers that a solid grasp of core concepts lets them use AI tools more powerfully and intelligently.

These pathways should incorporate a multifaceted approach to learning, recognizing that skill development occurs in various contexts:

- Formal Learning: This involves delivering targeted educational content. Microlearning modules focused on specific fundamental concepts (e.g., data structures, algorithms, design patterns) and specific AI tool capabilities (e.g., prompt engineering techniques, understanding AI model limitations) can be delivered precisely when developers need that knowledge for their tasks. Online courses, interactive tutorials, and focused workshops also fall under this category. The key is timing: providing relevant information just in time for practical application.

- Social Learning: Creating environments where engineers can learn from each other is crucial. Communities of practice dedicated to AI-augmented development can serve as forums for sharing experiences, discussing effective prompting strategies, building libraries of useful prompts for common tasks, and analyzing how senior developers make decisions when working with AI. Pair programming, in which one developer writes the code while the other reviews it and suggests direction (potentially with AI assistance), offers direct, real-time learning opportunities. Mentorship programs, especially those that explicitly address the nuances of AI usage, can guide junior engineers in discerning when and how to leverage, or even override, AI suggestions.

- On-the-Job Learning: This is where theory meets practice. Hands-on projects should be structured to introduce AI tools progressively. Initially, AI might be used for basic, low-risk tasks such as simple code completion, boilerplate generation, or syntax correction. As developers gain proficiency and foundational understanding, they can tackle more complex scenarios, such as using AI to generate alternative architectural patterns, refactor large codebases, or generate automated test scripts. Embedding learning activities directly in actual development work, rather than in isolated training events, ensures immediate relevance and application. For instance, if a junior developer is building a new feature involving database interactions, their learning pathway would include formal modules on database principles and security, pair programming sessions demonstrating AI-assisted query optimization, and hands-on experience applying these skills to deliver the feature.

Strategically embedding these learning activities within the context of ongoing development projects is paramount. Learning should not be a separate, abstract track but rather an integral part of the development lifecycle. Mapping specific learning modules and social interactions to the technical challenges and deliverables junior developers face ensures that knowledge is acquired and immediately applied, reinforcing understanding.

Structured learning pathways must also clearly define appropriate use cases and limitations for AI tools at different stages of an engineer’s career. A junior engineer might use AI primarily for understanding existing code or suggesting basic implementations, while a senior engineer might use it for exploring complex architectural options or identifying potential edge cases. This staged access helps prevent over-reliance and encourages the development of independent problem-solving skills before AI becomes a default crutch.
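The staged use cases described above could be written down as a shared, versionable policy that teams review alongside their learning pathways. The following is a minimal, hypothetical sketch: the stage names, the use-case strings, and the intermediate `MID` stage are assumptions for illustration (the junior and senior entries paraphrase the examples in this section), and a table like this documents expectations rather than enforcing them in any tool.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Stage(Enum):
    JUNIOR = "junior"
    MID = "mid"        # hypothetical intermediate stage, not named in the text
    SENIOR = "senior"


# Hypothetical policy: each stage inherits every use case of the stage below it.
_JUNIOR_USES: FrozenSet[str] = frozenset({
    "explain existing code",
    "suggest basic implementation",
    "code completion",
    "boilerplate generation",
    "syntax correction",
})
_MID_USES: FrozenSet[str] = _JUNIOR_USES | {"generate test scripts", "assisted refactoring"}
_SENIOR_USES: FrozenSet[str] = _MID_USES | {"explore architecture options", "identify edge cases"}

POLICY: Dict[Stage, FrozenSet[str]] = {
    Stage.JUNIOR: _JUNIOR_USES,
    Stage.MID: _MID_USES,
    Stage.SENIOR: _SENIOR_USES,
}


def is_permitted(stage: Stage, use_case: str) -> bool:
    """Return True if the (hypothetical) policy allows this AI use case at this stage."""
    return use_case in POLICY[stage]


if __name__ == "__main__":
    print(is_permitted(Stage.JUNIOR, "boilerplate generation"))       # True
    print(is_permitted(Stage.JUNIOR, "explore architecture options"))  # False
```

Building each stage as a superset of the previous one is a design choice: it keeps the table short and makes access strictly cumulative, so moving up a stage never removes a use case an engineer already relies on.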

The AI Proficiency Divide: Bridging the Gap

Uneven adoption of AI tools, and uneven skill in using them, is creating a noticeable divide within engineering teams. Some developers quickly integrate AI into their workflow, achieving significant productivity boosts and finding new ways to solve problems. Others struggle to move beyond basic interactions or misuse the tools, seeing minimal gains or even introducing errors. This disparity shows that simply providing access to AI tools is not enough; teams need guidance and support to become truly proficient users.

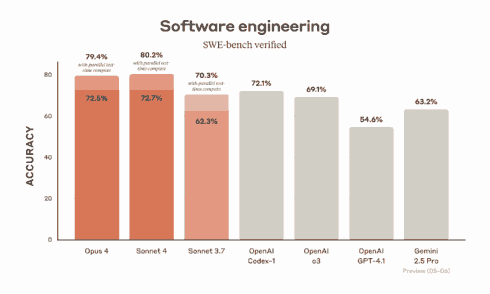

Data suggests this gap is real. While AI tools are widely available, satisfaction with the user experience and reported productivity gains are not uniform.

According to recent surveys:

- Only 29% of software development team members report being satisfied or extremely satisfied with their overall experience using AI tools.

- 13% of software development team members report no productivity gains from using AI tools.

- 39% report only modest productivity increases (up to 10%).

These figures underscore that a significant portion of the engineering workforce is not yet fully realizing the potential benefits of AI. The discrepancy between tool availability and effective use points to a need to shift focus from merely measuring tool adoption to measuring learning outcomes and skill development enabled by the tools.

Perspectives on AI tools also differ between experienced and early-career developers. Experienced developers often prioritize AI’s role as a productivity enhancer, viewing it as a way to accelerate existing tasks. Early-career developers, by contrast, are more likely to see AI as a learning aid that can help them understand complex systems or explore new concepts. Leaders can capitalize on this difference, framing AI not just as a way to do things faster but as a powerful resource for deepening understanding and accelerating skill acquisition.

To effectively bridge the proficiency divide and leverage AI as a learning aid:

- Explicitly frame AI tools as accelerators for learning during new developer onboarding and ongoing training. Highlight specific, practical use cases where AI can illuminate complex systems, explain intricate code logic, or help developers understand architectural patterns by generating and analyzing examples. This reframes AI from a simple task-automation tool into a learning partner.

- Implement regular feedback mechanisms and open dialogues. Create channels for developers to share their experiences with AI tools, discuss challenges they face, report instances where AI assistance was particularly helpful or unhelpful, and suggest improvements for tool integration or training. Understanding how developers are actually using AI, their frustrations, and their successes is key to providing targeted support and refining the learning pathways.

- Foster a culture of experimentation and sharing. Encourage developers to try different AI tools and techniques for various tasks. Provide safe environments (like internal hackathons or dedicated exploration time) for them to experiment without the pressure of immediate project deliverables. Establish internal forums or regular “AI brown bag” sessions where developers can showcase how they are effectively using AI and share lessons learned.

- Provide training on how to use AI effectively. This goes beyond tool features and delves into prompt engineering, understanding the probabilistic nature of AI outputs, recognizing potential biases or inaccuracies, and developing the critical judgment needed to evaluate and refine AI-generated code or suggestions. Teach developers how to formulate clear prompts, ask follow-up questions, and iteratively refine AI interactions to achieve desired outcomes.

By focusing on learning and effective integration rather than just raw usage statistics, organizations can help a larger portion of their workforce become proficient AI collaborators, unlocking the full potential of these tools for increased innovation and efficiency.

Evolving Skills Assessment for AI-Augmented Development

The integration of AI into software engineering necessitates a fundamental rethinking of how engineering talent is assessed, both for hiring and for ongoing development. If AI tools can automate or significantly assist with tasks like writing boilerplate code, recalling syntax, or even generating initial implementations, then traditional assessments focused solely on these capabilities become less effective predictors of success. Organizations must evolve their assessment strategies to measure the skills that remain critical and become even more valuable in an AI-augmented world.

The focus needs to shift towards higher-level cognitive skills that AI currently complements rather than replaces. These include:

- Problem Solving: Can the candidate break down complex problems, identify core issues, and devise logical strategies, even when leveraging AI for parts of the solution?

- Critical Thinking: Can they evaluate the quality, efficiency, security, and appropriateness of AI-generated code or suggestions? Can they identify potential flaws or edge cases the AI might miss?

- System Design: Can they understand how different components of a system interact, design robust and scalable architectures, and make informed decisions about technology choices, leveraging AI to explore design options or analyze trade-offs?

- Understanding Fundamental Principles: Do they grasp the underlying computer science concepts (e.g., data structures, algorithms, operating systems, networking) that inform effective software development, allowing them to reason about code produced by AI or identify non-obvious bugs?

- Collaboration: Can they work effectively with AI tools as partners and collaborate with human team members, communicating clearly about their process, including how they used AI?

- Adaptability and Continuous Learning: Given the rapid pace of change, can they quickly learn new technologies, tools, and paradigms, including new AI capabilities?

- Ethical Considerations: Do they understand the ethical implications of using AI in development, such as data privacy, bias in models, and the responsible use of AI-generated content?
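To illustrate the "non-obvious bugs" that an understanding of fundamentals helps catch, consider this hypothetical helper. It reads naturally and passes a single-call test, but Python evaluates a default argument value once, when the `def` statement runs, so a mutable default such as a list is shared by every call that omits the argument. The function names are invented for this sketch.

```python
from typing import List, Optional


def add_tag_buggy(tag: str, tags: List[str] = []) -> List[str]:
    # Looks correct, but the default list is created once and reused by every
    # call that omits `tags`, so state leaks between unrelated calls.
    tags.append(tag)
    return tags


def add_tag_fixed(tag: str, tags: Optional[List[str]] = None) -> List[str]:
    # Idiomatic fix: use None as a sentinel and create a fresh list per call.
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


if __name__ == "__main__":
    print(add_tag_buggy("a"))  # ['a']
    print(add_tag_buggy("b"))  # ['a', 'b']  <- 'a' leaked from the first call
    print(add_tag_fixed("a"))  # ['a']
    print(add_tag_fixed("b"))  # ['b']
```

An engineer who understands when default values are evaluated can explain the failure rather than just patch it, which is exactly the kind of reasoning these assessment criteria aim to surface.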

Assessment methods should evolve to reflect these priorities. Rather than relying only on timed, from-scratch coding exercises (a task AI can heavily assist with), interviews and assessments should incorporate elements that reveal deeper thinking:

- Code Critique and Refactoring: Provide candidates with a piece of code (potentially AI-generated or containing subtle issues) and ask them to critique it, identify problems, explain why they are issues, and suggest improvements or alternative implementations. This tests understanding and critical evaluation skills.

- Design Discussions: Present a system requirement or problem and engage the candidate in a discussion about potential architectures, trade-offs, and implementation strategies. They could be asked how they might use AI tools in this design process.

- AI-Assisted Problem Solving: Provide a coding problem and allow candidates to use AI tools during the assessment. The evaluation then shifts from just the final code to the process they used. How did they interact with the AI? What prompts did they use? How did they verify or modify the AI’s output? This reveals their ability to effectively collaborate with AI and apply critical thinking.

- Explaining Complex Concepts: Ask candidates to explain complex technical concepts or the reasoning behind a particular design choice. This demonstrates their depth of understanding, not just their ability to recall information or generate code.
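As an illustration of the code critique format, a hypothetical exercise might hand the candidate the first function below and ask what is wrong with it. It works for ordinary input, which is why such a flaw can slip through when AI output is accepted without review, but it builds SQL by string interpolation and is open to SQL injection. A strong answer identifies the flaw, explains how it can be exploited, and proposes the parameterized version. The schema, function names, and data are invented for this sketch; it uses Python's standard-library `sqlite3` module so it runs without setup.

```python
import sqlite3
from typing import List, Tuple


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> List[Tuple[int, str]]:
    # Plausible draft: correct for ordinary names, but user input is pasted into
    # the SQL text, so a crafted value can change the query (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}' ORDER BY id"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Tuple[int, str]]:
    # Fix a candidate should propose: a parameterized query, so the driver
    # passes `name` as data and it is never parsed as SQL.
    query = "SELECT id, name FROM users WHERE name = ? ORDER BY id"
    return conn.execute(query, (name,)).fetchall()


def make_demo_db() -> sqlite3.Connection:
    # In-memory database with two rows, used only for this exercise.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # [(1, 'alice'), (2, 'bob')]: every row leaks
    print(find_user_safe(conn, attack))    # []: the input is treated as a literal name
```

The one-line fix is only part of what the exercise measures; how the candidate explains the risk and verifies the change says more about their judgment than the patch itself.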

This evolved assessment approach is not limited to hiring. It is equally vital for the ongoing development of existing team members. Implementing continuous learning frameworks that link initial assessments to personalized development paths ensures that engineers are constantly growing the skills most relevant to the AI-augmented future. Regular performance reviews should include discussions about how engineers are leveraging AI, the challenges they face, and the skills they need to develop further (both fundamental and AI-related).

By focusing assessment on critical thinking, problem-solving, system design, and the ability to effectively partner with AI, organizations can build and nurture engineering teams that are not only productive today but are also equipped to thrive in the rapidly evolving technological landscape of tomorrow. The future belongs to engineers who can think critically, learn continuously, and collaborate intelligently with artificial intelligence.
