A New Paradigm: How a Deep Learning Alternative Can Unlock Real-World Capabilities for AI Agents

A pioneering machine learning methodology is emerging, one that seeks to mirror the sophisticated mechanisms by which the human brain constructs models and acquires knowledge about its environment. This innovative approach has demonstrated remarkable efficiency in mastering a collection of simplified video games, signaling a potential shift in the landscape of artificial intelligence development.

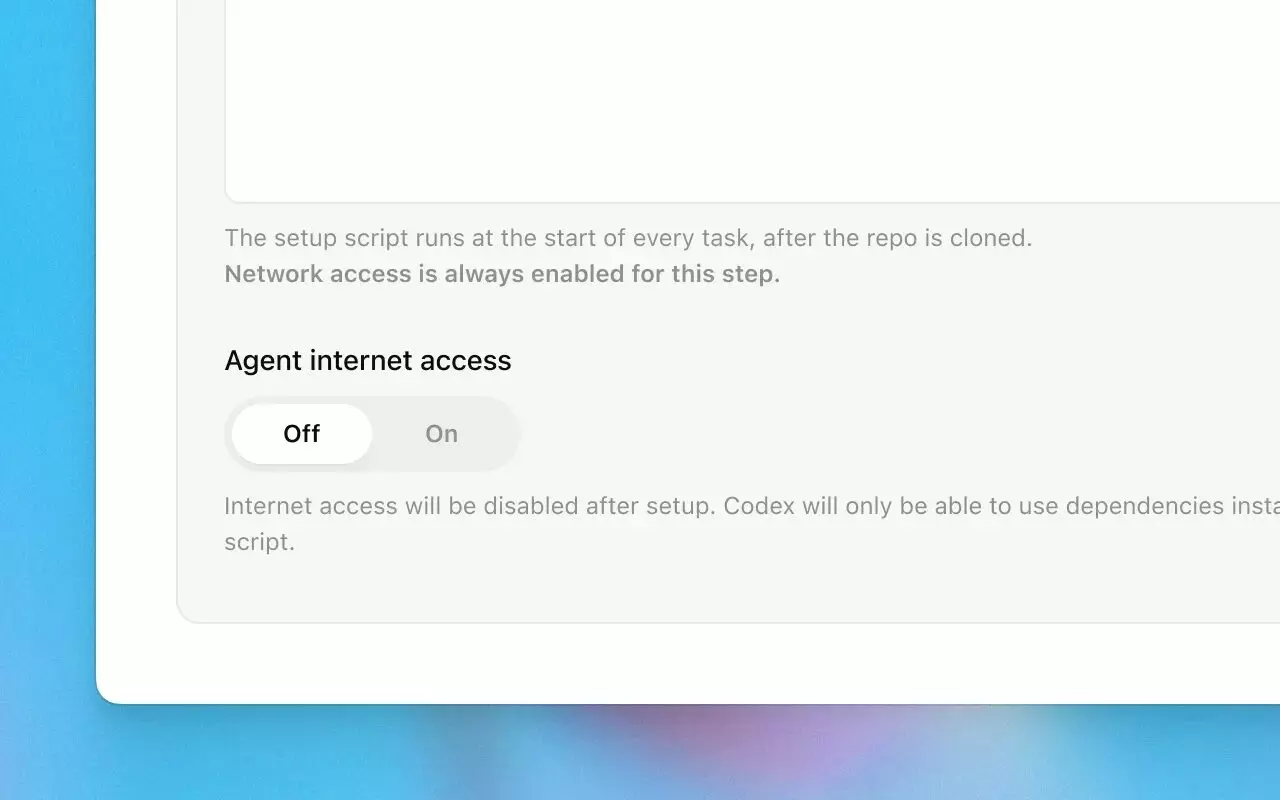

This novel system, known as Axiom, presents a distinct alternative to the artificial neural networks that currently dominate modern AI. Axiom, developed by the software firm Verses AI, is engineered with an inherent understanding of how objects within its simulated world interact physically. It then employs an algorithm to predict how the game environment will respond to its actions. This predictive model is continuously refined based on the system’s observations, a process referred to as active inference.

The conceptual underpinnings of this methodology are deeply rooted in the free energy principle. This principle is a theoretical framework that endeavors to elucidate the nature of intelligence by integrating concepts drawn from mathematics, physics, information theory, and biological processes. The free energy principle was initially formulated by Karl Friston, a highly respected neuroscientist who now serves as the chief scientist at the “cognitive computing” company, Verses.
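For readers who want the principle in symbols, the quantity usually called variational free energy can be written as below. This is the standard textbook form, not a formula taken from the Axiom work itself, and the notation is an assumption made for illustration: $o$ denotes observations, $s$ hidden states of the world, $p(o, s)$ the agent's generative model, and $q(s)$ its current approximate belief about the hidden states.

$$
F[q] \;=\; \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] \;=\; D_{\mathrm{KL}}\big[\,q(s)\,\|\,p(s \mid o)\,\big] \;-\; \ln p(o)
$$

Because the KL divergence is never negative, $F$ is an upper bound on the "surprise" $-\ln p(o)$. Lowering $F$ therefore either improves the belief $q(s)$ or makes the observations less surprising under the model.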

Dr. Friston emphasizes that this paradigm could be particularly crucial for the creation of sophisticated AI agents capable of navigating and interacting with the real world. He suggests that enabling the kind of cognitive abilities observed in biological brains necessitates considering not only the capacity for learning information but, more critically, the capacity for learning how to act effectively within the world. This focus on action-oriented learning distinguishes the approach.

The Conventional Path: Deep Learning and its Limitations

Traditionally, training AI to play games has heavily relied on a method known as deep reinforcement learning. This process involves training neural networks through extensive experimentation and iteratively adjusting their internal parameters based on positive or negative feedback received from their actions within the game environment. While this technique has successfully produced algorithms capable of surpassing human performance in many games, it typically requires a vast number of attempts and significant computational resources to achieve proficiency.

In contrast, Axiom has shown an impressive ability to master various simplified versions of popular video games, including those dubbed “drive,” “bounce,” “hunt,” and “jump.” It accomplishes this with substantially fewer training examples and reduced computational demands compared to conventional deep reinforcement learning methods. This efficiency is a key differentiator and suggests potential advantages for applications where data is scarce or real-time learning is essential.
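To make the trial-and-error training described above concrete, here is a minimal sketch of the feedback loop behind reinforcement learning. It swaps in tabular Q-learning on a hypothetical six-cell corridor rather than a deep neural network, so it illustrates only the update-from-reward idea, not any system mentioned in this article. The function name, environment, and parameter values are all assumptions.

```python
import random

def train_q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start in cell 0, reward in the last cell."""
    rng = random.Random(seed)
    goal = n_states - 1
    # q[state][action]; action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Explore with probability epsilon (or when both actions look equal),
            # otherwise take the action with the higher estimated value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The goal is terminal, so nothing further can be earned from it.
            best_next = 0.0 if next_state == goal else max(q[next_state])
            # Nudge the estimate toward reward plus discounted future value.
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q

q_table = train_q_learning()
# Greedy action per non-terminal cell (1 means "step right", toward the reward).
greedy_policy = [0 if left > right else 1 for left, right in q_table[:-1]]
print(greedy_policy)
```

Even this tiny corridor takes hundreds of episodes with the settings chosen here, which hints at why richer games can demand very large numbers of attempts.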

The objectives and core characteristics of this new approach align with critical challenges in the pursuit of Artificial General Intelligence (AGI). Experts in the field, like AI researcher François Chollet, who created the ARC 3 benchmark to evaluate AI’s ability to solve unfamiliar problems, recognize the significance of these efforts. Chollet is also exploring novel machine learning techniques and uses his benchmark to test models’ capacity to genuinely learn problem-solving skills rather than merely replicating existing solutions.

Chollet notes that this work appears highly original, which he views as a positive sign. He stresses the importance of researchers exploring new concepts and moving beyond the more crowded avenues of large language models and language models focused on reasoning, indicating a need for diverse approaches in the AI landscape.

Understanding the Core Concepts

Modern artificial intelligence primarily utilizes artificial neural networks. While these networks draw rough inspiration from the structure of biological brains, their operational mechanisms are fundamentally different. Over the past decade and a half, deep learning, a methodology built upon these neural networks, has facilitated remarkable advancements, enabling computers to perform complex tasks such as transcribing spoken language, identifying faces in images, and generating new images. More recently, deep learning has been the driving force behind the development of large language models that power increasingly sophisticated and conversational chatbots.

Axiom, however, offers a potentially more efficient pathway to building intelligent AI systems from the ground up. Its architecture could be particularly well-suited for creating agents that must learn effectively and rapidly from their experiences. The CEO of Verses, Gabe René, says that Axiom’s technology is already being explored by a finance company interested in modeling market dynamics. René describes Axiom as a novel architectural design specifically for AI agents, highlighting its key advantages: enhanced accuracy, superior efficiency, and a significantly smaller footprint. He emphasizes the company’s design philosophy, stating that these systems are “literally designed like a digital brain.”

It is interesting to note the historical connection between the free energy principle, which underpins Axiom, and the origins of modern AI. The free energy principle was, in fact, influenced by the work of British-Canadian computer scientist Geoffrey Hinton. Hinton, often regarded as a foundational figure in deep learning, received both the Turing Award and the Nobel Prize for his pioneering contributions to the field. Hinton and Karl Friston were colleagues at University College London for many years, highlighting the intertwined history of these different approaches to understanding and building intelligence.

Axiom’s Architecture and Operation

The power of Axiom lies in its fundamentally different approach compared to the pattern recognition and function approximation that characterize much of deep learning. Instead of purely learning input-output mappings from massive datasets, Axiom builds and refines an internal model of the world it inhabits. This model isn’t just a static representation; it’s a dynamic system capable of generating predictions about future states based on current observations and potential actions.

Here’s a deeper look at its operational principles:

- Prior Knowledge: Axiom is equipped with pre-existing knowledge, specifically regarding the physical interactions of objects within its environment. This isn’t learned from scratch through trial and error in the same way a typical neural network might start with a blank slate. This inherent understanding provides a powerful head start, allowing the system to make more informed initial predictions and reducing the need for vast amounts of exploratory data.

- Modeling Expectations: Based on its prior knowledge and current observations, Axiom constructs expectations about how the world will behave. For instance, in a game involving physics, it expects objects to fall, collide, and react according to physical principles it “understands” from its initial programming or design.

- Active Inference: This is the core learning mechanism. Axiom compares its predicted outcomes with the actual observations it receives from the game world. Any discrepancy between expectation and reality generates “prediction error.” The system then acts to minimize this error. This minimization can happen in two primary ways:

  - By updating its internal model to better match observed reality (learning).

  - By taking actions in the world that make the observed reality match its current model (acting).

This active inference loop is where the “agentic” nature comes into play. The system is not passively learning from data; it is actively interacting with its environment, making predictions, and adjusting its internal state (its model) and its external actions to reduce the gap between what it expects and what it perceives. This continuous cycle of prediction, observation, and error minimization allows Axiom to learn and adapt in a far more dynamic and potentially efficient manner than systems that rely purely on backward propagation of errors through layers of artificial neurons trained on static datasets.
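The two routes for reducing prediction error can be sketched in a few lines of Python. This is a toy scalar loop written in the spirit of active inference, not Axiom's algorithm: the full framework works with probabilistic beliefs, whereas this sketch uses simple proportional updates. The function name, the `target` (standing in for what the agent expects to observe), and the step sizes are all assumptions chosen for illustration.

```python
import random

def run_prediction_error_loop(steps=200, target=5.0, lr_belief=0.3,
                              lr_action=0.2, noise=0.05, seed=0):
    """Toy loop: reduce prediction error by revising a belief and by acting."""
    rng = random.Random(seed)
    world = 0.0    # true hidden state, e.g. a paddle position (hypothetical)
    belief = 0.0   # the agent's current estimate of that state
    errors = []
    for _ in range(steps):
        # The agent only ever sees a noisy observation of the world.
        observation = world + rng.gauss(0.0, noise)
        # Gap between what was observed and what the belief predicted.
        sensory_error = observation - belief
        # Gap between the belief and what the agent expects to see (assumed prior).
        prior_error = target - belief
        # Route 1 (learning): revise the belief to shrink both gaps.
        belief += lr_belief * (sensory_error + prior_error)
        # Route 2 (acting): push the world so observations match the belief.
        world += lr_action * (belief - observation)
        errors.append(abs(sensory_error))
    return world, belief, errors

final_world, final_belief, error_trace = run_prediction_error_loop()
print(round(final_world, 2), round(final_belief, 2))
```

In this sketch the belief and the world state both settle near the expected value, and the prediction error shrinks to roughly the level of the sensor noise. Neither update alone achieves that: without the action step the world never moves, and without the belief step the agent has nothing to steer toward.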

Efficiency in Practice: Mastering Games

Axiom’s reported success with simplified video games like drive, bounce, hunt, and jump matters mainly because of how efficiently that mastery is achieved. Unlike deep reinforcement learning agents that might require millions or even billions of gameplay frames to learn optimal strategies, Axiom succeeds with significantly fewer examples.

Let’s consider the implications of this efficiency:

- Reduced Data Needs: Learning from fewer examples means the system requires less data collection, which is often a bottleneck in real-world AI applications. Real-world data can be expensive, difficult to acquire, or even dangerous to collect through random experimentation.

- Lower Computational Cost: Fewer examples generally mean less computing power is required for training. This makes the approach more accessible and potentially deployable on less powerful hardware, broadening its potential applications.

- Faster Adaptation: Learning efficiently implies faster adaptation to new environments or changes within an environment. An agent that can quickly understand the rules of a new situation and adapt its behavior will be far more capable in dynamic, real-world scenarios than one that requires extensive retraining.

- Potential for Real-Time Learning: The active inference mechanism, constantly updating the model based on live observations, is inherently suited for real-time learning. This is crucial for agents operating in environments where conditions are constantly changing and decisions must be made on the fly, such as robotics, autonomous navigation, or complex simulation environments like financial markets.

No head-to-head figures are given here (for example, “Axiom mastered Game X in Y examples vs. DRL in Z examples”), so the comparison remains qualitative: a difference in learning speed and resource requirements. The success in these games still serves as a concrete demonstration of the system’s ability to build functional models of dynamic environments rapidly.

Implications for Agentic AI

The term “agentic AI” refers to AI systems designed to act autonomously and interact with their environment to achieve goals. Building effective AI agents for the real world presents significant challenges. Unlike playing a game with fixed rules and clear rewards, the real world is complex, unpredictable, and requires continuous adaptation.

The capabilities demonstrated by Axiom, particularly its efficiency and real-time learning potential rooted in modeling the environment and active inference, appear highly relevant for developing sophisticated agents. Here’s why:

- Modeling Reality: Real-world agents need to understand more than just patterns in data; they need to understand the underlying principles governing their environment. A system that builds an internal model of physics, causality, and object interactions, as Axiom does in simplified game environments, is better equipped to handle novel situations and make predictions in complex settings.

- Learning How to Act: As Karl Friston noted, real brains learn not just what things are but how to interact with them effectively. Active inference directly addresses this by linking perception (observing prediction error) with action (acting to minimize error or update the model). An agent driven by active inference is inherently driven to explore and learn how its actions affect the world, a crucial aspect of agentic behavior.

- Handling Uncertainty: The real world is filled with uncertainty. Systems based on probabilistic modeling, which is often associated with active inference and the free energy principle, are potentially better suited to handle incomplete or noisy information and make robust decisions under uncertainty.

- Generalization: While the games tested are simplified, the process of building a predictive model and using active inference could, in theory, generalize more effectively to new, related tasks or environments compared to systems that have only learned to recognize patterns in specific datasets. Learning the “rules” of interaction is more powerful than just memorizing outcomes.
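The point about handling uncertainty can be made concrete with a small example of probabilistic belief updating. The sketch below applies Bayes' rule to a hypothetical question (is a ball moving left or right?) given a noisy sensor. The scenario, the function name, and the sensor reliability figures are assumptions for illustration and are not drawn from Axiom.

```python
def bayes_update(prior, likelihood):
    """Return P(state | reading) from P(state) and P(reading | state)."""
    unnormalized = {state: prior[state] * likelihood[state] for state in prior}
    evidence = sum(unnormalized.values())
    return {state: p / evidence for state, p in unnormalized.items()}

# Start undecided about the ball's direction.
belief = {"left": 0.5, "right": 0.5}
# Assumed sensor model: probability of reading "right" under each true direction.
reads_right = {"left": 0.2, "right": 0.8}

# Three consecutive "right" readings from the noisy sensor.
for _ in range(3):
    belief = bayes_update(belief, reads_right)
print(belief)
```

Each noisy reading shifts the belief without ever making it absolute, so a single bad reading cannot flip the agent's view outright. That graded confidence is what lets a probabilistic agent keep acting sensibly when information is incomplete.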

The potential application in modeling financial markets, as mentioned by Verses CEO Gabe René, illustrates this point. Financial markets are dynamic, complex systems with intricate interactions. An agent that can build a predictive model of market behavior based on principles and actively refine its model and trading strategies based on real-time data and outcomes could potentially navigate these complexities more effectively than a system trained purely on historical data patterns.

Contrasting Paradigms: Deep Learning vs. Model-Based Approaches

It’s important to understand the fundamental difference in philosophy between the dominant deep learning paradigm and the model-based approach exemplified by Axiom.

| Feature | Deep Learning (Typical) | Axiom (Model-Based/Active Inference) |
|---|---|---|
| Primary Goal | Learn mappings from input to output; pattern recognition. | Build internal model of environment; predict and act. |
| Learning Method | Trained on large datasets; backpropagation; reinforcement learning from trial-and-error. | Builds predictive model; updates model and actions based on prediction error (active inference). |
| Data Efficiency | Often requires massive datasets and many examples. | Can learn effectively from significantly fewer examples. |
| Computation | Can be computationally expensive, especially for training. | Potentially requires less computation for learning tasks. |
| Knowledge | Learns features and representations from data alone. | Can incorporate prior knowledge about the world’s dynamics. |
| Real-Time Adaptation | Can be challenging; often requires retraining or fine-tuning. | Designed for continuous, real-time model updates and adaptation. |
| Transparency | Often considered “black boxes”; difficult to interpret internal reasoning. | Model-based approaches can potentially offer more interpretable internal states (the model itself). |

This comparison highlights why researchers are excited about alternatives. While deep learning has achieved undeniable success in tasks like image recognition and language generation, its reliance on massive data and computational power, and its less direct path to understanding causality and interaction, present hurdles for building truly autonomous and adaptive agents for the complex physical or dynamic real world. Axiom’s approach, rooted in modeling and active prediction error minimization, offers a different path that might be more aligned with the demands of agentic behavior.

Perspectives on the Future of AI Architecture

The development of systems like Axiom underscores a growing interest in exploring foundational alternatives to the current deep learning paradigm. The success of large language models has captured significant attention, but researchers like François Chollet highlight the need for diversity in AI research directions. Focusing solely on scaling up existing architectures might not be sufficient to achieve true general intelligence or build agents capable of robustly interacting with the physical world.

Chollet’s work on benchmarks like ARC 3, which test a system’s ability to generalize and solve novel problems, reflects this perspective. He emphasizes that true intelligence involves more than just mimicking existing examples or recognizing patterns; it involves understanding underlying principles and being able to apply that understanding to entirely new situations. Axiom’s model-building approach seems inherently more aligned with this goal than purely data-driven methods.

Gabe René’s description of Axiom as a “new architecture for AI agents” designed “like a digital brain” further reinforces the idea that this is not just an incremental improvement on existing methods but a potentially different way of conceiving AI systems. The focus on real-time learning, efficiency, and a smaller footprint suggests a vision for AI that is more dynamic, resource-conscious, and potentially more capable of operating outside of highly controlled or data-rich environments.

The historical link to Geoffrey Hinton, a pioneer of deep learning, adds another layer to this narrative. It suggests that the pursuit of understanding intelligence, whether biological or artificial, involves exploring multiple avenues. The free energy principle, while different in its formalization and application in Axiom, shares some distant conceptual roots with the ideas that drove early neural network research – the desire to create systems that learn and process information in a manner inspired by biology.

Conclusion: A New Direction for Intelligent Agents

The development of Axiom represents an exciting step in the exploration of alternative machine learning architectures. By drawing inspiration from theories of brain function, specifically the free energy principle and the concept of active inference, Axiom offers a distinct contrast to the prevalent deep learning paradigm. Its demonstrated ability to master games with remarkable efficiency, requiring significantly fewer examples and less computation, points towards a potential solution for some of the key limitations of current AI, particularly when it comes to building robust and adaptable agents for the real world.

The focus on constructing internal models of the environment, incorporating prior knowledge, and driving learning through the minimization of prediction error provides a powerful mechanism for understanding and interacting with dynamic systems. While deep learning has undeniably transformed numerous fields, approaches like Axiom, centered on model-based reasoning and active learning, may prove essential for creating AI agents capable of navigating complexity, adapting to uncertainty, and learning “how to act in the world” in a manner that more closely resembles biological intelligence. As researchers continue to explore diverse pathways, systems like Axiom offer a compelling vision for the future of AI, promising more efficient, capable, and potentially more general-purpose intelligent agents.
