Databricks Introduces Powerful New Tools to Enhance Enterprise AI and Agent Development

The landscape of enterprise data and artificial intelligence is constantly evolving, presenting organizations with both immense opportunities and complex challenges. Building sophisticated AI applications and intelligent agents that can effectively leverage vast amounts of proprietary data requires not just powerful models, but also robust, scalable, and user-friendly infrastructure. Recognizing this need, Databricks recently unveiled a suite of new tools and platforms designed to streamline and accelerate the development of AI solutions within the enterprise. These innovations, including Lakebase, Lakeflow Designer, and Agent Bricks, aim to empower organizations to more easily transform their data intelligence into impactful AI applications and agents.

For years, Databricks has focused on helping enterprises build AI applications and agents that can reason over their unique, company-specific data using the Data Intelligence Platform. The introduction of these new capabilities marks a significant step towards creating a more comprehensive and accessible environment for the entire AI lifecycle, from data preparation and management to agent creation and business intelligence integration. The goal is to simplify complexity, enhance collaboration, and provide the foundational components necessary for the next wave of enterprise AI adoption.

The rapid pace of AI development and the increasing sophistication of AI agents are reshaping business operations across industries. Enterprises are eager to harness this potential, but are often hindered by legacy systems and disconnected data stacks. A fundamental shift is needed to support the dynamic, data-intensive workloads that AI agents demand. The new tools from Databricks are designed to address these challenges head-on, providing a modern, integrated platform built for the unique requirements of the AI era.

Lakebase: A Modern Database for the AI Era

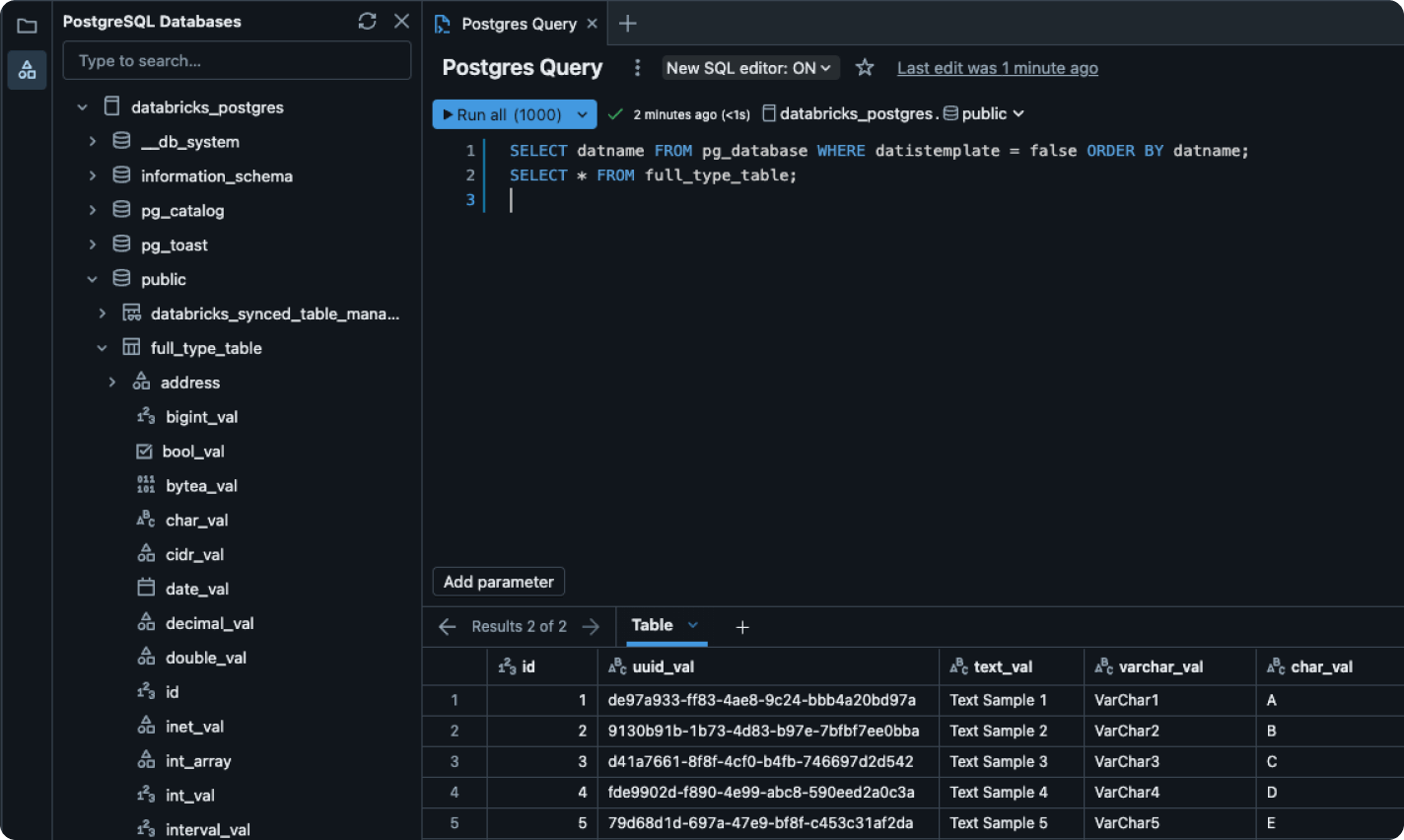

At the forefront of Databricks’ new announcements is Lakebase, a managed Postgres database specifically engineered to support AI applications and agents. This innovation introduces a critical operational database layer directly into the Databricks Data Intelligence Platform, bridging the gap between traditional operational workloads and the analytical capabilities of the lakehouse architecture.

Traditional operational databases, while foundational for many applications, were primarily designed for slowly changing data and predictable transaction patterns. The dynamic, often high-volume requirements of modern AI applications and autonomous agents, which may need to read and write data frequently and react in near real-time, can strain these older architectures. Lakebase is built to overcome these limitations by incorporating features essential for the demands of AI workloads.

A key innovation in Lakebase is its continuous autoscaling capability. This allows the database to automatically adjust its resources based on the fluctuating demands of agent workloads, ensuring consistent performance and cost efficiency without manual intervention. This is particularly crucial for agent-driven applications, which can experience unpredictable spikes in activity as they interact with data and execute tasks.
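To make the idea of autoscaling concrete, here is a minimal sketch of a sizing rule. It is not Lakebase's actual policy, which the announcement does not describe. The function name `target_compute_units`, the load signal (active connections), and all thresholds are hypothetical and chosen only for illustration.

```python
import math


def target_compute_units(active_connections, connections_per_unit=50,
                         min_units=1, max_units=16):
    """Hypothetical sizing rule: pick enough units for the load, within fixed bounds."""
    # Units needed to serve the current load at the assumed per-unit capacity.
    needed = math.ceil(active_connections / connections_per_unit)
    # Clamp so the database never scales below the floor or above the ceiling.
    return max(min_units, min(max_units, needed))


# A quiet period, a moderate load, and a sudden spike from agent activity.
for load in (0, 40, 120, 5000):
    print(load, "->", target_compute_units(load))
```

A managed service would evaluate a rule of this kind continuously and apply the result without operator involvement, which is what distinguishes autoscaling from manually resizing an instance.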

Lakebase is deeply integrated with the lakehouse, enabling seamless unification of operational and analytical data. This integration eliminates the need for complex ETL processes to move data between transactional systems and data warehouses or data lakes, providing a single source of truth and enabling AI agents to access the most current data for their operations and reasoning.

The architecture of Lakebase offers several significant benefits:

- Separation of Compute and Storage: This architectural choice provides flexibility and scalability, allowing compute resources to be scaled independently of storage, optimizing costs and performance.

- Built on Open Source (Postgres): Leveraging the popular and robust Postgres database as its foundation means Lakebase benefits from a well-understood and widely supported technology, making it easier for developers to adopt and integrate.

- Unique Branching Capability: This feature is particularly valuable for agent development. It allows developers to create isolated branches of the database for experimentation, testing, and development without impacting the main production environment. This facilitates rapid iteration and safe development of AI agents.

- Automatic Syncing with Lakehouse Tables: Data in Lakebase can be automatically synced to and from lakehouse tables, ensuring consistency and providing fresh data for both operational AI tasks and broader analytical initiatives.

- Fully Managed Service: As a managed service, Lakebase reduces the operational burden on IT teams, handling tasks like patching, backups, and scaling automatically.
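The branching idea in the list above can be illustrated with a toy copy-on-write model. This is a conceptual sketch only, not Lakebase's implementation or API. The `Branch` class and its methods are hypothetical names, and a plain dictionary stands in for database storage.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Branch:
    """Toy copy-on-write branch: holds only its own changes and reads through to its parent."""
    name: str
    parent: Optional["Branch"] = None
    changes: dict = field(default_factory=dict)

    def get(self, key):
        # Walk up the parent chain until some branch has a value for the key.
        node = self
        while node is not None:
            if key in node.changes:
                return node.changes[key]
            node = node.parent
        raise KeyError(key)

    def put(self, key, value):
        # Writes land only on this branch; the parent is never modified.
        self.changes[key] = value

    def branch(self, name):
        # Creating a branch copies no data; the child just points at its parent.
        return Branch(name=name, parent=self)


main = Branch("main")
main.put("order:1", {"status": "shipped"})

dev = main.branch("agent-experiment")
dev.put("order:1", {"status": "refunded"})  # visible only on the dev branch
dev.put("order:2", {"status": "draft"})

print(main.get("order:1"))
print(dev.get("order:1"))
```

Because a branch stores only its differences, creating one is cheap, and an agent under test can write freely without touching production data. One simplification to note: in this toy, later writes to the parent stay visible to the child unless overridden, whereas a real system would branch from a fixed point-in-time snapshot.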

Ali Ghodsi, co-founder and CEO of Databricks, highlighted the significance of Lakebase, stating, “We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform. Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks. As AI agents reshape how businesses operate, Fortune 500 companies are ready to replace outdated systems. With Lakebase, we’re giving them a database built for the demands of the AI era.”

The launch of Lakebase is supported by a broad ecosystem of partners, facilitating seamless integration with a variety of third-party tools for data integration, business intelligence, and governance. The extensive list of supported partners underscores Databricks’ commitment to an open platform and includes:

- Accenture

- Airbyte

- Alation

- Anomalo

- Atlan

- Boomi

- CData

- Celebal Technologies

- Cloudflare

- Collibra

- Confluent

- Dataiku

- dbt Labs

- Deloitte

- EPAM

- Fivetran

- Hightouch

- Immuta

- Informatica

- Lovable

- Monte Carlo

- Omni

- Posit

- Qlik

- Redis

- Retool

- Sigma

- Snowplow

- Spotfire

- Striim

- Superblocks

- ThoughtSpot

- Tredence

Lakebase is currently available as a public preview, with Databricks planning to introduce several significant improvements and expanded capabilities in the coming months based on user feedback. This new operational database layer is poised to become a cornerstone for building high-performance, data-intensive AI applications and agents within the enterprise.

Lakeflow Designer: Simplifying Data Pipelines with No-Code and AI

Getting high-quality, production-ready data to the right place is a fundamental challenge in building effective AI applications and agents. Data pipelines are the engine that powers this process, but building and managing them can be complex and time-consuming, often requiring specialized expertise. Databricks is addressing this challenge with Lakeflow Designer, a new no-code ETL (Extract, Transform, Load) capability designed to simplify the creation of production data pipelines.

Lakeflow Designer is built on the foundation of Lakeflow, Databricks’ existing solution for data engineers focused on building robust data pipelines. Lakeflow itself is now generally available and includes important new features such as Declarative Pipelines, a new Integrated Development Environment (IDE), new point-and-click ingestion connectors via Lakeflow Connect, and the ability to write directly to the lakehouse using Zerobus.

Lakeflow Designer extends these capabilities with a visual interface. Its drag-and-drop UI lets users design and configure data pipelines, including data analysts and business users without extensive coding experience. Opening pipeline creation to these users is crucial for scaling AI efforts across an organization.

Adding further ease of use and accelerating the process, Lakeflow Designer incorporates an AI assistant. This intelligent assistant allows users to describe the data transformation or pipeline logic they need using natural language prompts. The AI assistant can then translate these descriptions into executable pipeline configurations, significantly reducing the manual effort involved in pipeline development. This combination of a visual interface and AI assistance aims to lower the barrier to entry for creating sophisticated data workflows.

The goal of Lakeflow Designer is to make it possible for a wider range of users within an organization to contribute to the process of preparing data for AI and analytical use cases. By simplifying the technical complexities of ETL, teams can accelerate the path from raw data to insights and intelligent applications.

Ali Ghodsi emphasized the importance of this accessibility: “There’s a lot of pressure for organizations to scale their AI efforts. Getting high-quality data to the right places accelerates the path to building intelligent applications. Lakeflow Designer makes it possible for more people in an organization to create production pipelines so teams can move from idea to impact faster.”

Lakeflow Designer is set to become available as a public preview soon, promising to transform how organizations build and manage their data pipelines, making the crucial step of data preparation more efficient and accessible for everyone.

Agent Bricks: Accelerating the Creation of Enterprise AI Agents

Building effective and reliable AI agents for enterprise-specific tasks is another significant hurdle for organizations. These agents need to understand context, interact with proprietary data, and perform actions accurately and safely within the business environment. Databricks’ new tool, Agent Bricks, is designed to dramatically simplify and accelerate this process.

Agent Bricks provides a streamlined workflow for creating agents tailored to enterprise use cases. The user begins by describing the specific task or objective they want the agent to accomplish. Critically, they can then connect the agent to their enterprise’s relevant proprietary data hosted on the Databricks platform. This connection is vital, as the ability for AI agents to leverage domain-specific data is key to their effectiveness and accuracy within a business context.

Behind the scenes, Agent Bricks automates many of the complex steps involved in agent development. One notable feature is its ability to create synthetic data based on the customer’s actual data. This synthetic data supplements the agent’s training data, helping the agent learn and generalize better, especially for edge cases or scenarios that are poorly represented in the real dataset.

Furthermore, Agent Bricks utilizes a range of optimization techniques to refine the agent’s performance. These techniques help ensure the agent is efficient, reliable, and capable of performing its designated tasks effectively. By automating training data generation and optimization, Agent Bricks removes much of the manual effort and technical expertise that traditional agent development workflows require.

The promise of Agent Bricks is to enable businesses to move from an initial idea for an AI agent to a production-grade application with unprecedented speed and confidence. It aims to remove the guesswork and manual tuning often associated with developing custom agents, while leveraging the underlying security and governance features of the Databricks platform.

According to Ali Ghodsi, “For the first time, businesses can go from idea to production-grade AI on their own data with speed and confidence, with control over quality and cost tradeoffs. No manual tuning, no guesswork and all the security and governance Databricks has to offer. It’s the breakthrough that finally makes enterprise AI agents both practical and powerful.”

Agent Bricks is positioned to empower enterprises to rapidly deploy intelligent agents that can automate tasks, assist decision-making, and interact with internal systems, all while grounded in the company’s unique data.

Other Key Announcements and Initiatives

Beyond the marquee announcements of Lakebase, Lakeflow Designer, and Agent Bricks, Databricks introduced several other significant updates aimed at enhancing the platform’s capabilities, expanding accessibility, and fostering innovation.

One notable addition is Databricks One, a new platform designed to bring data intelligence directly to business teams. Databricks One allows business users to interact with their data using natural language queries, eliminating the need for technical intermediaries or complex query languages. It also supports AI-powered business intelligence (BI) dashboards, providing intuitive visualizations and insights derived from the underlying data. Furthermore, users can access custom-built Databricks applications tailored to specific business needs within the Databricks One environment. This initiative aims to democratize access to data intelligence, enabling faster, data-driven decision-making across the organization.

Recognizing the importance of education and accessibility for fostering a broader talent pool in data and AI, Databricks announced the Databricks Free Edition. This offers a free tier of the platform, allowing individuals to experiment with Databricks capabilities and learn the skills needed for modern data and AI workflows. Complementing this, the self-paced courses offered through Databricks Academy are also now available for free. These changes are specifically designed to support students, aspiring professionals, and developers looking to gain hands-on experience with the Databricks platform and the broader data intelligence ecosystem.

Unity Catalog, Databricks’ unified governance layer for data and AI, is receiving several significant enhancements. A public preview was announced for full support of Apache Iceberg tables within Unity Catalog. Apache Iceberg is a popular open table format known for its performance and flexibility, and its integration into Unity Catalog provides users with greater choice and interoperability for managing their data lakes. Upcoming features for Unity Catalog include new metrics for better monitoring and understanding of data usage, a curated internal marketplace to facilitate sharing and discovery of certified data products within an organization, and the integration of Databricks’ AI Assistant to further simplify data discovery and governance tasks through natural language interfaces.

Finally, Databricks made a valuable contribution to the open-source community by donating its declarative ETL framework to the Apache Spark project. This framework will now be known as Apache Spark Declarative Pipelines. This donation underscores Databricks’ commitment to the open-source technologies that form the backbone of many modern data platforms and ensures that the benefits of declarative pipeline development are available to the wider Apache Spark community.
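For readers unfamiliar with the declarative style, the following is a minimal sketch in plain Python. It is not the Apache Spark Declarative Pipelines API. The `dataset` decorator, `run_pipeline`, and the sample data are hypothetical, and the convention that a function's parameter names identify its upstream datasets is an assumption made for this example.

```python
import inspect
from graphlib import TopologicalSorter  # standard library, Python 3.9+

_datasets = {}


def dataset(fn):
    """Register a function as a dataset; its parameter names are its upstream datasets."""
    _datasets[fn.__name__] = fn
    return fn


# Datasets are declared in arbitrary order; the runner works out the sequence.
@dataset
def revenue(valid_orders):
    return sum(row["amount"] for row in valid_orders)


@dataset
def valid_orders(raw_orders):
    return [row for row in raw_orders if row["amount"] > 0]


@dataset
def raw_orders():
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": -5.0}, {"id": 3, "amount": 12.5}]


def run_pipeline():
    """Derive the execution order from declared dependencies, then compute each dataset once."""
    graph = {name: set(inspect.signature(fn).parameters) for name, fn in _datasets.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        fn = _datasets[name]
        args = [results[dep] for dep in inspect.signature(fn).parameters]
        results[name] = fn(*args)
    return results


results = run_pipeline()
print(results["revenue"])
```

The author states what each dataset is and what it depends on, and the framework decides how and in what order to compute it. That separation is the core of the declarative approach. A production framework would add incremental processing, retries, and data quality checks on top of the same dependency graph.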

These collective announcements demonstrate Databricks’ ongoing efforts to build a comprehensive, integrated, and accessible platform for the entire data and AI lifecycle. By introducing tools that simplify data management, streamline pipeline creation, accelerate agent development, empower business users, and enhance governance, Databricks aims to provide enterprises with the capabilities they need to successfully navigate the complexities of the AI era and unlock the full potential of their data.
