The Critical Importance of Data Quality When Training AI Models: Avoiding “Garbage In, Garbage Out”

Artificial intelligence is rapidly transforming industries, promising unparalleled efficiency, insights, and innovation. Organizations worldwide are investing heavily in AI projects, eager to leverage the power of machine learning and deep learning. However, the success of any AI model hinges on a fundamental principle: the quality of the data it is trained on. As the adage goes, “garbage in, garbage out,” and nowhere does that adage apply more directly than in AI. Without high-quality data, even the most sophisticated algorithms and powerful computing resources cannot deliver reliable or meaningful results, and the investment in AI goes to waste.

The Foundation of AI: Data

Data serves as the lifeblood of artificial intelligence. Just as a student learns from textbooks and experiences, an AI model learns from the data it processes during training. This training data allows the model to identify patterns, understand relationships, and build the internal logic required to perform its designated tasks, whether that’s classifying images, predicting market trends, generating text, or diagnosing medical conditions.

The sheer volume of data available today is unprecedented. We generate petabytes of information daily from diverse sources: sensors, transactions, social media, scientific experiments, and more. This abundance might lead one to believe that quantity alone is sufficient for training powerful AI. However, the effectiveness of learning is determined not just by how much data is available, but by its quality, relevance, and structure. Training on flawed data is like studying from a damaged or error-ridden textbook: the resulting knowledge will be incomplete, skewed, and ultimately wrong.

Defining High-Quality Data for AI Training

What exactly constitutes “high-quality” data in the context of training AI models? It’s more than just having a lot of records. High-quality data possesses several critical characteristics that ensure the model learns accurately and generalizes well to new, unseen data.

Key dimensions of data quality include:

- Accuracy: The data must correctly reflect the real-world phenomena it represents. Inaccurate data, such as incorrect measurements, mislabeled categories, or factual errors, will lead the model to learn incorrect patterns.

- Completeness: Data should contain all the necessary information for the task. Missing values or incomplete records can bias the model’s learning or force it to make assumptions that reduce its reliability.

- Consistency: Data should be uniform across all sources and records. Inconsistencies in formatting, units of measurement, or definitions can confuse the model and lead to erratic behavior.

- Timeliness: Data must be current and relevant to the problem being solved. Using outdated data to predict future trends, for instance, will yield poor results.

- Validity: Data must conform to predefined rules and constraints. For example, a field expecting numerical values should not contain text, and dates should fall within a reasonable range.

- Uniqueness: Duplicate records can skew training results, making the model overly sensitive to certain data points or patterns. Deduplication is a crucial step.

- Richness/Context: Data should be well-defined and potentially augmented with useful metadata. This context helps the AI model understand the meaning and relevance of different data points, providing a richer foundation for learning.
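Several of these dimensions can be measured rather than just described. The following is a minimal sketch, assuming records are held as Python dicts; the field names, the five-digit ZIP pattern, and the accepted date range are hypothetical rules chosen for illustration, not a standard. It scores completeness, validity, and uniqueness as simple ratios between 0 and 1.

```python
import re
from datetime import date

# Hypothetical customer records; field names and rules are illustrative only.
RECORDS = [
    {"id": 1, "name": "Jane Doe", "zip": "10001", "signup": date(2023, 5, 1)},
    {"id": 2, "name": "John Roe", "zip": None, "signup": date(2024, 1, 15)},
    {"id": 3, "name": "Jane Doe", "zip": "10001", "signup": date(2023, 5, 1)},
    {"id": 4, "name": "Max Moe", "zip": "ABCDE", "signup": date(1899, 1, 1)},
]


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def validity(records, field, rule):
    """Share of non-missing values in `field` that satisfy `rule`."""
    values = [r[field] for r in records if r.get(field) not in (None, "")]
    if not values:
        return 1.0  # nothing to check, so vacuously valid
    return sum(1 for v in values if rule(v)) / len(values)


def uniqueness(records, keys):
    """Share of records that are distinct on the given key fields."""
    distinct = {tuple(r.get(k) for k in keys) for r in records}
    return len(distinct) / len(records)


def zip_ok(value):
    # Hypothetical rule: exactly five digits.
    return re.fullmatch(r"\d{5}", value) is not None


def date_ok(value):
    # Hypothetical rule: signups cannot predate 1990 or lie in the future.
    return date(1990, 1, 1) <= value <= date.today()


report = {
    "zip_completeness": completeness(RECORDS, "zip"),
    "zip_validity": validity(RECORDS, "zip", zip_ok),
    "signup_validity": validity(RECORDS, "signup", date_ok),
    "uniqueness": uniqueness(RECORDS, ["name", "zip", "signup"]),
}
print(report)
```

Each score points at a different fix: low completeness calls for imputation or better capture, low validity for rule enforcement at ingestion, and low uniqueness for deduplication.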

According to Robert Stanley, senior director of special projects at Melissa, adopting data quality best practices is paramount before embarking on an AI project. He emphasizes the need for data that is correctly typed and fielded. Data should also be deduplicated and possess sufficient richness. It must be accurate and complete and, ideally, augmented with or well defined by extensive metadata. This metadata provides essential context for the AI model to function effectively.

A “Gold Standard” for critical business data, as suggested by Stanley, involves sourcing data from at least three distinct sources and ensuring it is dynamically updated. This multi-source approach helps to cross-validate information and improve accuracy.
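One simple way to cross-validate a field across sources is a majority vote. The sketch below assumes one candidate value per source and only trivial normalization (trimming whitespace and case-folding); `reconcile` is a hypothetical helper for illustration, not a Melissa API, and real matching of addresses or names would need far more careful standardization.

```python
from collections import Counter


def reconcile(values, min_agree=2):
    """Return the value that at least `min_agree` sources agree on, else None.

    `values` holds one candidate per source; None means that source had no value.
    The returned value is the normalized (stripped, case-folded) form.
    """
    normalized = [v.strip().casefold() for v in values if v is not None]
    if not normalized:
        return None
    value, count = Counter(normalized).most_common(1)[0]
    return value if count >= min_agree else None


# Two of three hypothetical sources agree, so their value wins.
print(reconcile(["12 Main St", "12 main st ", "14 Main St"]))
# No two sources agree, so the field is left unresolved for review.
print(reconcile(["A", "B", "C"]))
```

Returning None instead of guessing when sources disagree keeps unresolved values visible, so they can be routed to a human or a further reference check.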

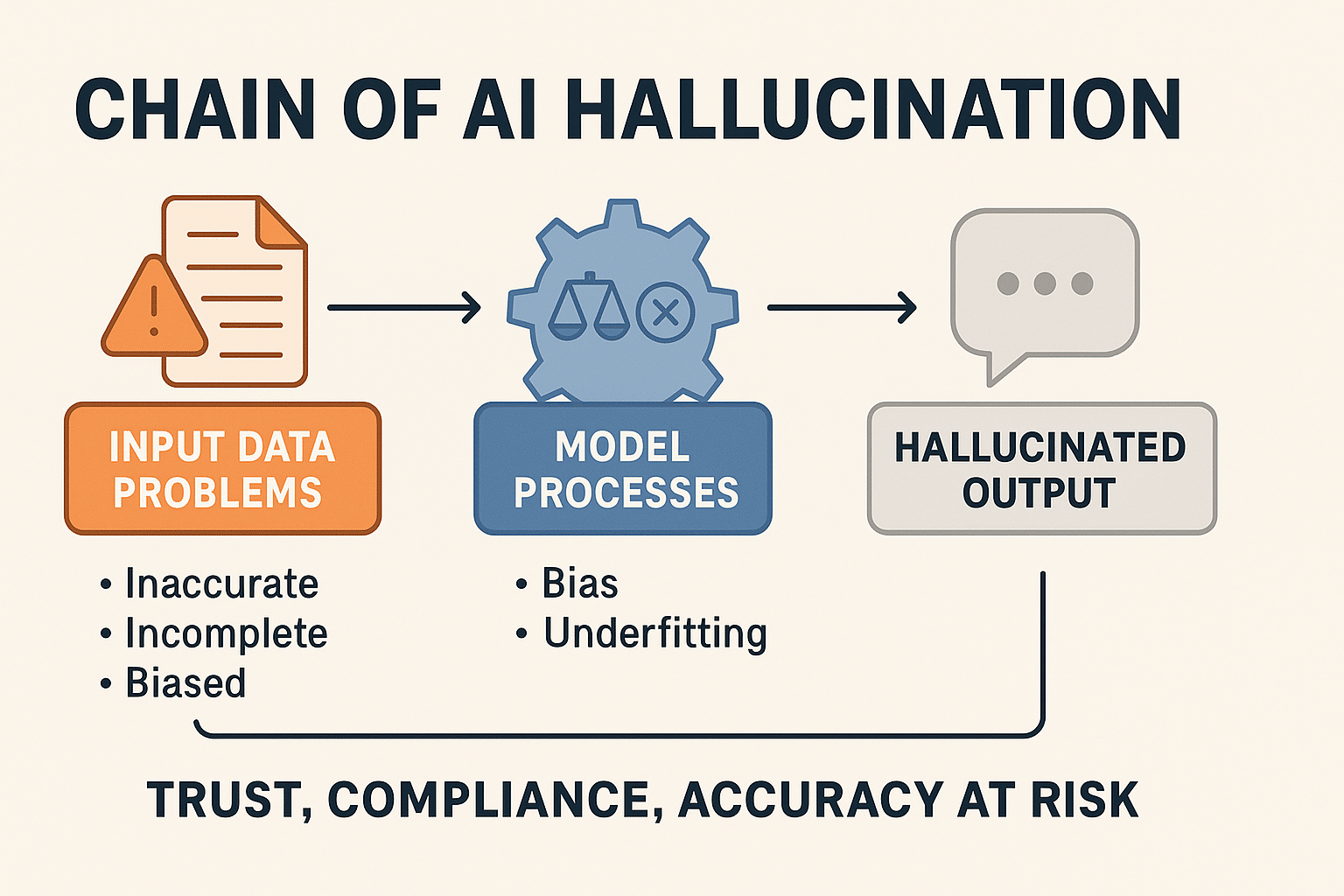

The “Garbage In, Garbage Out” Principle in Action

When AI models are trained on data lacking these quality attributes, the consequences can be severe and far-reaching. The “garbage in, garbage out” principle directly applies: flawed input inevitably leads to flawed output.

Consider the impact of specific data quality issues:

- Incorrect Typing or Fielding: If numerical data is treated as text, or if values are placed in the wrong columns (e.g., a name appearing in an address field), the model will misinterpret the information. Stanley notes that incorrect fielding can produce strange and unexpected outputs: the model may believe it is working with one type of information (a noun, say, or a number) when it is actually dealing with another (a verb, or a string of text).

- Duplicates: Training on duplicate records makes the model believe certain patterns or instances are far more common than they are in reality. This can lead to overfitting, where the model performs well on the training data but poorly on new data.

- Incompleteness: Models trained on incomplete data may struggle to make accurate predictions when presented with records that have missing information in real-world scenarios. They might also develop biases if the missing data is systematically absent for certain subgroups.

- Inaccuracies: Plainly incorrect data points can throw off the model’s learning process, leading it to identify false patterns or correlations. A small number of mislabeled examples in a large dataset may subtly degrade performance, while widespread inaccuracies can render the model useless.

- Inconsistencies: Variations in how data is represented or formatted can make it difficult for the model to recognize equivalent entries, hindering its ability to generalize.
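The effect of duplicates on what a model sees is easy to demonstrate. In this minimal sketch, with hypothetical transaction data, a single "fraud" record ingested three times makes fraud look twice as common as it really is in the labelled data; exact-match deduplication restores the true proportion.

```python
from collections import Counter

# Hypothetical labelled examples; the "fraud" row was ingested three times.
rows = [
    ("txn-1", "ok"),
    ("txn-2", "ok"),
    ("txn-3", "fraud"),
    ("txn-3", "fraud"),
    ("txn-3", "fraud"),
    ("txn-4", "ok"),
]


def label_shares(rows):
    """Fraction of rows carrying each label."""
    counts = Counter(label for _, label in rows)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def dedupe(rows):
    """Drop exact duplicate rows while preserving the original order."""
    seen, out = set(), []
    for row in rows:
        if row not in seen:
            seen.add(row)
            out.append(row)
    return out


before = label_shares(rows)         # fraud share is 3/6 = 0.5
after = label_shares(dedupe(rows))  # fraud share is 1/4 = 0.25
print(before, after)
```

Real duplicates are rarely exact copies (they differ in spacing, casing, or abbreviations), so production deduplication usually normalizes or fuzzy-matches records before comparing them.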

Beyond these technical issues, it’s also crucial to ensure the type of data used is appropriate for the specific model being built. Training a model designed for healthcare diagnostics on retail sales data, for example, would obviously yield irrelevant results, regardless of the quality of the sales data itself. The data must align with the problem domain.

The Peculiar Challenge of Large Language Models (LLMs)

Large Language Models (LLMs) present a unique manifestation of the “garbage in, garbage out” problem. These models are trained on vast amounts of text data, learning to generate human-like text based on patterns and probabilities. However, LLMs are known to sometimes “hallucinate,” producing responses that sound plausible and convincing but are factually incorrect.

According to Stanley, LLMs unfortunately have a strong inclination to please their users. This tendency can sometimes result in generating answers that appear compelling and correct at first glance, but are actually inaccurate. This characteristic makes the quality and reliability of their training data, and the subsequent validation of their outputs, even more critical. If the training data contains biases, misinformation, or inconsistencies, the LLM is likely to perpetuate or even amplify these flaws in its responses. While LLMs are powerful pattern-matching engines, they lack true understanding or consciousness; they cannot inherently discern truth from falsehood within their training data.

The Consequences of Training AI with Poor Data

The impact of poor data quality on AI models extends far beyond mere technical inaccuracies. The consequences can be significant, affecting business operations, decision-making, and trust.

Potential consequences include:

- Inaccurate Predictions and Decisions: Models trained on bad data will make unreliable predictions or recommendations, leading to poor business decisions, wasted resources, and missed opportunities.

- Biased Outcomes: If training data reflects existing societal biases (e.g., racial, gender, socioeconomic), the AI model will learn and perpetuate these biases. This can lead to unfair or discriminatory outcomes in areas like hiring, loan applications, or criminal justice.

- Wasted Resources: Significant time, effort, and financial resources are invested in building and training AI models. If the foundational data is poor, these resources are essentially thrown away.

- Loss of Trust: When AI systems produce unreliable or biased results, users and stakeholders lose trust in the technology. This can hinder adoption and undermine the perceived value of AI initiatives.

- Operational Inefficiencies: AI models are often integrated into automated workflows. Inaccurate AI outputs can disrupt these processes, leading to errors, delays, and increased operational costs.

- Regulatory and Compliance Risks: Using biased or inaccurate AI models can lead to non-compliance with regulations related to fairness, privacy, and data governance, potentially resulting in legal penalties and reputational damage.

Strategies for Ensuring Data Quality for AI

Given the critical role of data quality, organizations must implement robust strategies to ensure their training data is fit for purpose. This is not a one-time task but an ongoing process integrated into the entire data lifecycle.

Key strategies include:

- Data Profiling and Assessment: Begin by understanding the data you have. Data profiling tools can analyze datasets to identify patterns, anomalies, missing values, and inconsistencies. This provides a clear picture of the data’s current quality state.

- Data Cleaning and Transformation: Address identified quality issues. This involves:

  - Handling missing values (imputation, deletion).

  - Correcting inaccuracies (validation against reference data, data repair).

  - Resolving inconsistencies (standardizing formats, units, and values).

  - Deduplicating records.

  - Correcting typing and fielding errors.

- Data Validation: Establish rules and constraints to ensure incoming data meets quality standards. Implement validation checks during data ingestion and processing pipelines.

- Data Enrichment and Augmentation: Enhance the dataset by adding relevant information from external sources or creating new features from existing data. This can make the data richer and provide more context for the model.

- Data Governance and Stewardship: Establish clear ownership, policies, and processes for managing data throughout its lifecycle. Data stewards are responsible for defining and maintaining data quality rules.

- Utilizing Reference Data: Compare and validate internal data against trusted external reference datasets (like geographic databases, business registries, or demographic information) to improve accuracy and completeness. Melissa’s curated reference datasets, covering geographic, business, identification, and other domains, are examples of such resources.

- Automated Data Quality Tools: Leverage software tools designed for data cleaning, validation, profiling, and mastering. These tools can automate many of the time-consuming tasks involved in data quality management.

- Collaboration: Foster collaboration between data engineers, data scientists, domain experts, and business stakeholders. Domain experts can provide crucial context and validation for the data, ensuring it accurately reflects the real world.
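Several of the cleaning and validation steps listed above can be chained into a small pipeline. The sketch below is illustrative only: the field names, the country alias table, and the plausible weight range are hypothetical, and median imputation is just one of several reasonable ways to handle missing values. It standardizes units and categorical values, deduplicates, imputes, and then applies a validation gate.

```python
from statistics import median

# Hypothetical raw rows: weights arrive in mixed units, one is missing,
# country names are spelled inconsistently, and one row is duplicated.
RAW = [
    {"id": "a", "weight": "70 kg", "country": "USA"},
    {"id": "b", "weight": "154 lb", "country": "United States"},
    {"id": "c", "weight": None, "country": "us"},
    {"id": "a", "weight": "70 kg", "country": "USA"},
]

LB_TO_KG = 0.45359237
COUNTRY_ALIASES = {"usa": "US", "united states": "US", "us": "US"}  # illustrative


def to_kg(raw):
    """Parse '<number> <unit>' into kilograms; None stays None."""
    if raw is None:
        return None
    number, unit = raw.split()
    value = float(number)
    return value * LB_TO_KG if unit.lower() in ("lb", "lbs") else value


def clean(rows):
    # 1. Standardize units and categorical values.
    staged = [
        {
            "id": r["id"],
            "weight_kg": to_kg(r["weight"]),
            "country": COUNTRY_ALIASES.get(r["country"].strip().lower(), r["country"]),
        }
        for r in rows
    ]
    # 2. Deduplicate on the record id, keeping the first occurrence.
    #    Doing this before imputation stops duplicates from skewing the median.
    seen, unique = set(), []
    for r in staged:
        if r["id"] not in seen:
            seen.add(r["id"])
            unique.append(r)
    # 3. Impute missing weights with the median of observed values and
    #    flag imputed rows so downstream users can tell them apart.
    fill = median(r["weight_kg"] for r in unique if r["weight_kg"] is not None)
    for r in unique:
        r["imputed"] = r["weight_kg"] is None
        if r["imputed"]:
            r["weight_kg"] = fill
    # 4. Validate: reject the batch if any weight is outside a plausible range.
    bad = [r["id"] for r in unique if not 0 < r["weight_kg"] < 500]
    if bad:
        raise ValueError(f"implausible weights for ids {bad}")
    return unique


cleaned = clean(RAW)
for row in cleaned:
    print(row)
```

Flagging imputed values, rather than silently overwriting them, preserves the information that the value was missing, which matters if missingness is concentrated in particular subgroups.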

The Importance of Ongoing Monitoring and Validation

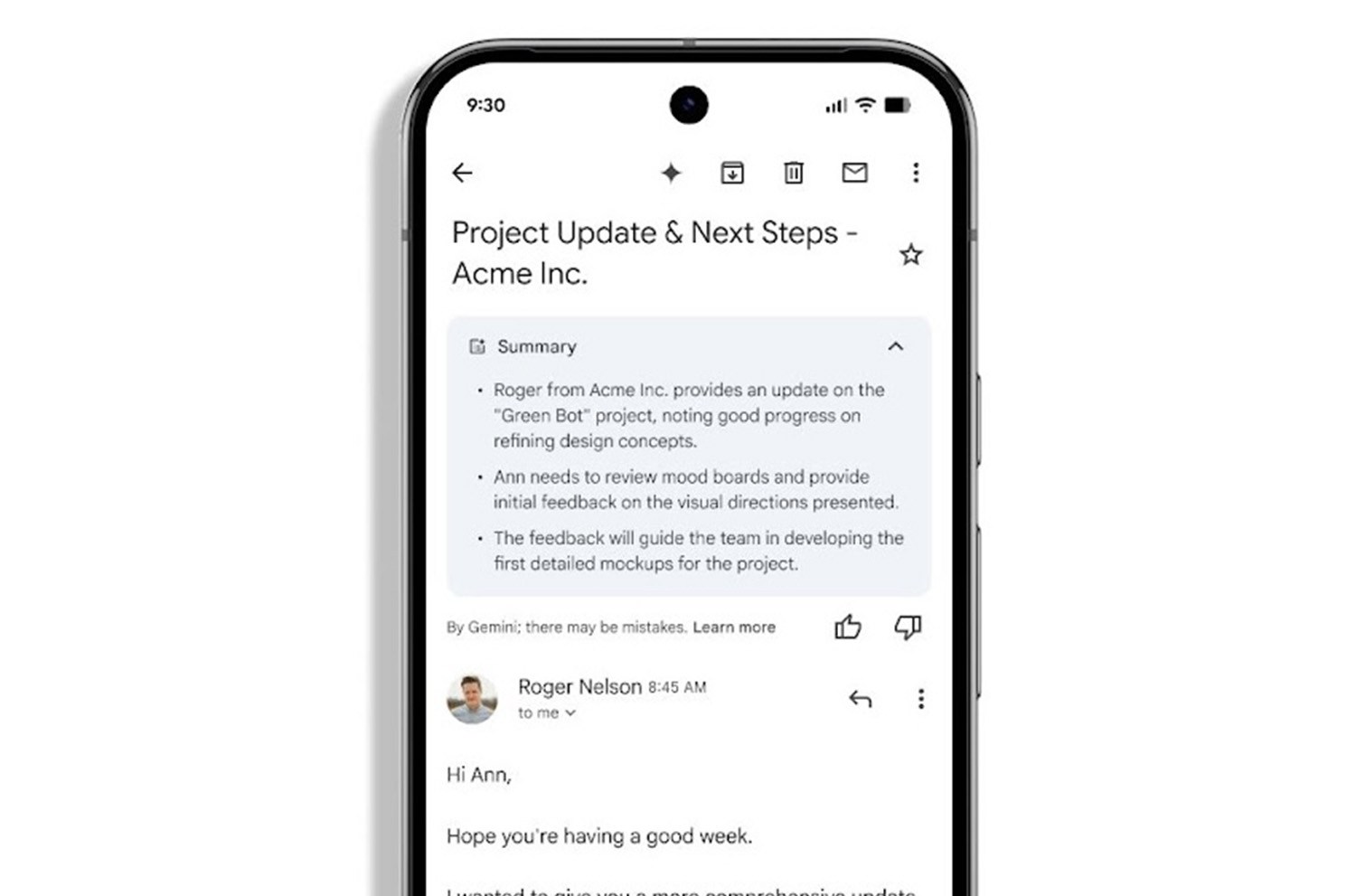

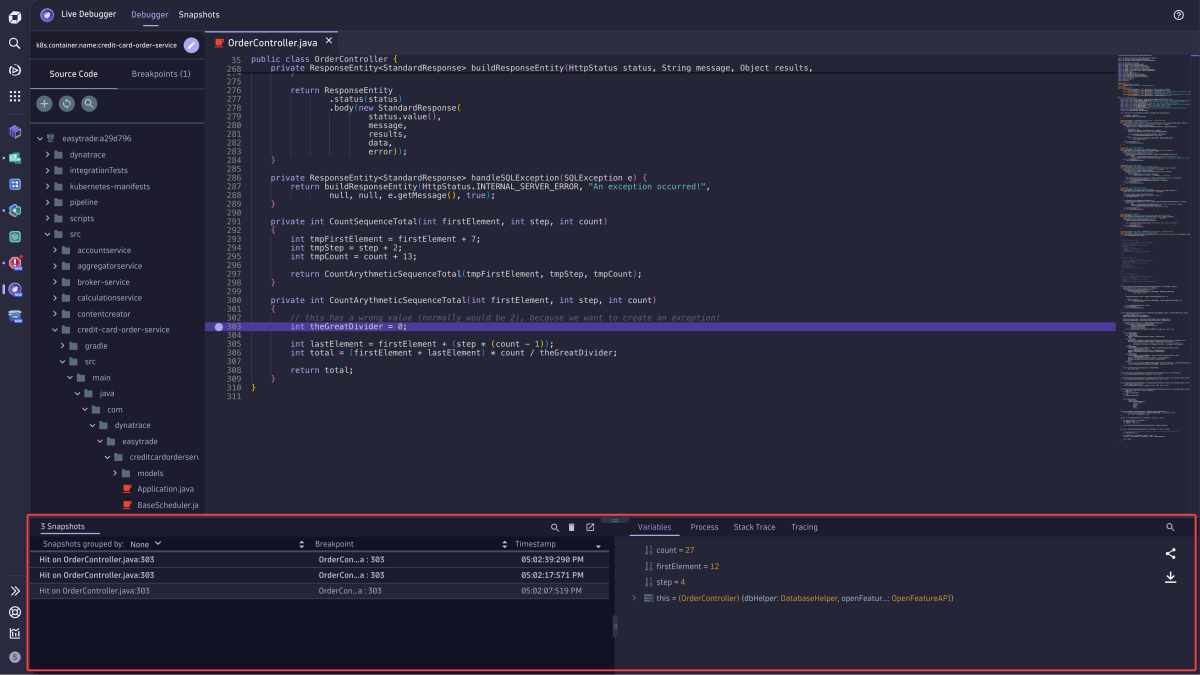

The data quality process does not conclude once the AI model has been trained. It is essential to continuously monitor the model’s performance and validate its outputs against reliable data sources.

As Stanley explains, testing the model’s outputs is crucial to ensure its responses align with expectations. This involves querying the model and comparing the generated answers back to reference data. This verification process helps confirm that the model is not, for example, mixing up fundamental information like names and addresses.

Melissa’s informatics division employs ontological reasoning, using formal semantic technologies, specifically for this purpose. They compare AI results against expected outcomes derived from real-world models represented in their curated reference datasets. This rigorous validation step helps catch errors and ensures the AI is producing reliable, contextually accurate results.
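A much simpler form of output verification, a plain field-by-field lookup rather than the ontological reasoning Melissa describes, can still catch the name/address mix-ups mentioned above. This sketch assumes the model’s answer has already been parsed into a dict; the reference records and `check_answer` are hypothetical.

```python
# Hypothetical reference data keyed by customer id; in practice this would be
# a curated, trusted dataset rather than an in-memory dict.
REFERENCE = {
    "c1": {"name": "Jane Doe", "address": "1 Example Street, Springfield"},
    "c2": {"name": "John Roe", "address": "22 Sample Road, Shelbyville"},
}


def norm(text):
    """Case-fold and collapse whitespace so trivial differences don't count."""
    return " ".join(text.casefold().split())


def check_answer(customer_id, answer):
    """Compare a model's structured answer with the reference record.

    Returns a list of problems; an empty list means the answer matched.
    """
    ref = REFERENCE.get(customer_id)
    if ref is None:
        return ["no reference record"]
    problems = []
    for field, expected in ref.items():
        got = answer.get(field)
        if got is None:
            problems.append(f"{field}: missing")
        elif norm(got) != norm(expected):
            # Flag values that belong to a different field (e.g. name vs address).
            swapped = [f for f, v in ref.items() if f != field and norm(v) == norm(got)]
            hint = f" (looks like {swapped[0]})" if swapped else ""
            problems.append(f"{field}: expected {expected!r}, got {got!r}{hint}")
    return problems


print(check_answer("c1", {"name": "jane  doe", "address": "1 Example Street, Springfield"}))
print(check_answer("c2", {"name": "22 Sample Road, Shelbyville", "address": "John Roe"}))
```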

Ongoing monitoring also involves tracking the quality of the new data the model encounters in production. Data distributions can shift over time (data drift), or new quality issues might emerge. Regularly assessing incoming data and the model’s performance ensures continued accuracy and relevance.
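One widely used drift measure is the Population Stability Index (PSI), which compares a feature’s distribution at training time with its distribution in production: $\text{PSI} = \sum_i (a_i - e_i)\ln(a_i / e_i)$, where $e_i$ and $a_i$ are the expected (training) and actual (production) shares of category $i$. A minimal sketch for a categorical feature follows; the channel data is hypothetical, and numeric features would need to be binned first. As a commonly cited rule of thumb, values below 0.1 suggest little shift and values above 0.25 a significant one, though thresholds should be tuned per use case.

```python
import math
from collections import Counter


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two samples of a categorical feature.

    `expected` is the training-time sample, `actual` the production sample.
    `eps` guards against log(0) when a category is absent from one sample.
    """
    categories = set(expected) | set(actual)
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for c in categories:
        e = max(e_counts[c] / len(expected), eps)
        a = max(a_counts[c] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical sales-channel feature: balanced in training, web-heavy in production.
train = ["web"] * 50 + ["store"] * 50
prod = ["web"] * 80 + ["store"] * 20
score = psi(train, prod)
print(f"PSI = {score:.3f}")
```

Each term is non-negative, since $(a_i - e_i)$ and $\ln(a_i/e_i)$ always share a sign, so identical distributions score exactly zero and any shift pushes the index up.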

The Role of Technology and Tools

Managing data quality, especially for the large datasets required by modern AI, is a complex task that necessitates appropriate technology and tools.

These tools typically fall into several categories:

- Data Profiling Tools: Analyze data structure, content, and quality rule adherence.

- Data Cleaning Tools: Automate the process of identifying and correcting errors, handling missing values, and standardizing data.

- Master Data Management (MDM) Systems: Create a single, trusted view of core business data by matching, merging, and managing data from disparate sources.

- Data Validation Tools: Implement and enforce data quality rules during data entry or processing.

- Data Integration Tools: Facilitate the consolidation and transformation of data from various sources while applying quality rules.

- Metadata Management Tools: Document and manage information about the data itself, providing essential context.
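The match-and-merge idea behind MDM systems can be illustrated in a few lines. This is a deliberately crude sketch under stated assumptions: the records are hypothetical, matching uses an exact normalized key, and the survivorship rule is "most recently updated non-null value wins." Commercial MDM tools typically use fuzzy or probabilistic matching and configurable survivorship rules.

```python
from datetime import date

# Hypothetical records for the same company held in three systems.
RECORDS = [
    {"source": "crm", "name": "Acme Corp.", "phone": None, "updated": date(2024, 6, 2)},
    {"source": "billing", "name": "ACME Corp", "phone": "555-0100", "updated": date(2023, 7, 9)},
    {"source": "support", "name": "acme corp", "phone": "555-0199", "updated": date(2024, 3, 1)},
]

LEGAL_SUFFIXES = {"corp", "inc", "ltd", "llc"}  # illustrative, not exhaustive


def match_key(name):
    """Crude match key: lowercase, drop punctuation and common legal suffixes."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(w for w in cleaned.split() if w not in LEGAL_SUFFIXES)


def golden_records(records, fields=("name", "phone")):
    """Group matching records, then keep the newest non-null value per field."""
    groups = {}
    for r in records:
        groups.setdefault(match_key(r["name"]), []).append(r)
    merged = {}
    for key, group in groups.items():
        newest_first = sorted(group, key=lambda r: r["updated"], reverse=True)
        golden = {
            field: next((r[field] for r in newest_first if r[field] is not None), None)
            for field in fields
        }
        golden["sources"] = sorted(r["source"] for r in group)
        merged[key] = golden
    return merged


print(golden_records(RECORDS))
```

Here the CRM record is newest, so its name survives, but its phone is empty, so the merge falls back to the next-newest source that has one.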

Leveraging these technologies can significantly streamline the data quality process, making it more scalable, efficient, and reliable. However, technology alone is not a silver bullet; it must be combined with clear processes, governance policies, and human expertise.

Conclusion

In the pursuit of transformative AI capabilities, organizations must recognize that the quality of their data is not a secondary concern but a primary determinant of success. The principle of “garbage in, garbage out” starkly highlights the risks associated with training AI models on flawed data. Investing in data quality—through rigorous profiling, cleaning, validation, enrichment, and ongoing monitoring—is not merely a technical task but a strategic imperative.

By prioritizing data accuracy, completeness, consistency, timeliness, validity, and richness, organizations can build AI models that are not only powerful but also reliable, fair, and trustworthy. This commitment to data quality lays the essential groundwork for unlocking the true potential of artificial intelligence and deriving meaningful value from data investments. Without it, the promise of AI remains just that – a promise unfulfilled, undermined by the very data intended to power it.
