The AI Productivity Paradox in Software Engineering: Balancing Efficiency and Human Skill Retention

Generative artificial intelligence is rapidly changing the landscape of software development. Its promise of accelerating delivery cycles and reducing operational costs through capabilities like code generation and test automation has captured significant attention across organizations. However, the swift integration of these powerful tools introduces unforeseen complexities. Increasingly, reports indicate that while productivity might see improvements at the level of individual tasks, overall system performance can, in fact, decline.

This exploration synthesizes insights from cognitive science, software engineering practice, and organizational governance frameworks. We examine the tangible impact of AI tools on the quality of software delivery and investigate how the skills and expertise of human engineers are evolving. Our central argument is that the sustained, long-term value of AI goes beyond mere automation. It depends on thoughtful, responsible integration, a deliberate effort to preserve essential cognitive skills within the workforce, and a systemic perspective that guards against the paradox in which immediate efficiency gains produce detrimental long-term consequences.

The Core of the Productivity Paradox

AI tools are fundamentally reshaping how software development is approached, and they are doing so with astonishing velocity. Their inherent capability to automate tasks that are often repetitive or routine – such as scaffolding initial code structures, generating comprehensive test cases, or drafting technical documentation – presents a compelling vision of seamless efficiency and substantial cost savings. Yet, the apparent benefits observable on the surface can often obscure more profound, structural challenges lying beneath.

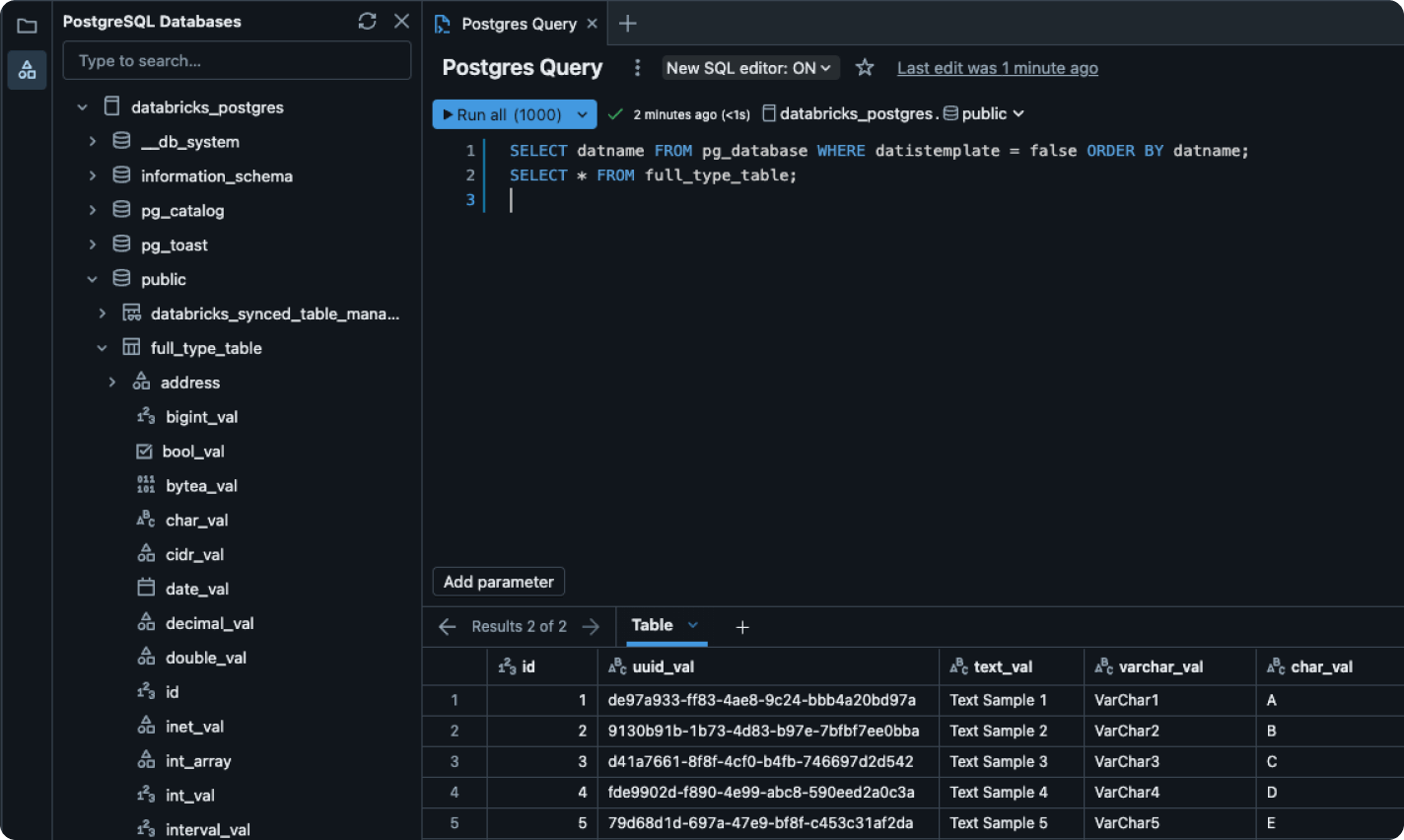

Recent data illustrates this paradox. According to the 2024 DORA report, a 25% increase in AI adoption was associated with an estimated 1.5% reduction in delivery throughput and a 7.2% reduction in delivery stability. These findings challenge the widespread assumption that integrating AI will universally accelerate productivity. Instead, the data suggests that while localized improvements may occur within specific tasks or functions, these gains can push problems downstream in the workflow, create new bottlenecks elsewhere in the process, or increase the amount of rework.

This apparent contradiction points to a critical underlying concern: organizations are optimizing for speed and efficiency at the level of the individual task without ensuring that these micro-optimizations serve the health and performance of the entire software delivery system. This article examines the paradox through AI’s impact on workflow efficiency, the cognitive processes of developers, software governance frameworks, and the evolution of human skills within the field.

Local Optimizations Versus Systemic Outcomes

The current dominant trend in the adoption of AI within software engineering places a strong emphasis on achieving micro-efficiencies. This manifests through features like automated code completion suggestions, the automatic generation of boilerplate documentation, and the creation of synthetic test data or test cases. These specific features are particularly attractive to junior developers, as they offer immediate, rapid feedback cycles and seemingly reduce the reliance on constant guidance from more senior or experienced colleagues. However, a significant consequence of these seemingly beneficial localized gains is the often-invisible introduction of technical debt.

Outputs generated by AI tools frequently possess excellent syntactic correctness – the code looks structurally right and compiles without basic errors. Yet, they may critically lack semantic rigor; the generated logic might not accurately reflect the intended behavior, may contain subtle logical flaws, or may be inefficient or fragile in certain contexts. Junior users, who typically lack the extensive experience and deep understanding required to effectively evaluate and identify these nuanced flaws, may inadvertently propagate brittle architectural patterns or incomplete logic into the codebase. These underlying issues inevitably surface later, often reaching senior engineers during critical stages like code reviews or architectural assessments. This redistribution of workload means that instead of truly streamlining the overall delivery pipeline, AI might inadvertently shift bottlenecks towards these essential review and validation phases, increasing the cognitive load on senior staff.

Within the domain of software testing, this illusion of accelerated progress is particularly prevalent. Organizations often operate under the assumption that AI tools can simply replace human testers by automatically generating a large volume of test artifacts. However, unless the manual creation of test cases has been empirically identified as a genuine bottleneck within the testing process through rigorous analysis, this direct substitution may yield minimal actual benefit. In some scenarios, it could even lead to significantly worse outcomes by masking fundamental quality issues beneath layers of machine-generated test cases that might be superficially correct but fail to cover critical edge cases or real-world user scenarios effectively.

The fundamental issue at play is a significant mismatch between optimizing for local gains and ensuring robust system-wide performance. Isolated improvements in specific tasks often fail to translate into meaningful increases in overall team throughput or enhancements in product stability. Instead, they can create a deceptive appearance of rapid progress while simultaneously escalating the costs associated with necessary coordination, validation, and debugging activities downstream in the development lifecycle.
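The mismatch can be made concrete with a toy capacity model. The sketch below assumes a strictly serial pipeline whose sustained throughput is capped by its slowest stage; the stage names and weekly capacities are invented for illustration and are not taken from any report.

```python
# Toy model: a serial delivery pipeline can sustain no more work per week
# than its slowest stage. All capacities below are hypothetical.


def pipeline_throughput(capacities: dict[str, float]) -> float:
    """Sustained throughput of a serial pipeline (items per week)."""
    return min(capacities.values())


def bottleneck(capacities: dict[str, float]) -> str:
    """Name of the stage with the lowest capacity."""
    return min(capacities, key=capacities.get)


before = {"coding": 20.0, "review": 12.0, "testing": 15.0, "deploy": 30.0}

# Assumption: AI assistance doubles coding capacity, but the extra vetting
# effort lowers review capacity from 12 to 10 items per week.
after = {**before, "coding": 40.0, "review": 10.0}

print(bottleneck(before), pipeline_throughput(before))  # review 12.0
print(bottleneck(after), pipeline_throughput(after))    # review 10.0
```

Under these assumed numbers, doubling the speed of the coding stage leaves the team delivering less, because the gain lands on a stage that was never the constraint.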

Shifts in Cognition: From Foundational Principles to Prompt-Driven Logic

Artificial intelligence is far more than just another tool in the software engineer’s toolkit; it represents a profound cognitive transformation in the fundamental ways engineers approach and interact with complex technical problems. Traditional software development methodologies heavily involve bottom-up reasoning. Engineers typically construct software line by line, meticulously writing, debugging, and refining code through an iterative process of building from foundational elements. With the advent of generative AI, engineers are now increasingly engaging in a mode of top-down orchestration. This involves expressing their high-level intent through abstract prompts and then attempting to validate the often opaque or non-deterministic outputs generated by the AI.

This entirely new mode of interaction introduces three primary challenges that developers must navigate:

- Prompt Ambiguity: The process of communicating intent to an AI model through natural language prompts is inherently susceptible to ambiguity. Even small misinterpretations of the developer’s intended meaning by the AI can result in the generation of incorrect, suboptimal, or potentially dangerous code behavior. Crafting prompts that are both precise and comprehensive enough to reliably elicit the desired output is a skill that requires practice and understanding.

- Non-Determinism: Unlike traditional, rule-based systems or human-written code which typically produce predictable results given the same inputs, generative AI models can often yield varied outputs even when presented with the identical prompt multiple times. This inherent non-determinism significantly complicates the crucial processes of validation, debugging, and ensuring the reproducibility of results – core tenets of reliable software engineering.

- Opaque Reasoning: AI models, particularly complex large language models, operate as “black boxes.” Engineers are often unable to directly inspect or trace the internal steps and reasoning processes that led the AI tool to produce a specific piece of code or result. This lack of transparency makes it difficult to establish a strong sense of trust in the generated output and complicates efforts to understand why something might be incorrect or how to effectively fix it without resorting to simply regenerating the output.
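One way to make the non-determinism challenge visible is to call a generator several times with the same prompt and count how many distinct outputs come back. The sketch below is illustrative only: `fake_generate` is a hypothetical stand-in that picks among canned variants, not a real model API, and `distinct_outputs` is a helper name introduced here.

```python
import hashlib
import random
from collections import Counter
from typing import Callable


def fake_generate(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative model: returns one of several variants."""
    variants = [
        "def add(a, b):\n    return a + b",
        "def add(a, b):\n    return b + a",
        "def add(x, y):\n    return x + y",
    ]
    return rng.choice(variants)


def distinct_outputs(generate: Callable[[str], str], prompt: str, runs: int) -> Counter:
    """Call the generator `runs` times and count outputs by a short content hash."""
    digests: Counter = Counter()
    for _ in range(runs):
        text = generate(prompt)
        digests[hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]] += 1
    return digests


rng = random.Random(0)
counts = distinct_outputs(lambda p: fake_generate(p, rng), "write an add function", runs=20)
print(f"{len(counts)} distinct outputs across {sum(counts.values())} runs")
```

A deterministic generator would always report a single distinct output. Anything above one means the same prompt cannot be relied on to reproduce the same artifact, so the generated artifact itself, not the prompt, is what needs to be stored and reviewed.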

Junior developers, in particular, find themselves placed into a challenging new role focused on evaluation and validation. They are tasked with assessing the quality and correctness of AI-generated code without necessarily possessing the deep domain knowledge or foundational understanding required to effectively reverse-engineer or fundamentally comprehend outputs they did not personally author line by line. Senior engineers, while generally more capable of performing rigorous validation, sometimes find that the effort involved in understanding, vetting, and potentially correcting AI-generated code outweighs the effort required to simply write the secure, deterministic code from scratch using their established expertise.

However, this shift should not be viewed as a definitive endpoint or a “death knell” for traditional engineering thinking and skill. Rather, it represents a significant relocation and transformation of cognitive effort. AI tools are shifting the primary focus of the developer’s task away from the direct, manual implementation of code and towards higher-level activities such as critical specification, the skilled orchestration of AI capabilities, and rigorous post-hoc validation of the generated results. This evolving landscape necessitates the development and mastery of a new set of meta-skills, which include:

- Expertise in designing, refining, and iterating on effective prompts to guide AI behavior.

- A heightened ability to recognize and mitigate potential narrative bias or subtle inaccuracies inherent in AI-generated outputs.

- A strong, holistic system-level awareness, including a deep understanding of dependencies, interactions, and potential downstream impacts.

Furthermore, the traditionally siloed expertise often found within individual engineering roles is beginning to undergo a notable evolution. Developers are increasingly being required to operate fluidly across different stages of the software development lifecycle, integrating responsibilities that historically belonged distinctly to design, testing, deployment, and operations. This necessitates the development of holistic system fluency – the ability to understand and interact effectively with the entire software delivery ecosystem. In this way, AI tools may be acting as a catalyst, accelerating the convergence of narrowly defined technical roles into broader, more integrated, and inherently multidisciplinary ones.

Governance, Traceability, and the Unseen Risk Vacuum

As artificial intelligence becomes an increasingly pervasive and integral component of the Software Development Life Cycle (SDLC), its widespread adoption introduces substantial new risks and challenges concerning governance, accountability, and the fundamental ability to trace the origin and evolution of code. Consider a scenario where a function generated with the assistance of an AI model inadvertently introduces a critical security vulnerability into a production system. Who or what ultimately bears the responsibility for this flaw? Is it the individual developer who formulated the prompt that led to the code generation? Is the liability placed upon the vendor of the specific AI model used? Or does the responsibility fall to the organization that deployed the code into their production environment without establishing sufficient audit trails or validation processes?

In the current reality, most development teams and organizations lack clear established protocols and possess insufficient clarity regarding these critical questions. A significant volume of AI-generated content frequently enters codebases without adequate tagging, clear attribution, or integration with version tracking systems in a way that distinguishes it from human-written code. This lack of differentiation creates pervasive ambiguity, severely hindering essential activities such as ongoing maintenance efforts, comprehensive security audits, ensuring compliance with legal and regulatory requirements, and accurately managing intellectual property rights.

Compounding these governance risks, engineers often copy and paste fragments of proprietary business logic or sensitive architectural patterns into third-party AI tools whose data usage policies can be unclear. In doing so, they may unintentionally expose sensitive corporate information, unique algorithmic approaches, or even customer-specific data structures. This practice represents a significant, often underestimated, security and intellectual property risk.
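A partial mitigation is to scrub obvious secrets from text before it leaves the organization. The following is a minimal sketch under stated assumptions: the two regular expressions are hypothetical examples, they catch only simple credential assignments and email addresses, and they do nothing to protect proprietary logic or architecture.

```python
import re

# Hypothetical patterns for illustration; a real deployment would need
# patterns tuned to its own credentials and identifiers.
REDACTION_PATTERNS = [
    # key = "value" style assignments for common credential names
    (
        re.compile(r"(?i)(api[_-]?key|secret|token|password)\s*=\s*['\"][^'\"]+['\"]"),
        r"\1 = '<REDACTED>'",
    ),
    # email addresses
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
]


def redact(text: str) -> str:
    """Apply each redaction pattern in order and return the scrubbed text."""
    for pattern, replacement in REDACTION_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


snippet = 'API_KEY = "sk-live-123"\nowner = "jane.doe@example.com"'
print(redact(snippet))
```

Pattern-based scrubbing is a narrow safety net, not a policy. It complements clear rules about which tools may receive which material; it does not replace them.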

Fortunately, industry bodies and standards organizations are beginning to recognize and actively address these critical gaps in AI governance within the SDLC. Frameworks and standards such as ISO/IEC 22989 (covering Artificial Intelligence Concepts and Terminology) and ISO/IEC 42001 (focused on AI Management Systems), alongside the NIST AI Risk Management Framework from the National Institute of Standards and Technology, are increasingly advocating for the formal establishment of specific roles dedicated to managing AI risk and integration. These proposed roles include positions such as AI Evaluator, AI Auditor, and Human-in-the-Loop Operator. Establishing these roles is considered crucial for several key reasons:

- To establish clear and robust traceability for all AI-generated code, data, and artifacts integrated into the software system.

- To implement systematic processes for validating the overall system behavior and rigorously assessing the quality and reliability of AI-generated outputs.

- To ensure consistent adherence to internal organizational policies, external industry standards, and relevant regulatory compliance requirements related to AI use.

Until such comprehensive governance frameworks and dedicated roles become standard operational practice across the industry, the integration of AI will regrettably remain not only a source of potential innovation and efficiency but also a significant and largely unmanaged source of systemic risk within the software development and delivery pipeline.
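As an illustration of what traceability could look like in practice, the sketch below builds a small provenance record for one AI-assisted change. The record fields, the `make_record` helper, and the sample values are all hypothetical; none of the frameworks named above prescribes this schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical audit record for a single AI-assisted change."""

    file_path: str
    content_sha256: str  # hash of the generated content as committed
    prompt_sha256: str   # hash of the prompt, so the prompt text need not be stored
    model_name: str      # whatever identifier the team uses for the tool
    author: str          # developer who requested the output
    reviewer: str        # human who approved it


def _digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_record(file_path: str, content: str, prompt: str,
                model_name: str, author: str, reviewer: str) -> ProvenanceRecord:
    """Build a record that links committed content to its prompt and reviewers."""
    return ProvenanceRecord(
        file_path, _digest(content), _digest(prompt), model_name, author, reviewer
    )


record = make_record(
    file_path="billing/tax.py",
    content="def vat(amount):\n    return amount * 0.2\n",
    prompt="Write a VAT helper",
    model_name="example-model",
    author="alice",
    reviewer="bob",
)
print(json.dumps(asdict(record), indent=2))
```

Storing hashes rather than raw prompts is one possible design choice: it lets an auditor confirm that a given prompt produced a given artifact without keeping potentially sensitive prompt text in the audit log.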

Vibe Coding and the Seduction of Playful Productivity

An interesting and somewhat informal practice emerging within the community of AI-assisted development is termed “vibe coding.” This phrase describes a more playful, experimental, and exploratory mode of utilizing AI tools during the software creation process. This approach often significantly lowers the initial barrier to entry for experimentation, allowing developers to iterate more freely and rapidly on ideas and code snippets. Engaging in vibe coding can often evoke a strong sense of creative flow and novelty, making the development process feel more intuitive and less constrained.

However, despite its appeal, vibe coding can be seductive in ways that are subtly dangerous. Because the code generated by AI models is typically syntactically correct and is often presented in polished, confident-sounding language (a characteristic derived from the AI’s training data), it can create a powerful illusion of completeness, accuracy, and correctness. This phenomenon is closely related to narrative coherence bias: the human tendency to accept information as valid simply because it is presented in a well-structured, coherent, and persuasive narrative, regardless of its underlying factual accuracy or technical validity.

In the context of vibe coding, this means developers might be prone to accepting and utilizing code or other artifacts generated by AI that “look right” on the surface and fit neatly into a plausible technical narrative, but which have not been subjected to adequate critical evaluation, thorough testing, or rigorous vetting. The informal and often rapid nature of vibe coding can easily mask significant underlying technical liabilities, particularly when the generated outputs bypass standard review processes or lack sufficient explainability to be fully understood and validated by the human developer.

The appropriate response to this phenomenon is not to discourage the valuable process of experimentation and creative exploration that AI enables. Instead, the key lies in finding a careful balance between fostering creativity and embedding robust practices of critical evaluation. Developers must receive training and guidance specifically designed to help them recognize potential patterns in AI behavior that might indicate issues, cultivate a healthy skepticism that prompts them to question the plausibility and correctness of AI outputs, and establish reliable internal quality gates for vetting generated content – even when operating in highly exploratory or rapid prototyping contexts. Cultivating this balance is essential for harnessing the creative potential of AI while mitigating its inherent risks.

Charting a Course for Sustainable AI Integration in the SDLC

The ultimate long-term success and value of integrating artificial intelligence into software development processes will not be primarily measured by how quickly AI tools can churn out code snippets or generate documentation artifacts. Instead, its true measure will be how thoughtfully and strategically these powerful capabilities are integrated into existing organizational workflows, team dynamics, and governance structures. Achieving sustainable adoption requires a comprehensive, holistic framework that addresses multiple interconnected dimensions of the software development lifecycle. Key elements of such a framework should include:

- Empirical Bottleneck Assessment: Before rushing to automate specific tasks with AI, organizations must conduct rigorous, empirical analysis of their existing processes. This involves identifying precisely where true delays, inefficiencies, or constraints genuinely exist within their workflows through data-driven assessment, rather than relying on assumptions or perceived benefits. AI automation should be targeted strategically at these verified bottlenecks to yield meaningful improvements.

- Operator Qualification and Training: Individuals utilizing AI tools for software development must be properly qualified and trained. This training should go beyond basic tool usage instructions. It must encompass a thorough understanding of the technology’s inherent limitations, an awareness of potential biases that can manifest in AI outputs, and the development of critical skills in output validation, error detection, and effective prompt engineering techniques. Developers need to understand how AI works, what its potential pitfalls are, and how to interact with it effectively and safely.

- Embedded Governance and Traceability: Robust governance mechanisms must be seamlessly integrated into the development pipeline. This mandates that all AI-generated outputs, including code, test cases, and documentation, are systematically tagged, subjected to appropriate review processes (which may differ from human code reviews but are no less critical), and thoroughly documented. This embedded approach ensures clear traceability back to the source or prompt that generated the content, which is essential for facilitating maintenance, conducting security audits, ensuring regulatory compliance, and managing intellectual property.

- Focus on Meta-Skill Development: Organizations must prioritize investing in the development of advanced meta-skills among their engineering teams. Developers need to be trained not merely to use AI tools as passive recipients of generated content, but rather to work collaboratively with AI. This involves cultivating skills in critical thinking, skeptical evaluation of AI outputs, responsible application of AI capabilities, and the ability to effectively orchestrate complex workflows involving both human and AI contributions. The focus shifts from just coding proficiency to proficiency in leveraging advanced tools intelligently.
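The empirical bottleneck assessment in the first element above can start from data most teams already have: timestamps marking when a work item entered each stage. The sketch below is a minimal illustration with invented timestamps and hypothetical stage names; it computes the median time spent per stage and reports the slowest one.

```python
from datetime import datetime
from statistics import median

# Invented timestamps for three work items; field names are hypothetical.
ITEMS = [
    {"coding_start": "2024-03-01T09:00", "review_start": "2024-03-01T15:00",
     "test_start": "2024-03-04T10:00", "done": "2024-03-04T16:00"},
    {"coding_start": "2024-03-02T09:00", "review_start": "2024-03-02T13:00",
     "test_start": "2024-03-03T13:00", "done": "2024-03-03T17:00"},
    {"coding_start": "2024-03-05T09:00", "review_start": "2024-03-05T17:00",
     "test_start": "2024-03-07T09:00", "done": "2024-03-07T12:00"},
]

# Each stage is delimited by the timestamp where it starts and where it ends.
STAGES = [
    ("coding", "coding_start", "review_start"),
    ("review", "review_start", "test_start"),
    ("testing", "test_start", "done"),
]


def hours_between(start: str, end: str) -> float:
    """Elapsed hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def median_stage_hours(items: list[dict[str, str]]) -> dict[str, float]:
    """Median elapsed hours per stage across all items."""
    return {
        name: median(hours_between(item[start], item[end]) for item in items)
        for name, start, end in STAGES
    }


stage_hours = median_stage_hours(ITEMS)
slowest = max(stage_hours, key=stage_hours.get)
print(stage_hours)
print("slowest stage:", slowest)
```

With this invented data, the review stage dominates elapsed time, which would argue against spending automation effort on code generation first.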

Together, these practices shift the conversation surrounding AI in software development from an initial fascination with technological hype towards a more grounded focus on architectural integration and strategic alignment. The goal is no longer to deploy AI for the sake of having the latest tools, but to deploy it in a manner that is aligned with overall business objectives and contributes positively to the health and efficiency of the entire development ecosystem. The organizations that achieve the most significant and sustainable success will not necessarily be those that adopt AI the quickest, but those that invest the time and effort to adopt it in the most thoughtful, strategic, and well-governed manner.

Architecting the Future with Deliberation

The transformative power of artificial intelligence in software engineering is undeniable, yet it will not replace human intelligence or the critical role of human skill unless organizations inadvertently create the conditions for that outcome. If businesses and development teams neglect the cognitive, systemic, and governance dimensions of integrating AI into their workflows, they risk trading away long-term resilience, stability, and true innovation capacity for the illusion of short-term velocity gains.

However, the future need not be framed as a zero-sum competition between AI and human engineers. When AI is adopted and integrated with careful consideration and a strategic approach, it has the potential to elevate the practice of software engineering, moving it beyond manual, repetitive labor and towards a higher-level cognitive design discipline. By offloading tedious tasks, engineers can engage in more abstract problem-solving, perform more rigorous and sophisticated validation of systems, and innovate with greater confidence and creativity.

The most effective path forward lies in conscious adaptation and thoughtful strategy, rather than simply pursuing blind acceleration driven by technological enthusiasm. As the field of AI-assisted software development continues to mature and evolve, the true competitive advantage will accrue not to the organizations that merely adopt AI tools the fastest, but to those who demonstrate a deep understanding of AI’s capabilities and limitations, who master the art of orchestrating its effective and responsible use, and who diligently design their systems and processes to leverage AI’s strengths while carefully mitigating its inherent weaknesses.

| Metric | AI Adoption Increase | Correlated Impact on Delivery Metrics |
|---|---|---|
| Delivery Throughput | 25% | -1.5% (Decrease) |
| Delivery Stability | 25% | -7.2% (Decrease) |

Key elements for sustainable AI integration include:

- Empirical Bottleneck Assessment

- Operator Qualification and Training

- Embedded Governance and Traceability

- Focus on Meta-Skill Development

New meta-skills required for engineers in the AI era include:

- Prompt design and refinement

- Recognition and mitigation of narrative bias in AI outputs

- System-level awareness and understanding of dependencies

Industry frameworks advocating for AI governance roles include:

- ISO/IEC 22989

- ISO/IEC 42001

- NIST AI Risk Management Framework

Suggested new roles within software engineering teams:

- AI Evaluator

- AI Auditor

- Human-in-the-Loop Operator
