The Future of Creativity: Adobe Reimagines the Workflow with Firefly AI

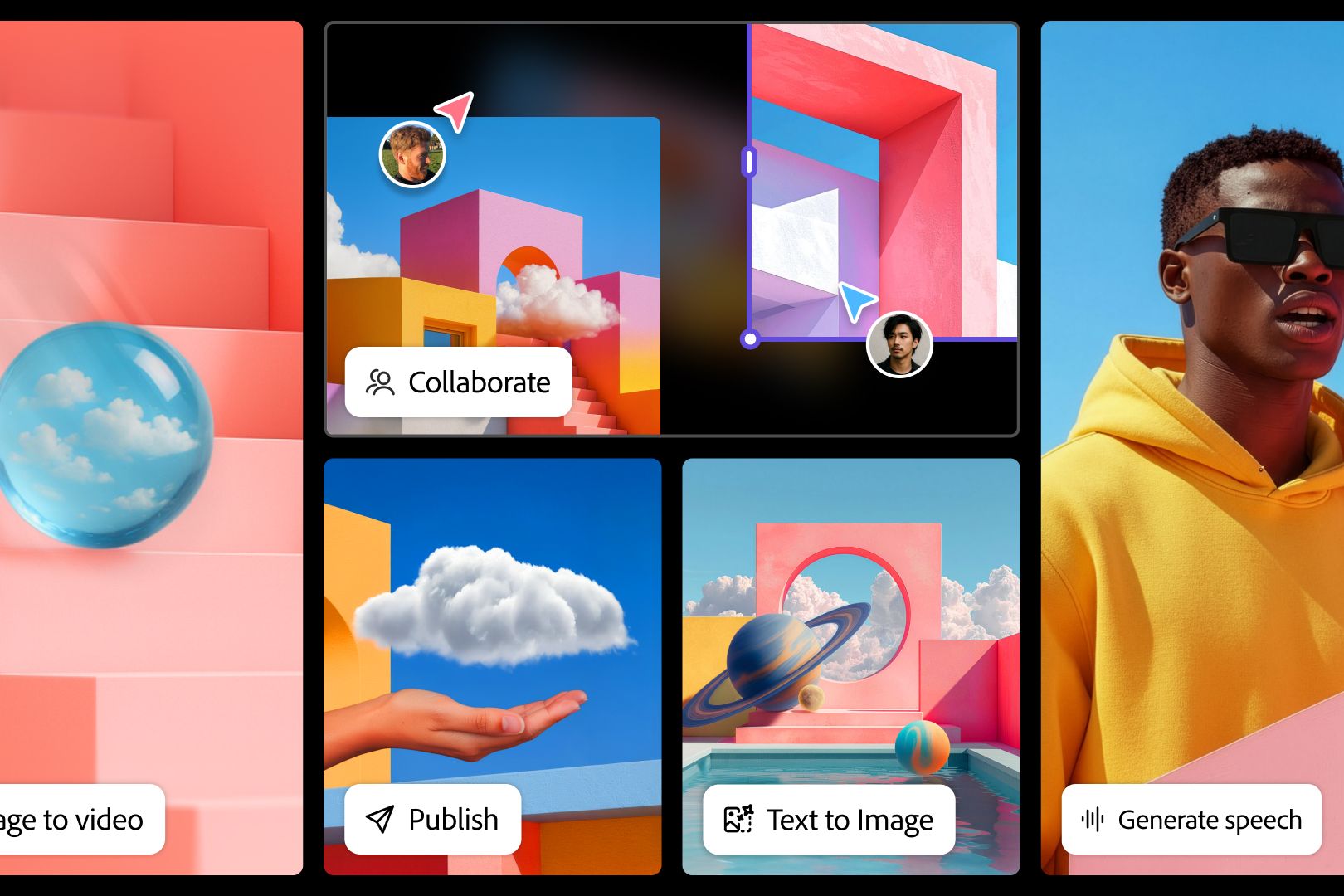

The world of digital creativity is in a state of constant, exhilarating transformation, driven by the relentless advancement of artificial intelligence. At the forefront of this revolution is Adobe, which just used its annual MAX conference to unveil a breathtaking suite of AI-powered updates that promise to redefine how artists, designers, and creators of all kinds bring their visions to life. Moving far beyond simple image generation, Adobe is weaving AI into the very fabric of the creative process, introducing groundbreaking tools for generating custom audio, developing personalized AI models, and leveraging intelligent assistants that act as a creative co-pilot.

These announcements signal a fundamental shift from AI as a novelty to AI as an indispensable, integrated partner in the creative workflow. From the new Firefly Image Model 5 that understands images in layers to the ability to generate entire soundtracks and nuanced speech with a simple prompt, Adobe is building a future where technology amplifies human ingenuity rather than replacing it. This deep dive explores every major announcement from the conference, unpacking what these new capabilities mean for the future of creativity in Photoshop, Express, and the entire Adobe ecosystem.

The Personalization Revolution: Custom Models in Firefly

For years, generative AI has been a powerful tool for creating novel images, but it has often struggled with a critical element for professional creators: consistency. Generating the same character, object, or artistic style across multiple images has been a significant challenge. Adobe is directly addressing this with one of its most impactful announcements: the rollout of custom models in Firefly for individual users.

Previously a feature reserved for enterprise clients, this capability allows any creative to train their own specialized AI model. The process is remarkably accessible. Adobe states that a creator needs as few as six to twelve images to train the model on a specific character, and only slightly more to teach it a unique tone or style. This unlocks a new level of creative control and consistency.

Imagine a comic book artist training a model on their protagonist to ensure perfect likeness in every panel, a brand manager creating a model that perfectly captures their company’s visual aesthetic for marketing materials, or a fine artist developing a model that generates images in their signature “tone.”

Critically, these custom models are not built from scratch. They are layered on top of Adobe’s own foundational Firefly model. This is a crucial distinction, as it means the training process leverages Adobe’s vast, ethically sourced dataset. The company has made a commitment to training its AI on proprietary data and Adobe Stock, ensuring that the generated content is commercially safe to use and free from the copyright entanglements that plague other models. This fusion of personalization with a secure foundation makes the feature both powerful and practical for professional use.

The rollout for custom models is slated for the end of the year, but eager creators can join a waiting list starting in November to get early access to this game-changing technology.

Firefly Image Model 5: Redefining AI-Powered Photo Editing

Alongside personalization, Adobe is pushing the boundaries of raw image quality and editing capability with the launch of Firefly Image Model 5. This new version of the core image-generation engine introduces a sophisticated feature that blurs the line between AI generation and professional photo compositing: layered image editing.

While the new model maintains the impressive 4-megapixel native resolution of its predecessor (roughly 2K, or 2560 x 1440 pixels), its true innovation lies in its contextual understanding of an image’s components.
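As a quick sanity check on the resolution figure above, the pixel count of a 2560 x 1440 frame can be worked out directly. This is only an illustrative calculation: the helper name `megapixels` is made up for this sketch and is not part of any Adobe tool.

```python
def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a width x height frame, in megapixels."""
    return width * height / 1_000_000

# 2560 x 1440 is the resolution quoted in the text above.
quoted = megapixels(2560, 1440)
print(f"2560 x 1440 = {quoted:.2f} MP")  # prints "2560 x 1440 = 3.69 MP"
```

In other words, "4 MP" is a rounded label: the quoted frame holds about 3.7 million pixels.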

Instead of seeing a flat collection of pixels, Image Model 5 identifies distinct elements within a scene. This allows for an unprecedented level of control. During a demonstration, Adobe showcased this power using an image of a bowl of ramen. The AI was able to:

- Identify and Isolate: It automatically recognized the chopsticks as a separate object from the bowl and noodles.

- Manipulate and Move: The user could then select the chopsticks and move them to a different part of the image, with the AI intelligently filling in the background where they used to be.

- Generate and Integrate: The user then prompted the AI to add a bowl of chili flakes to the scene. The model generated the new object and seamlessly integrated it into the image, complete with realistic lighting, shadows, and reflections.

This entire process was executed cleanly, without the visual artifacts or jagged edges that typically result from manual selection and compositing. This layered editing capability represents a monumental leap forward, transforming generative AI from a tool that simply creates images to one that can deconstruct and intelligently reconstruct existing ones. It promises to save creators countless hours of tedious masking and editing, allowing them to focus on the creative aspects of their work.

| Feature | Firefly Image Model 4 | Firefly Image Model 5 |
|---|---|---|
| Native Resolution | 4 MP (2K) | 4 MP (2K) |
| Prompt-Based Editing | Supported | Supported (at 2 MP) |
| Key Advancement | High-Quality Generation | Intelligent Layered Image Editing |
| Use Case | Creating final images from text | Deconstructing, editing, and compositing elements within existing images |

Firefly Image Model 5 is rolling out now, placing this powerful new editing paradigm directly into the hands of creatives.

Beyond Visuals: Firefly’s Expansion into Audio Generation

Perhaps the most surprising and exciting announcement from MAX was Firefly’s expansion into the auditory realm. Adobe is introducing two powerful new generative AI features, Generate Soundtrack and Generate Speech, designed to solve major pain points for video editors, podcasters, and multimedia creators.

Generate Soundtrack

Finding the right music for a video is a notoriously difficult and time-consuming process, often involving endless scrolling through stock music libraries. Generate Soundtrack aims to eliminate this friction entirely. The tool allows a user to upload their video, which Firefly then analyzes to understand its pacing, mood, and content. Based on this analysis, it suggests a descriptive prompt for a custom soundtrack.

From there, creators can refine the music by adjusting parameters like vibe, style, and purpose. Rather than searching for a pre-existing track that “sort of fits,” you can now generate a perfectly bespoke piece of music. For instance, a creator could prompt for a “tense, cinematic orchestral score for a high-speed chase scene” or an “upbeat, acoustic folk track for a travel montage.” This provides an infinite library of royalty-free, perfectly timed music, tailored to the specific needs of any project.

Generate Speech

For the first time, Adobe is bringing high-quality text-to-speech (TTS) capabilities into the Firefly ecosystem with Generate Speech. This feature leverages a powerful combination of Adobe’s own AI models and those from ElevenLabs, a leader in realistic voice generation. The result is a tool that goes far beyond robotic narration.

Key features of Generate Speech include:

- Multi-language Support: At launch, the tool will support 15 different languages, making it accessible for global creators.

- Emotional Nuance: Users can apply “emotion tags” to the text to control the delivery.

- Precise Inflection Control: The true innovation lies in the ability to apply different emotion tags to specific words or phrases within a single line. This allows for incredibly nuanced performances, with the AI changing its inflection and tone naturally throughout a sentence, just as a human voice actor would.

This tool is a game-changer for creating voiceovers for tutorials, generating placeholder dialogue for animations, producing audio for accessibility purposes, or even creating full podcast episodes. Both Generate Soundtrack and Generate Speech are rolling out to Firefly shortly.

To house these new capabilities, Adobe also announced a new Firefly video editor. This browser-based tool will feature a full, multi-track timeline and deep integration with all of Firefly’s generative features, creating a unified space where creators can combine captured video with AI-generated images, music, and speech. A waiting list is available for early access.

The Rise of the Creative Co-Pilot: AI in Photoshop and Express

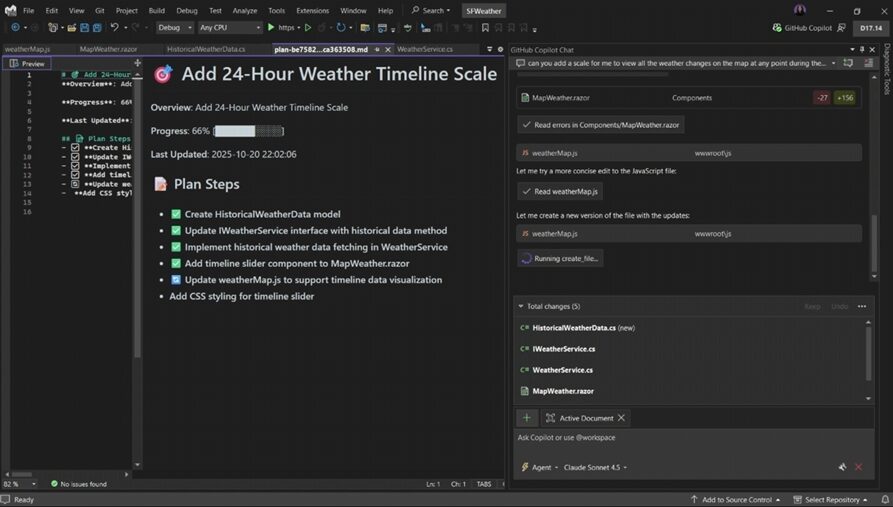

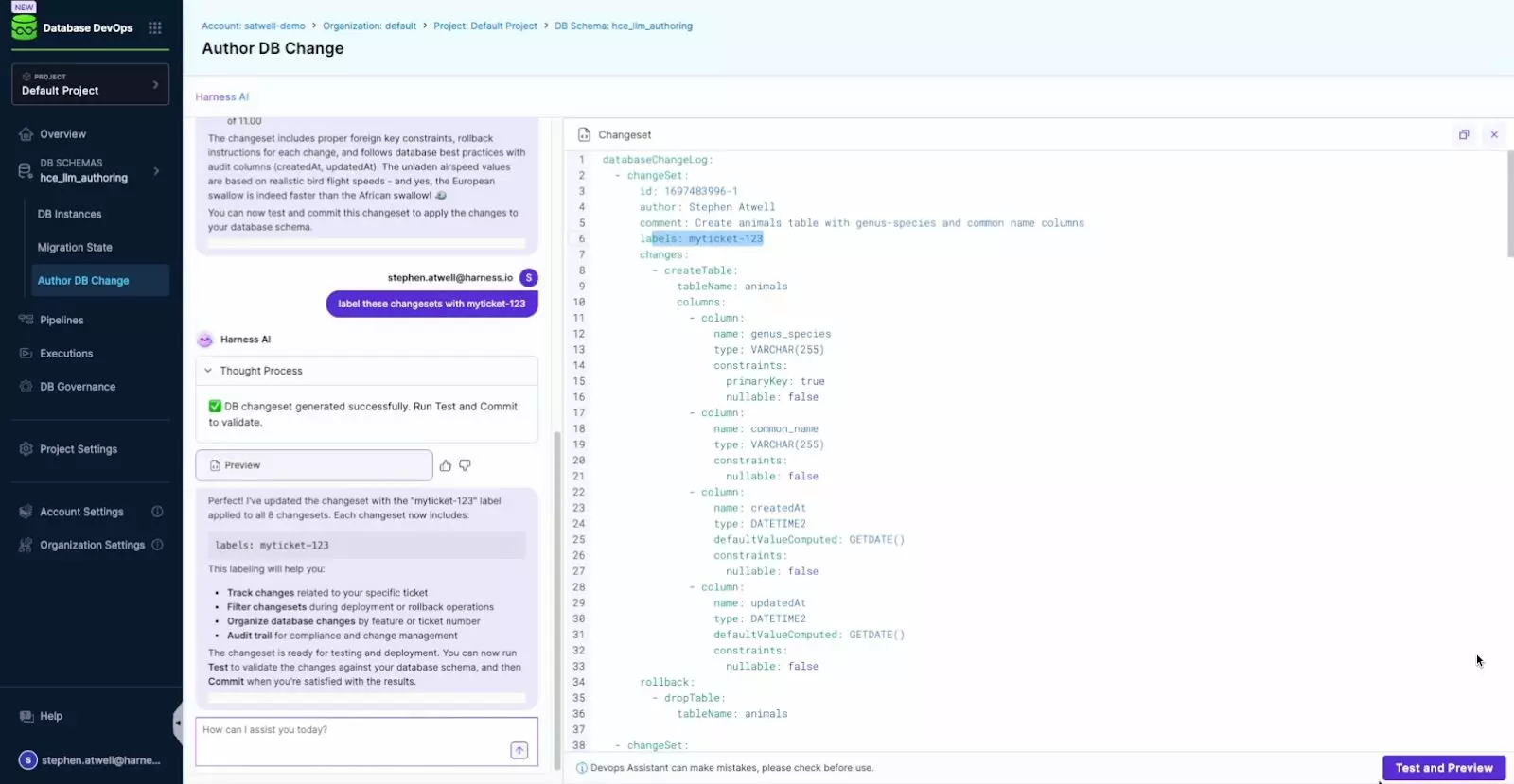

One of the most significant trends in AI is the development of “agentic AI”—assistants that don’t just execute commands but understand user intent and help accomplish complex tasks. Building on the success of its assistant in Acrobat, Adobe is now bringing this co-pilot capability to Photoshop and Express.

Adobe describes this new assistant as striking a balance between “tactile and agentic.” The goal isn’t to automate creativity but to streamline the technical process and serve as an interactive learning tool. Instead of searching through menus for the right tool, a user can simply ask the assistant what they want to accomplish. For example, a user could ask, “How do I make the sky in this photo more dramatic?” The assistant could then suggest using the Sky Replacement tool, guide the user to it, and offer different stylistic options, all while leaving the final creative decisions in the user’s hands.

This approach makes Adobe’s powerful but often complex applications more accessible to newcomers, while also speeding up the workflow for seasoned professionals. The AI assistant in Express is available to try now, and a waiting list is open for the Photoshop assistant.

A Glimpse into the Future: Project Moonlight and ChatGPT

Beyond the features rolling out today, Adobe also offered a tantalizing look at its long-term vision for a fully integrated, context-aware creative ecosystem.

Project Moonlight: The Universal Creative Context

Project Moonlight is an ambitious system designed to carry the context of your work across Adobe’s entire suite of applications. It aims to create a persistent AI memory of your unique creative style, brand guidelines, and project assets. By connecting your social accounts, for example, Project Moonlight could learn your specific tone and aesthetic to generate content that is authentically yours.

This means the color palette you defined in Illustrator, the character model you trained in Firefly, and the video editing style you used in Premiere Pro could all be instantly accessible and understood by the AI in After Effects or Photoshop. It’s a vision for a truly seamless workflow where the AI acts as an extension of the creator’s own mind, anticipating needs and maintaining consistency without requiring manual input. A private beta is launching now, with a public beta expected soon.

Adobe Meets ChatGPT: A New Creative Interface

In a move that could reshape how millions of people interact with creative tools, Adobe announced it is exploring a deep integration with OpenAI’s ChatGPT. The goal is to bring Adobe’s powerful and commercially safe generative models directly into the world’s most popular chatbot interface. This would allow users to generate images, video, and more using Firefly’s technology from within a ChatGPT conversation.

This collaboration, facilitated via Microsoft, signals a future where creative generation is not confined to specialized apps but is a fluid capability available wherever ideas are being discussed. While still in the very early stages, this potential partnership underscores a major shift toward democratized and distributed creative workflows.

A New Paradigm for Human-Machine Collaboration

The announcements from Adobe MAX 2025 are more than just a list of new features; they represent a cohesive and forward-thinking vision for the future of digital creation. By focusing on personalization with custom models, advancing technical editing with Image Model 5, expanding the creative canvas to include audio, and providing intelligent assistance with AI co-pilots, Adobe is building an ecosystem where AI serves as a powerful collaborator. In that future, technology removes tedious barriers and allows human creativity to flourish like never before.
