Why AI Agents Are Failing as Freelancers: A Reality Check on the Automation Hype

The narrative surrounding artificial intelligence has reached a fever pitch. We hear daily about the spectacular advances in AI, fueling widespread speculation that it will soon surpass human intelligence and render countless jobs obsolete. Tech leaders have made bold predictions, with some suggesting that tasks like coding could be 90 percent automated within months. This vision of autonomous AI agents taking over office work and creative roles has become a dominant theme in discussions about the future.

But what happens when these advanced AI agents are taken out of the lab and thrown into the real world of freelance work? Can they actually perform the complex, multi-step, and economically valuable chores that humans do every day? A groundbreaking new experiment sought to answer this very question, and the results provide a sobering reality check on the current state of AI capabilities. It turns out, even the most sophisticated AI agents are surprisingly terrible at being freelance workers.

The Grand Experiment: Putting AI to the Freelance Test

To move beyond hypothetical debates, researchers at the data annotation company Scale AI and the nonprofit Center for AI Safety (CAIS) developed a new benchmark called the Remote Labor Index. Its purpose is elegantly simple yet profoundly revealing: to measure the ability of frontier AI models to automate genuine, economically valuable work. This isn’t about solving riddles or passing standardized tests; it’s about executing real-world jobs that people get paid to do.

The methodology was designed to mirror the actual freelance ecosystem. The researchers collaborated with verified workers on the platform Upwork to generate a wide range of real freelance tasks. These weren’t simple, isolated commands but complete projects spanning several professional domains, including:

- Graphic Design

- Video Editing

- Game Development

- Administrative Chores (like data scraping and organization)

For each task, the AI agents were given the same materials a human freelancer would receive: a detailed description of the job, a directory of all the necessary files to perform the work, and a clear example of a finished project produced by a human expert. This setup provided a rigorous test of an agent’s ability to understand complex instructions, manage files, use various (simulated) tools, and deliver a finished product that meets professional standards.

The Shocking Results: A Near-Total Failure

After subjecting several of the world’s leading AI agents to this gauntlet of freelance tasks, the Remote Labor Index delivered a clear and decisive verdict. The performance of even the best AI was, to put it mildly, underwhelming. The experiment revealed a massive gap between the theoretical potential of AI and its practical application in the workforce.

The most capable AI agents could successfully complete less than 3 percent of the assigned freelance work. Out of a total possible $143,991 in earnings across all tasks, they earned just $1,810.
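The two figures above can be checked quickly. The short Python sketch below divides the reported earnings by the total available. It uses only the numbers quoted in this article, and the variable names are illustrative. The result, roughly 1.3 percent, is the share of dollar value earned. That is a different yardstick from the share of projects completed, so it need not match the "less than 3 percent" figure.

```python
# Figures reported for the Remote Labor Index, as quoted in this article.
total_value_usd = 143_991   # total possible earnings across all projects
earned_usd = 1_810          # earnings reported for the most capable agents

# Share of the total project value that the agents actually earned.
share = earned_usd / total_value_usd
print(f"Share of project value earned: {share:.2%}")
```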

This stark figure cuts through the hype and provides a quantifiable measure of AI’s current limitations. It demonstrates that while an AI might be able to write a single line of code or generate a stock image, it consistently fails when asked to complete a holistic project that requires planning, tool integration, and sustained effort.

The researchers evaluated several prominent AI tools, establishing a hierarchy of capability based on their performance in these real-world scenarios.

| AI Agent | Developer | Performance Ranking |
|---|---|---|
| Manus | Manus (Chinese Startup) | 1 (Most Capable) |
| Grok | xAI | 2 |
| Claude | Anthropic | 3 |
| ChatGPT | OpenAI | 4 |
| Gemini | Google | 5 |

Dan Hendrycks, the director of CAIS, hopes these findings will ground the conversation about AI in reality. “I should hope this gives much more accurate impressions as to what’s going on with AI capabilities,” he stated. He also cautioned against assuming that the recent rapid pace of AI improvement will inevitably continue at the same rate, a crucial point often lost in the excitement over new model releases. This isn’t the first time technology has inspired misplaced predictions about job displacement. For years, there were warnings about the imminent replacement of radiologists by AI algorithms, a future that has yet to materialize.

Why Do Advanced AI Agents Stumble on Real-World Work?

The results of the Remote Labor Index raise a critical question: if these AI models are so good at coding, math, and logical reasoning, why do they fail so spectacularly at tasks a junior freelancer could handle? According to Hendrycks and other experts, the reasons lie in the fundamental differences between isolated intelligence and applied competence.

The Tool-Use Problem

A typical freelance project requires the use of multiple software tools. A graphic designer might start with a brief in a Word document, create mockups in Figma, refine assets in Adobe Photoshop, and finally deliver the files via a cloud storage service. Humans navigate this digital toolkit with ease, but for AI agents it is a major challenge: they struggle to operate and integrate different applications, manage file paths, and maintain context across disparate software environments. This inability to use a diverse set of tools is a primary barrier to automation.

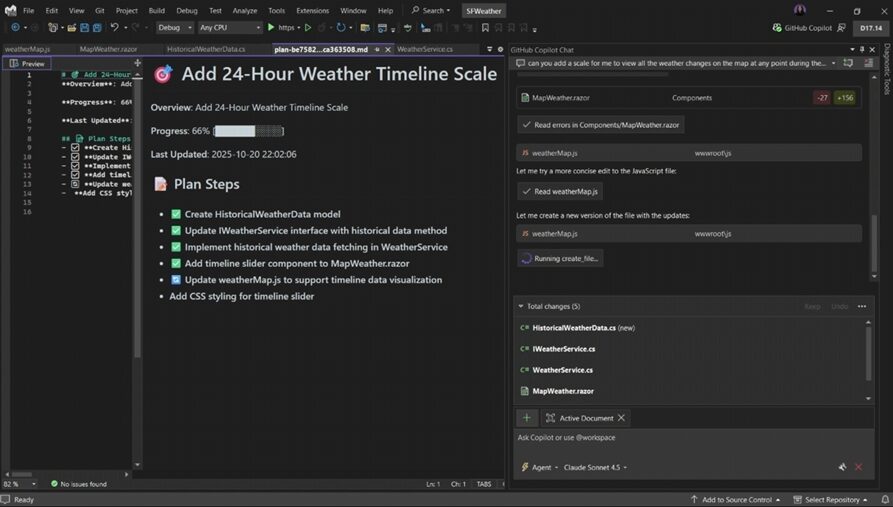

The Challenge of Complex, Multi-Step Projects

AI models excel at short, discrete tasks. Ask one to write an email, and it will do so capably. Ask it to run an entire email marketing campaign—which involves strategy, list segmentation, A/B testing, design, copywriting, and performance analysis—and it will fall apart. Real work is a chain of interconnected steps, where the output of one step becomes the input for the next. The agents in the experiment often failed because they couldn’t formulate or stick to a long-term plan, getting lost after completing just one or two stages of a larger project.
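Why do long chains break down so easily? One simple way to see it is a toy model that is not drawn from the study: assume each step of a project succeeds independently with the same probability p, so an n-step project succeeds end to end with probability p^n. The Python sketch below uses a hypothetical helper, `chain_success_rate`, and an illustrative 90 percent per-step rate.

```python
def chain_success_rate(step_success: float, num_steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming each step
    succeeds independently with the same probability (a toy assumption)."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be a probability in [0, 1]")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


# Hypothetical per-step reliability, chosen only for illustration.
for steps in (1, 5, 10, 20):
    rate = chain_success_rate(0.9, steps)
    print(f"{steps:>2} steps at 90% each -> {rate:.1%} end-to-end")
```

Under these assumptions, even a 90 percent per-step success rate leaves a ten-step project with only about a 35 percent chance of finishing cleanly. Real projects are messier, since errors can be caught and fixed or can compound, so the model only shows why reliability on single steps does not guarantee reliability on whole projects.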

The Memory and Learning Gap

Perhaps the most significant limitation is the absence of true learning and memory. As Hendrycks points out, “They don’t have long-term memory storage and can’t do continual learning from experiences. They can’t pick up skills on the job like humans.” A human freelancer learns from every project, incorporating client feedback and adapting their workflow. If a client dislikes a particular color scheme, the freelancer remembers that preference for future projects. Today’s AI agents effectively start from scratch with every new task, unable to build upon past experiences or learn from their mistakes in a persistent way.

A Tale of Two Benchmarks: Measuring What Matters

The findings of the Remote Labor Index offer a sharp counterpoint to other measures of AI progress, most notably a benchmark from OpenAI called GDPval. According to GDPval, frontier models like GPT-5 are approaching human-level abilities on a set of 220 tasks drawn from a variety of office jobs.

So, why the dramatic difference in conclusions? The discrepancy likely comes down to what is being measured. GDPval appears to focus more on discrete, cognitive skills—the ability to reason through a problem or generate a specific piece of text or code in isolation. The Remote Labor Index, by contrast, evaluates the entire workflow. It tests an agent’s ability to not only “think” about the task but to actually execute it from start to finish using a realistic set of tools and constraints.

This highlights the crucial gap between cognitive aptitude and practical application. An AI may be able to correctly answer a question about video editing principles, but that is a world away from actually opening a project file, trimming clips, color grading footage, and exporting a final video in the correct format. As Bing Liu, director of research at Scale AI, noted, “We have debated AI and jobs for years, but most of it has been hypothetical or theoretical.” The Remote Labor Index attempts to anchor this debate in tangible, real-world performance.

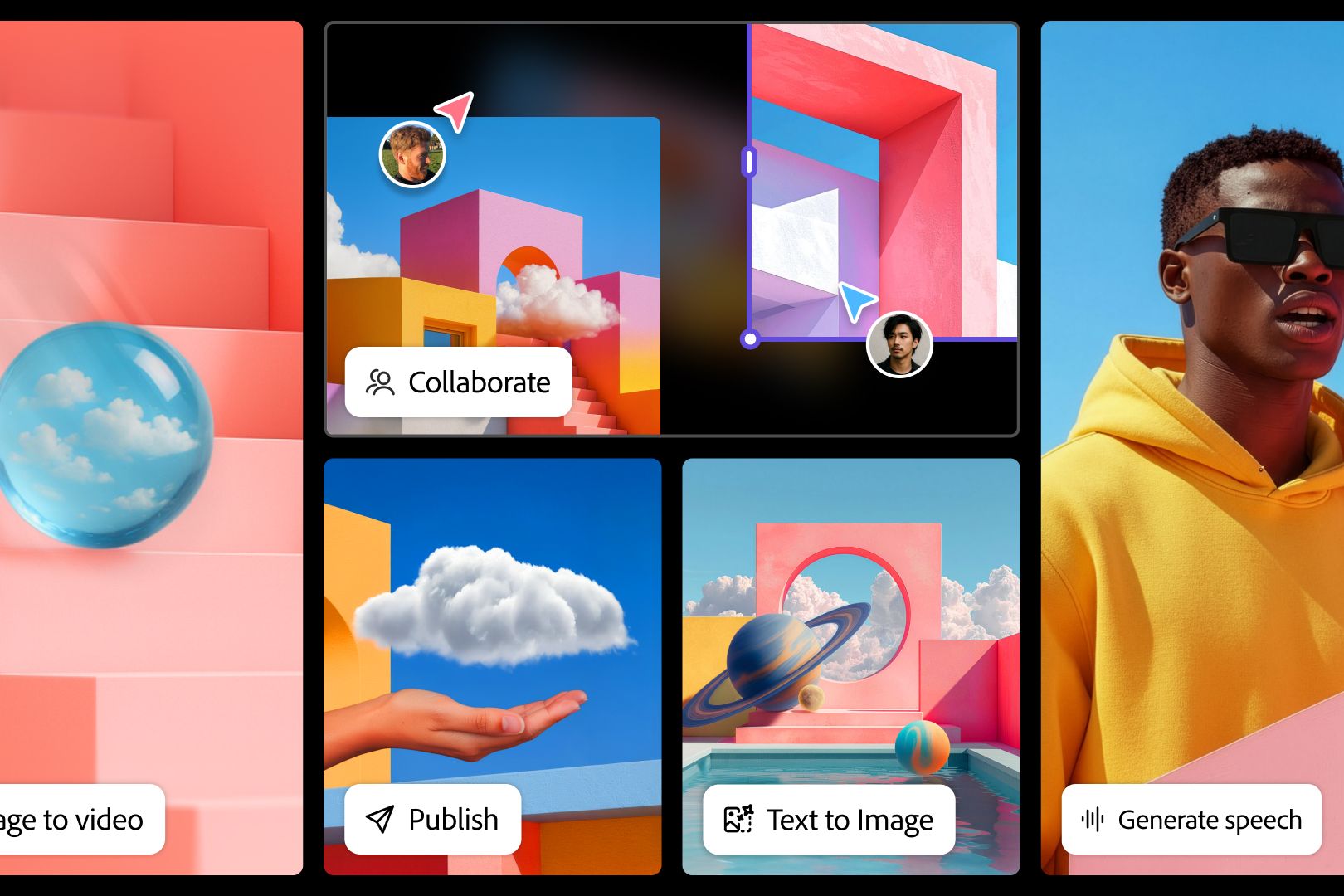

The Human-in-the-Loop Reality: AI as a Tool, Not a Replacement

It is important to acknowledge the limitations of this benchmark. The experiment was designed to test for full automation—the ability of an AI agent to perform a job with no human intervention. While this remains a distant goal, it doesn’t capture the more immediate and profound impact AI is having on the workforce.

In reality, the most effective use of AI today is not as an autonomous replacement for human workers but as a powerful tool that amplifies their productivity. A freelance writer might use an AI to brainstorm outlines and check for grammatical errors, while still handling the core creative work of drafting and storytelling. A developer might use an AI copilot to write boilerplate code, freeing them up to focus on complex architecture and problem-solving.

In this human-in-the-loop model, the AI handles the repetitive and formulaic parts of a task, while the human provides the strategic oversight, creative direction, quality control, and client communication—precisely the skills that the Remote Labor Index shows are still far beyond the reach of AI. The future may not belong to AI agents that replace freelancers, but rather to freelancers who master the art of leveraging AI as a force multiplier.

Navigating the Mixed Signals: Automation and Layoffs

Even as experiments show AI is not ready for autonomous work, the technology is frequently cited in corporate decision-making, most notably in workforce reductions. In late October 2025, Amazon announced it would cut 14,000 corporate jobs, a move it partly attributed to the rise of generative AI.

In a publicly shared memo, Beth Galetti, Amazon’s senior vice president of people experience and technology, wrote, “This generation of AI is the most transformative technology we’ve seen since the Internet. It’s enabling companies to innovate much faster than ever before (in existing market segments and altogether new ones).”

How can we reconcile this with the finding that AI can barely complete 3% of freelance tasks? There are a few possibilities. Companies may be using AI as a convenient justification for broader restructuring aimed at cutting costs. Alternatively, they may be automating specific, narrow tasks within jobs, allowing them to consolidate roles and operate with a leaner workforce. It’s also possible that these are forward-looking decisions, made in anticipation of future AI capabilities rather than a reflection of what is possible today.

But if the Remote Labor Index is any indication, AI is highly unlikely to step in and perform the complex, end-to-end responsibilities of the roles being vacated.

The Future of Work: A Partnership, Not a Pink Slip

The rush to declare the end of human work is premature. While the progress of artificial intelligence is undeniably impressive, the ability to perform coherent, complex, and economically valuable work autonomously remains a distant frontier. The journey from demonstrating isolated skills in a controlled environment to delivering reliable performance in the messy, dynamic context of the real world is long and arduous.

The true story of AI in the workplace today is not one of replacement but of collaboration. The challenge for professionals in every field is to understand both the incredible potential and the profound limitations of this technology. The most valuable skills in the coming decade will not be the ones that can be automated but the ones that AI cannot replicate: critical thinking, creative problem-solving, strategic planning, and the uniquely human ability to integrate disparate tools and ideas to create something new. The future of work isn’t about AI taking your job; it’s about building a partnership with AI to do your job better than ever before.
