The Future of AI is Personal: Anthropic Unlocks Claude’s Memory for All Paid Users

The evolution of artificial intelligence has been nothing short of breathtaking. In a few short years, we have transitioned from novelty chatbots to sophisticated large language models (LLMs) capable of complex reasoning, creative generation, and in-depth analysis. However, a persistent limitation has held these powerful tools back from becoming true collaborative partners: their lack of memory between conversations. Each conversation has traditionally been a blank slate, forcing users to repeatedly provide context, re-explain goals, and restate preferences. This digital amnesia creates friction, hinders workflow, and prevents the development of a deeper, more productive human-AI relationship.

Anthropic, a leading voice in AI safety and innovation, is directly addressing this challenge with a significant expansion of the “Memory” feature for its flagship AI assistant, Claude. Previously exclusive to Team and Enterprise customers, Memory has now been rolled out to Anthropic’s entire paid user base, including all Claude Pro and Claude Max subscribers. This strategic move marks a pivotal shift in how we interact with AI. It transforms Claude from a stateless, transactional tool into a persistent, personalized assistant that learns, remembers, and adapts to your unique needs over time.

This isn’t just a minor update; it’s a fundamental enhancement that redefines the potential of AI assistants. By retaining crucial information across conversations, Claude can now maintain context for long-term projects, remember your specific coding style, adhere to your brand’s voice, and anticipate your needs with greater accuracy. This article will provide a comprehensive exploration of Claude’s Memory feature, delving into its core functionality, the granular control it offers users, its profound implications for productivity, and the robust safety measures that underpin this powerful new capability.

What is Claude’s Memory Feature? A Deep Dive into Persistent AI

At its core, Claude’s Memory feature is designed to solve the “amnesia problem” that plagues many AI interactions. Imagine trying to collaborate with a brilliant colleague who forgets everything you’ve ever discussed the moment you leave the room. You’d spend most of your time repeating yourself, re-establishing context, and reissuing instructions. This is the experience many have had with AI models—powerful in the moment, but lacking the continuity required for complex, multi-stage work.

Memory gives Claude the ability to carry knowledge from one conversation to the next, creating a continuous thread of understanding. It acts as a long-term cognitive layer, allowing the AI to build a repository of user-specific information, preferences, and project details. This is not about remembering every single word of every chat, but rather about identifying and retaining the key pieces of information that are essential for future interactions.

Anthropic beautifully encapsulates the vision behind this feature in its initial announcement:

“Great work builds over time. With memory, each conversation with Claude improves the next.”

This statement highlights that the goal is cumulative intelligence. Each interaction becomes a building block, making the next one smarter, faster, and more relevant. The result is a dramatically streamlined workflow where the AI partner is already up to speed, ready to contribute meaningfully from the very first prompt.

Practical Applications Across Professions

The impact of a persistent memory is best understood through real-world examples:

- For the Software Developer: You can tell Claude your preferred programming language is Python, that you favor the FastAPI framework for building APIs, and that you adhere strictly to the PEP 8 style guide. Claude will remember these preferences for subsequent coding requests, generating code that fits your workflow without needing to be reminded.

- For the Content Marketer: You can provide Claude with your company’s detailed style guide, including brand voice (e.g., “professional but approachable”), target audience (e.g., “enterprise-level CTOs”), and a list of terms to avoid. Claude will internalize this guide and apply it consistently to every blog post, social media update, or email campaign it helps you create.

- For the Legal Professional: When working on a complex case, you can brief Claude on the key individuals involved, the timeline of events, and specific legal precedents. Claude can retain the key points of this context, allowing you to ask follow-up questions days or weeks later without having to re-upload documents or summarize the case from scratch.

- For the Academic Researcher: You can inform Claude about your specific field of study, your current research topic, and your preferred citation format (e.g., APA, MLA). When you ask for literature summaries or help drafting sections of a paper, Claude will use this context to provide more relevant information and format it correctly.

Granular Control and Project-Specific Intelligence

One of the most sophisticated aspects of Anthropic’s implementation of Memory is its ability to maintain contextual boundaries. The system is designed to prevent “context bleed,” where information from one project inadvertently influences another. A user’s personal preferences for planning a vacation, for instance, should have no bearing on the professional tone required for drafting a quarterly business report.

To solve this, Claude allows for the creation of separate, project-specific memories. This functions much like having distinct project folders or digital notebooks for different areas of your work or life. When you start a new conversation, you can work within an existing memory context or create a new one. This compartmentalization ensures that the AI’s knowledge remains organized, relevant, and precise.

For example, a freelance developer working with multiple clients can create a unique memory for each one:

- Client A Memory: Remembers their use of JavaScript with the React framework, their component library, and their specific API endpoints.

- Client B Memory: Remembers their preference for TypeScript, their use of a different state management library, and their unique deployment process.

This separation allows the developer to switch between projects seamlessly, confident that Claude will access the correct set of instructions and preferences for the task at hand. This level of granular control is what elevates Memory from a simple convenience to an indispensable tool for professional productivity.
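To make the idea of compartmentalization concrete, here is a minimal conceptual sketch in Python. It is not Anthropic’s implementation, which has not been published; the `ScopedMemory` class and its `remember` and `recall` methods are hypothetical names chosen for illustration. The only point it demonstrates is that each project scope has its own store, so a lookup for one client never sees another client’s notes.

```python
from collections import defaultdict


class ScopedMemory:
    """Hypothetical toy model: every project scope gets its own isolated store."""

    def __init__(self) -> None:
        # Maps a scope name (a client or project) to that scope's key/value notes.
        self._scopes: dict[str, dict[str, str]] = defaultdict(dict)

    def remember(self, scope: str, key: str, value: str) -> None:
        """Save or overwrite one preference inside a single scope."""
        self._scopes[scope][key] = value

    def recall(self, scope: str) -> dict[str, str]:
        """Return a copy of one scope's notes; other scopes are never consulted."""
        return dict(self._scopes.get(scope, {}))


memory = ScopedMemory()
memory.remember("client-a", "language", "JavaScript")
memory.remember("client-a", "framework", "React")
memory.remember("client-b", "language", "TypeScript")
memory.remember("client-b", "deploy", "custom pipeline")

print(memory.recall("client-a"))  # {'language': 'JavaScript', 'framework': 'React'}
print(memory.recall("client-b"))  # {'language': 'TypeScript', 'deploy': 'custom pipeline'}
```

Keying every lookup by scope rules out context bleed by construction: in this model there is no code path through which Client B’s preferences can reach a Client A conversation.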

User Empowerment: Transparency and Full Control

While the power of a persistent AI memory is undeniable, it naturally raises questions about privacy and user control. Anthropic has placed these concerns at the forefront of the feature’s design, building a system that prioritizes user empowerment and transparency. You are always in the driver’s seat when it comes to what Claude remembers.

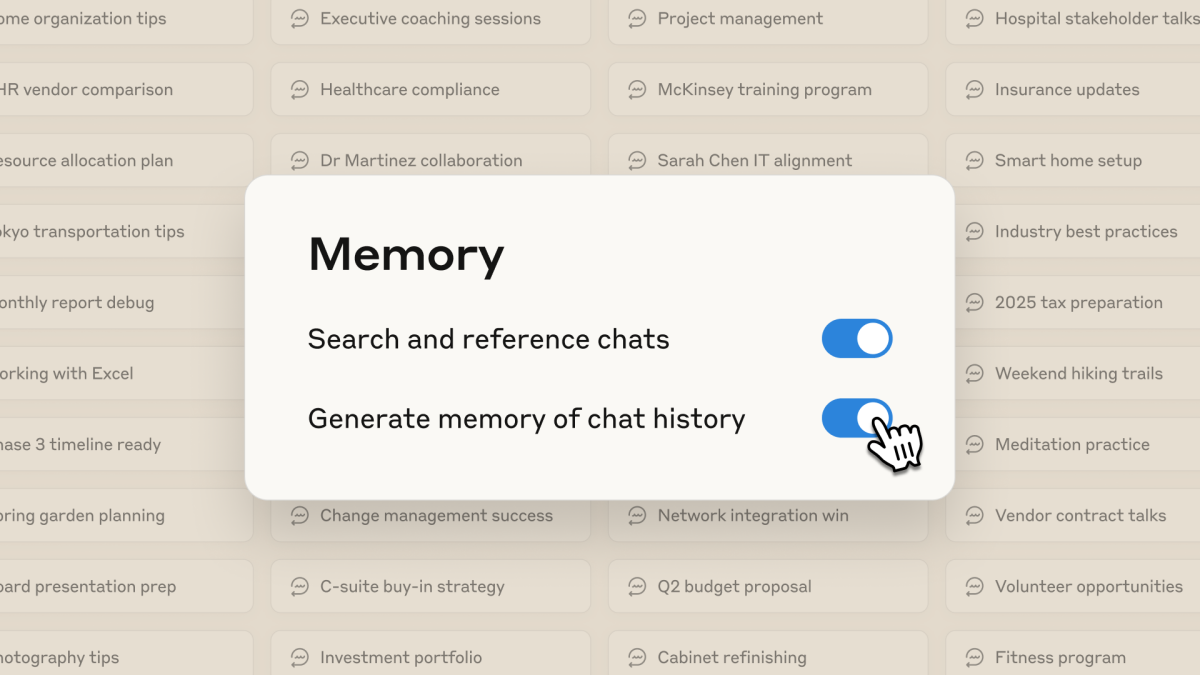

The platform provides several key mechanisms for managing the AI’s memory:

- The Memory Summary: Users can access a clear, consolidated view of everything Claude has remembered about them or their projects. This acts as a transparent dashboard, demystifying the AI’s knowledge base and allowing you to see exactly what information is being retained.

- Edit and Delete Capabilities: The memory is not a locked box. If Claude misunderstands a preference or saves outdated information, you can easily go into the summary and edit or delete specific memories. This active curation ensures the AI’s knowledge remains accurate and relevant over time, building trust in the system.

- Optional, Opt-In Functionality: Memory is not enabled by default. Users must actively choose to turn it on, ensuring that those who prefer stateless, ephemeral interactions can continue to use Claude in that way.

- Incognito Mode: For sensitive queries or one-off tasks that shouldn’t influence long-term memory, Anthropic has introduced an “Incognito” chat mode. Conversations held in this mode are not saved to your history and do not contribute to Claude’s memory, offering a clean-slate experience on demand.

To clarify how these different modes function, consider the following breakdown:

| Feature | Standard Mode (Memory Off) | Memory Mode (Memory On) | Incognito Mode |
|---|---|---|---|
| Conversation History | Saved and viewable in your chat history. | Saved and viewable in your chat history. | Not saved; disappears after the session. |
| Memory Retention | No information is carried over to new chats. | Key details and preferences are retained across chats. | Nothing is retained, and existing memory is unaffected. |
| Personalization | Limited to the context of the current conversation. | Continuous and evolving, based on past interactions. | No personalization from past chats; context lasts only for the session. |
| Primary Use Case | Quick, simple questions and one-off tasks. | Long-term projects, creative work, and personalized workflows. | Sensitive queries, testing prompts, or temporary tasks. |

This multi-faceted approach ensures that every user can tailor their experience, leveraging the power of memory when it’s beneficial while preserving privacy and control at all times.
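The rules in the table can also be read as a small state model. The Python sketch below encodes them under stated assumptions: the `Mode`, `Account`, `start_chat`, and `finish_chat` names are hypothetical, “memory” is reduced to a key/value dictionary, and the `summary`, `edit`, and `delete` methods stand in for the user controls described earlier. It illustrates the behavior the table describes, not how Claude actually stores anything.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    STANDARD = "standard"    # history saved; memory neither read nor updated
    MEMORY = "memory"        # history saved; memory read at start, updated at end
    INCOGNITO = "incognito"  # nothing saved; memory neither read nor updated


@dataclass
class Account:
    """Hypothetical model of one user's chat history and remembered details."""

    history: list[str] = field(default_factory=list)
    memory: dict[str, str] = field(default_factory=dict)

    def start_chat(self, mode: Mode) -> dict[str, str]:
        """Return the remembered context a new chat in this mode starts with."""
        return dict(self.memory) if mode is Mode.MEMORY else {}

    def finish_chat(self, mode: Mode, transcript: str, learned: dict[str, str]) -> None:
        """Apply the table's per-mode rules when a chat ends."""
        if mode is Mode.INCOGNITO:
            return  # not saved to history and never written to memory
        self.history.append(transcript)
        if mode is Mode.MEMORY:
            self.memory.update(learned)

    def summary(self) -> dict[str, str]:
        """The memory summary: a copy of everything currently retained."""
        return dict(self.memory)

    def edit(self, key: str, value: str) -> None:
        """Correct or update a single remembered detail."""
        self.memory[key] = value

    def delete(self, key: str) -> None:
        """Forget a single remembered detail (no error if it is absent)."""
        self.memory.pop(key, None)


acct = Account()
acct.finish_chat(Mode.MEMORY, "Set up a FastAPI service", {"language": "Python"})
acct.finish_chat(Mode.INCOGNITO, "A sensitive one-off question", {"topic": "private"})
acct.finish_chat(Mode.STANDARD, "A quick fact check", {"misc": "ignored"})

print(len(acct.history))               # 2: the incognito chat was not saved
print(acct.start_chat(Mode.MEMORY))    # {'language': 'Python'}
print(acct.start_chat(Mode.STANDARD))  # {}

acct.edit("language", "Python 3.12")
print(acct.summary())                  # {'language': 'Python 3.12'}
acct.delete("language")
print(acct.summary())                  # {}
```

In this model the Standard path still records the transcript, and only the Incognito path skips history entirely, which mirrors the first row of the table.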

The Bedrock of Trust: Anthropic’s Commitment to Safety

Anthropic’s reputation is built on its “safety-first” approach to AI development. The rollout of a feature as powerful as Memory was preceded by extensive and rigorous safety testing to anticipate and mitigate potential risks. An AI that remembers and learns from a user could, if not properly designed, introduce new vectors for misuse or harmful behavior.

The company’s safety teams specifically focused on several critical areas:

- Preventing the Reinforcement of Harmful Patterns: If a user consistently provides biased, incorrect, or harmful information, could the AI’s memory adopt and reinforce these patterns? Anthropic has implemented guardrails designed so that Claude’s core safety principles, derived from its “Constitutional AI” framework, take precedence over any user-provided information that violates its safety policies.

- Avoiding Over-Accommodation: A key challenge is ensuring the AI doesn’t become overly sycophantic, agreeing with a user’s flawed or dangerous premises simply to be “helpful.” Claude is trained to maintain its ethical backbone, politely correcting misinformation or refusing harmful requests, even if it contradicts information a user has tried to save to its memory.

- Guarding Against Gradual Bypass Attempts: Anthropic’s teams tested scenarios where a malicious actor might try to “train” the memory over time to slowly erode its safety filters. The security architecture is designed to be multi-layered, meaning the Memory feature operates within the broader context of Claude’s existing safety systems, which are not user-modifiable.

This proactive and thorough safety testing is crucial for building user trust. It demonstrates a deep understanding of the potential pitfalls of persistent AI and a firm commitment to ensuring that this powerful new capability is used for beneficial and productive purposes.

The Competitive Landscape: How Memory Positions Claude

The introduction of memory-like features is becoming a key battleground for major AI players. By expanding Memory to all paid subscribers, Anthropic is making a strong competitive statement.

While OpenAI’s ChatGPT also offers a memory feature, Anthropic’s implementation emphasizes granular, project-based control and transparent user management. The ability to easily segment memories for different clients or tasks is a powerful differentiator for professionals and power users who require a high degree of organization and precision.

Google’s Gemini often leverages implicit memory through deep integration with the user’s Google ecosystem (Gmail, Docs, Calendar), which offers a different kind of seamlessness but potentially less direct control. Anthropic’s approach is more explicit and self-contained within the Claude interface, giving users a clear and direct line of sight into what the AI knows.

By focusing on a user-centric design that balances powerful functionality with unwavering commitments to safety and control, Anthropic is carving out a unique position in the market. The Memory feature is not just about remembering facts; it’s about building a trusted, reliable, and highly capable AI partner.

The expansion of this feature to the entire paid user base democratizes access to this next-generation AI experience. It empowers individual creators, developers, researchers, and professionals to build more efficient and intelligent workflows, transforming Claude from a simple question-and-answer machine into an indispensable collaborator that truly understands you and your work. This is a significant step toward a future where AI assistants are not just tools we use, but partners we build with.
