Chaos Engineering: The Unsung Hero in the Age of Artificial Intelligence

The world is in the midst of a technological renaissance, powered by the explosive growth of Artificial Intelligence. From generative AI creating stunning art to large language models (LLMs) revolutionizing customer service, companies are in a frantic race to deploy AI-powered applications. This rush to innovate is creating a parallel, often unseen, surge in system complexity. With every new AI model, API integration, and data pipeline, we are building digital skyscrapers on foundations we haven’t fully stress-tested. This escalating complexity is a direct threat to the uptime, availability, and reliability that customers demand.

It boils down to a simple but dangerous equation: more interconnected components and dependencies mean more potential points of failure. The risk compounds when AI also accelerates deployment velocity, pushing code into production faster than ever before. In this new, high-stakes environment, traditional quality assurance methods are no longer sufficient. We cannot simply test for the knowns; we must prepare for the unknowns.

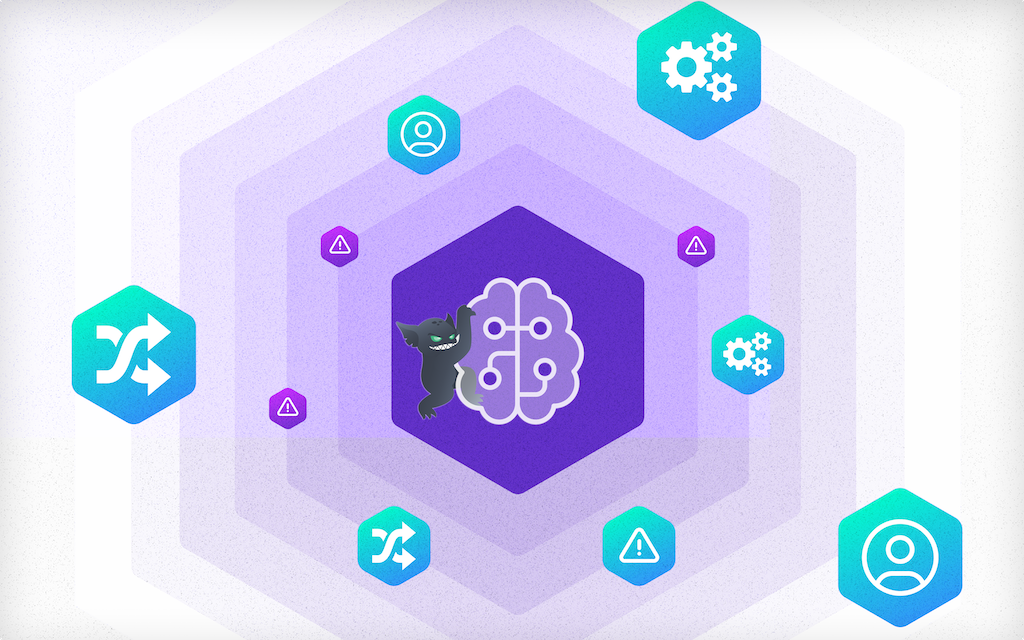

This is precisely where Chaos Engineering becomes not just a best practice, but a non-negotiable, core discipline for any organization serious about building resilient AI systems. The practice of Fault Injection through Chaos Engineering is the only proven method to systematically uncover the latent failure modes lurking between services, applications, and the complex AI models they depend on. By integrating it into your development and testing lifecycle, you can proactively plug these critical gaps before they escalate into costly, reputation-damaging incidents.

A Familiar Echo: Why the AI Boom Mirrors the Cloud Revolution

For those who have been in the industry for a while, this moment feels familiar. We have witnessed a similar seismic shift before: the migration to the cloud. The cloud was a game-changer, promising unprecedented speed, scale, and agility. However, it came with its own set of headaches. In exchange for execution speed, engineering teams relinquished direct control over their hardware. Suddenly, they had to design for servers that could disappear without warning, treat every internal service as a network dependency, and contend with an entirely new class of failure modes.

It was in the crucible of this cloud migration that Chaos Engineering was born. At Netflix, as the company scrambled to move its massive infrastructure to the cloud, a tool called Chaos Monkey was created. Its purpose was not to cause random havoc but to serve as a forcing function—a deliberate, automated way to simulate host failures and compel engineers to confront the ephemeral nature of cloud infrastructure head-on. It trained teams to design for resilience from the ground up, building systems that could withstand the inherent chaos of a distributed environment.

Chaos Engineering has evolved significantly since those early days of simply shutting down servers. Today, it is a sophisticated and precise toolkit for systematically injecting a wide range of faults into a system. Engineers can now simulate everything from network blackholes and crippling latency spikes to resource exhaustion and catastrophic node failures. It allows us to surgically probe for weaknesses and understand the intricate, often nasty, interactions that can derail complex distributed systems.

This evolution couldn’t have come at a better time, because the AI boom is cranking up the stakes to an entirely new level. As companies race to integrate AI, they are dramatically expanding their architectural footprints with more dependencies and faster deployment cycles, multiplying reliability risks. Without the proactive, empirical validation that Chaos Engineering provides, these hidden gaps will inevitably transform into major outages that hit your customers and your bottom line hard.

The Hidden Fragility of Modern AI Architectures

Even without the added layer of AI, modern applications are a minefield of potential failures. It’s common to see production environments running hundreds of interconnected Kubernetes services, where the opportunities for things to go sideways are nearly endless. But AI cranks that complexity up to eleven, exponentially ballooning deployment scale and performance demands.

Consider a seemingly straightforward application that integrates with a commercial LLM, like GPT-4 or Claude, through an API. Even if you maintain your core architecture, you are introducing a plethora of new network calls, and every one of them is a new hard dependency. Each of these API calls can fail. They can slow down dramatically, introducing unacceptable latency. The provider could experience an outage, rate-limit your requests, or push a breaking change to their API. The quality of the model’s response could even degrade under load, as Anthropic discovered when load balancer issues led to low-quality Claude responses.
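To make these failure modes concrete, here is a minimal Python sketch of one defensive pattern: bounded retries with exponential backoff, followed by a fallback. Everything named here is an assumption for illustration. `RateLimitedError`, `ProviderUnavailableError`, and `call_with_resilience` are hypothetical and not part of any provider's SDK, and `call_llm` stands in for whatever client function your application actually uses.

```python
import random
import time
from typing import Callable


class RateLimitedError(Exception):
    """Hypothetical: the provider rejected the request due to rate limiting."""


class ProviderUnavailableError(Exception):
    """Hypothetical: the provider timed out or returned a server error."""


def call_with_resilience(
    call_llm: Callable[[str], str],
    prompt: str,
    fallback: Callable[[str], str],
    max_attempts: int = 3,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try the LLM a bounded number of times, then degrade to the fallback."""
    for attempt in range(max_attempts):
        try:
            return call_llm(prompt)
        except (RateLimitedError, ProviderUnavailableError):
            if attempt == max_attempts - 1:
                break  # out of attempts, fall through to the fallback
            # Exponential backoff with jitter so retries do not arrive in lockstep.
            delay = base_delay_s * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))
    return fallback(prompt)


if __name__ == "__main__":
    def always_rate_limited(prompt: str) -> str:
        raise RateLimitedError("simulated 429")

    reply = call_with_resilience(
        always_rate_limited,
        "Summarize my order status",
        fallback=lambda p: "The assistant is busy. Please try again shortly.",
        sleep=lambda s: None,  # skip real waiting in the demo
    )
    print(reply)
```

A wrapper like this only handles the failures it anticipates. A breaking API change or a drop in response quality would pass straight through it, which is part of why empirical fault injection is still needed.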

The challenge is even greater if you choose to host your own model. Now, you’re responsible for the entire stack, which includes maintaining the quality and consistency of responses, managing resource-intensive GPU clusters, and ensuring the health of complex data pipelines. These are not trivial problems. Overlooking these potential gotchas is easy when you’re on the bleeding edge of innovation. That is precisely why a “trust, but verify” ethos is essential. Chaos Engineering provides the framework to make this ethos a reality, allowing you to uncover and mitigate these vulnerabilities before they turn into full-blown disasters.

To truly appreciate the shift, it’s helpful to compare the failure domains of traditional applications with their modern AI-powered counterparts.

| Failure Domain | Traditional Monolithic App | Modern AI-Powered App |
|---|---|---|
| Compute | Server CPU overload, Memory leaks | GPU/TPU starvation, Pod eviction storms, Resource contention in shared clusters |
| Network | Internal network latency, Database connection loss | API gateway timeouts, LLM provider latency/outage, Vector database connectivity issues |
| Data | Corrupt database records, Slow queries | Data pipeline failure, Malformed input data, Model drift, “Poisoned” training data |
| Dependencies | Library version conflicts, Third-party service outage | External model API changes, Rate limiting, Authentication failures with AI services |
| State | Session state loss, Inconsistent cache | Inconsistent model responses, Hallucinations under stress, Failure to maintain conversational context |

Beyond Testing: Embracing a Culture of Proactive Resilience

Unveiling a slick new chatbot or an AI-driven analytics tool is the glamorous part of the job. Ensuring it stays online, responsive, and reliable day after day? That’s the grind. But the truth is, mastering this unglamorous work is what unlocks the bandwidth for the truly innovative projects that excite engineers and drive the business forward. Most product roadmaps don’t budget time for firefighting production incidents, so every outage is a direct tax on your team’s ability to deliver new features.

This isn’t just a theory. A major telecom client recently analyzed the performance of their services, comparing teams that had embraced a robust Chaos Engineering practice against those that had not. The results were stark. The services owned by teams practicing Chaos Engineering on a platform like Gremlin experienced significantly fewer pages and maintained rock-solid uptime. Their engineers spent far less time battling incidents and more time shipping killer features that delighted customers.

The core philosophy of Chaos Engineering is not about recklessly breaking things in production. It is about conducting controlled, scientific experiments to build confidence in your system’s ability to withstand turbulent and unexpected conditions. It’s about moving from a reactive stance of fixing things after they break to a proactive culture of building systems that are resilient by design.

To build this culture, you must leverage the full spectrum of modern fault injection techniques.

- Network Faults: Simulate unreliable network conditions by introducing latency, packet loss, or blackhole traffic between your application and a critical AI service. How does your system behave when the connection to your LLM provider becomes slow or drops entirely?

- Resource Exhaustion: Deliberately stress CPU, memory, GPU, or I/O to observe how the system performs under heavy load. This is a common scenario during peak traffic or intensive model inference. Does your application gracefully degrade, or does it crash and burn?

- Dependency Failures: Simulate a complete failure or severely degraded performance of a critical dependency. This could be a vector database, an authentication service, or the LLM API itself. Does your application have proper timeouts, retries, and fallback mechanisms?

- State and Application-Level Faults: Go beyond infrastructure and inject application-specific errors. Force a model to return malformed or nonsensical data. Simulate a cache failure that forces every request to hit the primary model. These experiments reveal weaknesses that infrastructure-level tests might miss.
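The fault types listed above can be approximated at the application level with a small wrapper around a dependency call. The following Python sketch is illustrative only and is not how any particular Chaos Engineering platform works. `FaultConfig`, `with_faults`, and `InjectedFault` are hypothetical names, and the dependency is assumed to be a simple function that takes and returns a string.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, Optional


class InjectedFault(Exception):
    """Raised in place of a real dependency failure during an experiment."""


@dataclass
class FaultConfig:
    latency_s: float = 0.0       # extra delay added before every call
    error_rate: float = 0.0      # probability of raising InjectedFault
    malformed_rate: float = 0.0  # probability of returning a garbage payload


def with_faults(
    dependency: Callable[[str], str],
    config: FaultConfig,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[[str], str]:
    """Wrap a dependency so that calls to it experience the configured faults."""
    rng = rng or random.Random()

    def wrapped(request: str) -> str:
        if config.latency_s > 0:
            sleep(config.latency_s)  # network fault: added latency
        if rng.random() < config.error_rate:
            raise InjectedFault("simulated dependency failure")
        if rng.random() < config.malformed_rate:
            return "{not valid json"  # application-level fault: malformed output
        return dependency(request)

    return wrapped


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        return '{"answer": "42"}'

    # Fail roughly half of all calls and observe how the caller copes.
    chaotic_llm = with_faults(fake_llm, FaultConfig(error_rate=0.5))
    for _ in range(5):
        try:
            print(chaotic_llm("What is the answer?"))
        except InjectedFault as exc:
            print("caller must handle:", exc)
```

Because the wrapper lives inside the application, it cannot reproduce resource exhaustion or real packet loss. Those faults need to be injected at the host, container, or network layer by dedicated tooling.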

A Practical Blueprint for Implementing Chaos Engineering in Your AI Stack

Adopting Chaos Engineering doesn’t have to be an overwhelming, all-or-nothing endeavor. The key is to start small and build momentum. By following a systematic approach, you can progressively build a powerful resilience practice across your organization.

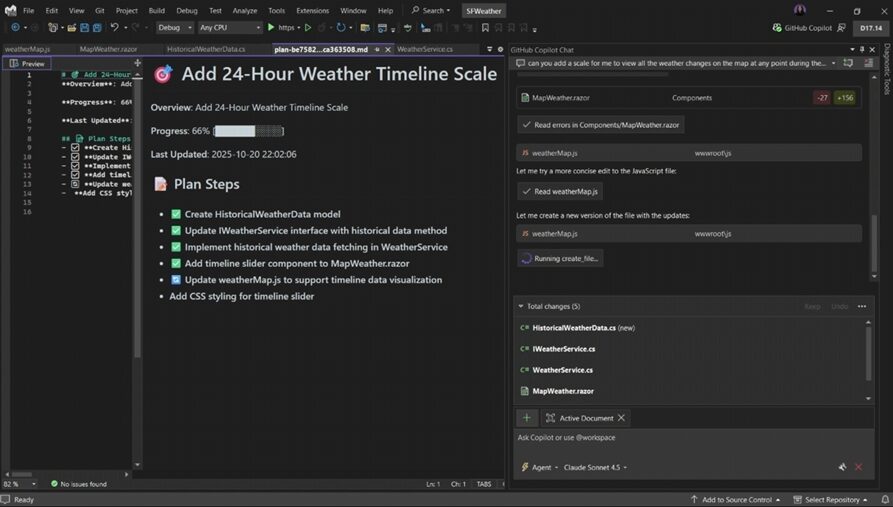

1. Get Systematic and Start Small: Begin by zeroing in on high-stakes failure points. Don’t try to boil the ocean. Identify a single, business-critical AI feature. Ask a simple question: “What is the worst thing that could happen to this feature?” Perhaps it’s your LLM API endpoint becoming unresponsive. This is the perfect target for your first experiment.

2. Formulate a Clear Hypothesis: A chaos experiment is a scientific process, and every good experiment starts with a hypothesis. Define what you expect to happen when you inject a fault. For example: “If the primary LLM API experiences a 500 ms latency spike, our application will gracefully fall back to a cached response within 1 second, and the user-facing error rate will not increase.” This clarity is crucial for determining success or failure.

3. Run Controlled, Contained Experiments: Use a dedicated Chaos Engineering tool to inject the fault in a controlled environment. Always start in a staging or development environment to validate your experiment. Once you are confident, move to production, but limit the “blast radius.” Target a small subset of traffic, perhaps only internal users or a tiny percentage of customers, to minimize any potential impact.

4. Measure, Analyze, and Iterate: Throughout the experiment, closely monitor your key business and system metrics: application error rates, request latency, CPU utilization, and user satisfaction scores. Did the system behave as your hypothesis predicted? Did your failover and retry mechanisms kick in as designed? Use the findings to identify weaknesses, fix them, and then run the experiment again to validate the fix.

5. Scale, Standardize, and Automate: The true power of Chaos Engineering is unlocked when it becomes a consistent, organization-wide practice.

Curate a shared library of standard attacks and failure scenarios. This lightens the load for individual teams, establishes a common resilience benchmark, and amplifies the impact of the practice across the entire organization.

Make these experiments a routine part of your development lifecycle. Schedule regular tests to spotlight evolving risks before they escalate into incidents. Integrate automated chaos experiments directly into your CI/CD pipeline, making resilience a non-negotiable quality gate for every new release. Finally, create a reliability scorecard to track trends over time. Highlight wins, hold teams accountable when issues arise, and loop in executive leadership not just for visibility, but to champion a cross-company culture of reliability. This isn’t about finger-pointing; it’s about rallying the entire organization around the shared goal of building unbreakable systems.
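The hypothesis from step 2 and the blast-radius limit from step 3 can be expressed as an automated check, which is one way such an experiment might become a CI/CD quality gate. The Python sketch below is a simplified illustration under stated assumptions. All function names are hypothetical, the 300 ms client timeout is an assumed value that the article does not specify, and the latency is injected in-process with `time.sleep` rather than by a real Chaos Engineering tool.

```python
import hashlib
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, Dict, List

# Shared worker pool; a call that times out keeps running in the background.
_POOL = ThreadPoolExecutor(max_workers=4)


def in_blast_radius(user_id: str, percent: float) -> bool:
    """Deterministically select roughly `percent` percent of users."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # percent=1.0 selects buckets 0..99


def answer_with_fallback(
    llm: Callable[[str], str], prompt: str, cache: Dict[str, str], timeout_s: float
) -> str:
    """Return the model's answer, or a cached one if the model is too slow."""
    future = _POOL.submit(llm, prompt)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return cache.get(prompt, "Please try again shortly.")


def run_latency_experiment(
    llm: Callable[[str], str],
    cache: Dict[str, str],
    prompts: List[str],
    injected_latency_s: float = 0.5,  # the 500 ms spike from the hypothesis
    timeout_s: float = 0.3,           # assumed client-side timeout
    budget_s: float = 1.0,            # "within 1 second"
) -> dict:
    """Inject latency, then report whether the hypothesis held."""
    def slow_llm(prompt: str) -> str:
        time.sleep(injected_latency_s)  # the injected fault
        return llm(prompt)

    worst_s, errors = 0.0, 0
    for prompt in prompts:
        start = time.monotonic()
        try:
            answer_with_fallback(slow_llm, prompt, cache, timeout_s)
        except Exception:
            errors += 1  # any user-facing failure counts against the hypothesis
        worst_s = max(worst_s, time.monotonic() - start)
    return {
        "worst_latency_s": worst_s,
        "errors": errors,
        "hypothesis_holds": worst_s <= budget_s and errors == 0,
    }


if __name__ == "__main__":
    result = run_latency_experiment(
        llm=lambda p: "fresh answer",
        cache={"status?": "cached answer"},
        prompts=["status?", "uncached question"],
    )
    print(result)
    print("user-123 in 1% blast radius:", in_blast_radius("user-123", 1.0))
```

Hashing the user ID keeps each user consistently inside or outside the experiment, so a single customer does not flip between normal and degraded behavior from one request to the next. In a pipeline, a job could fail the build whenever `hypothesis_holds` is false.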

If Chaos Engineering has been sitting on your technical backlog, the AI surge is the urgent cue to turn up the heat. The technological landscape is shifting faster than ever before, and reliability must keep pace. By embracing this discipline, you ensure that when a user interacts with your groundbreaking AI feature, it is always available, responsive, and delivering the results they can count on. The future is being built on AI, but that future will only be as strong as its resilience.
