GitKraken Unveils Insights: A New Era for Measuring the ROI of AI in Software Development

GitKraken, known for its developer experience tools, has announced GitKraken Insights, a platform designed to give engineering leaders the data they need to measure how artificial intelligence affects developer productivity and team performance. As AI tools become deeply integrated into development workflows, quantifying their return on investment (ROI) has remained elusive, and GitKraken Insights aims to address that problem directly.

The rapid adoption of AI-powered coding assistants, automated testing tools, and intelligent CI/CD pipelines has fundamentally changed how software is built. The promise of faster timelines and better code quality is compelling, but engineering leaders have struggled to connect these tools to tangible business outcomes. Traditional metrics, long the standard for measuring engineering efficiency, were not designed for an AI-augmented development lifecycle. GitKraken’s latest offering targets this gap.

The AI Productivity Paradox: Are We Faster, or Just Busier?

The central challenge that GitKraken Insights addresses is what the company refers to as the “AI Productivity Paradox.” This paradox describes a situation where the adoption of AI tools leads to a flurry of activity, but not necessarily a corresponding increase in genuine productivity or value delivery. Engineering teams are undoubtedly changing how they work, but the fundamental question remains: is this change leading to better results?

Consider the common scenarios in an AI-driven team:

- Increased Code Volume: AI code generators can produce vast amounts of code in seconds. This might lead to a higher number of pull requests and commits.

- Superficial Velocity: On the surface, metrics like pull request counts might skyrocket. A manager looking at this data in isolation might conclude that the team has become dramatically more productive.

- Hidden Costs: The reality, however, can be far more complex. Is the AI-generated code of high quality? Does it introduce subtle bugs or increase technical debt? Does it require more extensive and time-consuming review cycles from senior developers, shifting the bottleneck rather than eliminating it?

This is the crux of the paradox: without a deeper, more contextualized view, organizations risk mistaking motion for progress. GitKraken highlights where existing measurement frameworks fall short.

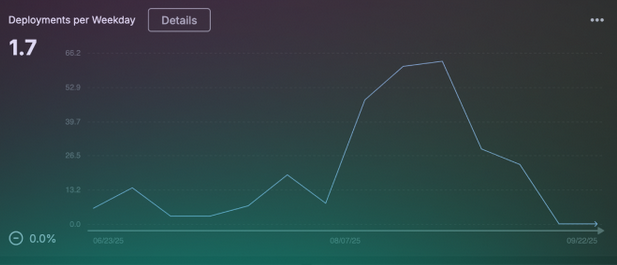

“DORA metrics can tell you if deployment frequency changed, but they can’t tell you why. Pull request counts might go up, but is that because developers are more productive, or because AI is generating code that requires more review cycles?”

The quote captures the problem. Without a way to tell these explanations apart, teams are investing heavily in AI with no reliable means of verifying whether those investments are paying off. An increase in deployment frequency, for example, could reflect smaller and more efficient batch sizes, or it could reflect lower-quality code that needs frequent hotfixes. GitKraken Insights is designed to help distinguish between these scenarios.

Why Traditional Engineering Metrics Fall Short in the Age of AI

For years, the software development industry has relied on established frameworks like DORA (DevOps Research and Assessment) metrics to gauge the health and performance of engineering teams. These metrics—Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Time to Restore Service—have been invaluable for organizations transitioning to DevOps and continuous delivery models. They provide a clear, high-level view of a team’s delivery throughput and stability.
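As a rough illustration of how these four numbers are usually derived from delivery data, here is a minimal Python sketch. The `Deployment` record and the `dora_metrics` function are hypothetical and are not part of any GitKraken API; the sketch assumes each deployment records the commit times of the changes it shipped, whether it caused a production failure, and when service was restored. Using medians, and treating the deployment time as the start of an incident, are simplifying choices made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical deployment record; real data would come from CI/CD and incident tools."""
    deployed_at: datetime
    commit_times: list[datetime]            # commit timestamps of the changes shipped
    failed: bool = False                    # did this deployment cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute simplified versions of the four DORA metrics over a time window."""
    if not deploys:
        raise ValueError("need at least one deployment")
    # Deployment Frequency: deployments per day across the window.
    frequency = len(deploys) / window_days
    # Lead Time for Changes: median hours from commit to deployment.
    lead = [hours(d.deployed_at - c) for d in deploys for c in d.commit_times]
    # Change Failure Rate: share of deployments that caused a failure.
    failures = [d for d in deploys if d.failed]
    # Time to Restore Service: median hours from the failing deployment to restoration
    # (simplification: the deployment time is treated as the start of the incident).
    restore = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployment_frequency_per_day": frequency,
        "lead_time_hours": median(lead) if lead else 0.0,
        "change_failure_rate": len(failures) / len(deploys),
        "time_to_restore_hours": median(restore) if restore else 0.0,
    }


# Usage with two made-up deployments over a one-week window.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0 + timedelta(hours=4), [t0]),
    Deployment(
        t0 + timedelta(days=1, hours=6),
        [t0 + timedelta(days=1)],
        failed=True,
        restored_at=t0 + timedelta(days=1, hours=8),
    ),
]
print(dora_metrics(deploys, window_days=7))
```

Note that nothing in this calculation looks inside the code that was shipped, which is exactly the limitation the next paragraph describes.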

However, the introduction of AI complicates this picture. DORA metrics are excellent at measuring the outcomes of the development process but offer little insight into the quality and sustainability of the work being done within that process. AI’s primary impact is on the “black box” of code creation, review, and refactoring—areas that DORA doesn’t explicitly measure.

The table below illustrates the gap between what traditional metrics measure and what’s needed to understand AI’s impact.

| Metric Category | Traditional DORA Metrics Focus | AI-Era Considerations (The Gap) |
|---|---|---|
| Throughput | How often are we deploying? How long does it take from commit to deploy? | Is the increase in throughput sustainable? Are we accumulating hidden technical debt that will slow us down later? |
| Stability | How often do our deployments fail? How quickly can we recover from failure? | Is the AI-generated code more or less prone to bugs? Is the Change Failure Rate increasing due to complex, hard-to-understand code? |
| Code Quality | Not directly measured by DORA. Assumed to be a factor in stability. | How complex is the new code? Does it adhere to team standards? Is it maintainable? Is the AI introducing security vulnerabilities? |
| Developer Effort | Not directly measured. Inferred from lead time and deployment frequency. | How much time is spent reviewing AI code vs. writing original code? Is developer cognitive load decreasing, or just shifting to different tasks? |
| Team Dynamics | Not measured. Focus is on the output of the entire system. | Is the adoption of AI creating friction points in the review process? How is it impacting developer satisfaction and the overall developer experience? |

GitKraken’s core argument is that to truly understand AI’s ROI, organizations need a new, multi-faceted approach to measurement—one that combines the proven value of DORA metrics with deeper insights into code quality, technical debt, and the developer experience itself.

Introducing GitKraken Insights: A Holistic Approach to Engineering Intelligence

GitKraken positions Insights as a response to these challenges. Rather than another activity dashboard, it is presented as an intelligence platform that combines several categories of metrics into a single, actionable view of team performance. By integrating this wider range of signals, the platform aims to move beyond simple measures of activity toward a more accurate picture of engineering effectiveness.

The new offering was developed in close collaboration with GitClear, a company specializing in software engineering intelligence (SEI). GitKraken brings its expertise in developer experience (DevEx) and a user base of millions of developers; GitClear contributes analytical capabilities for dissecting code contributions and workflow patterns.

GitKraken describes the combination this way: “Their SEI capabilities, combined with our developer experience expertise and the trust we’ve earned with millions of developers, create something unique in the market.” The intent is for Insights to analyze not just what is being produced, but how it is being produced and how the developers producing it feel about the process.

Deconstructing the Five Pillars of GitKraken Insights

To paint a complete picture of engineering health, GitKraken Insights is built on five interconnected pillars of measurement. Together, they are meant to help teams see the cause-and-effect relationships between their actions, their tools, and their outcomes.

- DORA Metrics: The platform doesn’t abandon traditional metrics but incorporates them as a foundational layer. Teams can still track their deployment frequency, lead time, and stability, providing a consistent baseline for high-level performance and a bridge between old and new measurement paradigms.

- Code Quality Analysis: This goes beyond simple pass/fail on a linter. Insights will likely analyze factors like code complexity (cyclomatic complexity), churn (how often code is rewritten shortly after being committed), and adherence to best practices, providing an objective measure of the maintainability of the codebase. This is crucial for evaluating whether AI tools are contributing clean, sustainable code or just complex, unmanageable spaghetti.

- Technical Debt Tracking: Insights helps teams quantify and monitor the accumulation of technical debt. By identifying shortcuts, legacy patterns, or poorly implemented AI-generated code, it allows teams to address these issues proactively before they snowball into major problems that cripple future velocity.

- AI Impact Measurement: This is the pillar most specific to AI adoption. It provides metrics designed to isolate the effect of AI tools on the workflow. These could include the percentage of code that is AI-generated, the time saved during code creation, and the impact on code review cycles (e.g., comparing review times for human-written vs. AI-assisted pull requests).

- Developer Experience Indicators: Recognizing that a productive team is a happy and engaged team, Insights includes metrics related to the developer experience. This could involve analyzing friction in the development process, such as long wait times for CI builds, excessive context switching, or bottlenecks in the pull request review process. By identifying these pain points, teams can make targeted improvements that directly enhance developers’ day-to-day work.
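The code quality pillar above describes churn as code that is rewritten shortly after being committed. A minimal Python sketch of that idea, comparing AI-assisted lines against human-written ones, might look like the following. The `AddedLine` record, the `ai_assisted` flag, and the 14-day window are assumptions chosen for illustration; this is not necessarily how GitKraken or GitClear compute churn.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AddedLine:
    """Hypothetical line-level record, e.g. reconstructed from repository history."""
    added_at: datetime
    rewritten_at: Optional[datetime] = None  # next time the line was modified or deleted
    ai_assisted: bool = False                # hypothetical attribution flag


def churn_rate(lines: list[AddedLine], window: timedelta = timedelta(days=14)) -> float:
    """Fraction of added lines rewritten or deleted within `window` of being committed."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for ln in lines
        if ln.rewritten_at is not None and ln.rewritten_at - ln.added_at <= window
    )
    return churned / len(lines)


# Made-up data: compare AI-assisted lines against human-written lines.
t = datetime(2024, 3, 1)
lines = [
    AddedLine(t, t + timedelta(days=3), ai_assisted=True),    # rewritten quickly: churn
    AddedLine(t, None, ai_assisted=True),                     # never touched again
    AddedLine(t, t + timedelta(days=30), ai_assisted=False),  # rewritten, but outside window
    AddedLine(t, None, ai_assisted=False),
]
ai_lines = [ln for ln in lines if ln.ai_assisted]
human_lines = [ln for ln in lines if not ln.ai_assisted]
print(churn_rate(ai_lines), churn_rate(human_lines))  # 0.5 0.0
```

The time window is the key design choice: a line rewritten months later usually reflects evolving requirements, while one rewritten within days more likely signals that the original contribution was not right the first time.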

Beyond Surveillance: Ethical Metrics for Empowering Teams

One of the biggest hurdles for any engineering analytics tool is the perception of it being a “Big Brother” surveillance system. Developers are rightfully wary of tools that measure individual performance, as these can be easily misused to create unhealthy competition, foster a culture of fear, and reward the wrong behaviors (e.g., writing more lines of code instead of better code).

GitKraken says it has addressed this concern deliberately. The company emphasizes that Insights was built with a developer-first mindset, focusing on teams and workflows rather than individuals.

“We’re not just providing tools that make developers more productive—we’re helping teams prove it, measure it, and continuously improve it.”

The platform is designed to:

- Surface Systemic Bottlenecks: Instead of highlighting an individual developer as “slow,” Insights identifies points in the workflow where the entire team is getting stuck. This shifts the focus from blame to process improvement.

- Identify Friction Points: The tool helps visualize where developers are losing time and energy, whether it’s waiting for builds, struggling with a complex legacy module, or dealing with an inefficient review process.

- Facilitate Collaborative Improvement: By providing objective, team-level data, Insights empowers teams to have constructive conversations about their processes. The data serves as a neutral starting point for identifying problems and brainstorming solutions together.
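One way to keep metrics focused on process rather than people is to aggregate by workflow stage and never key on the author. The Python sketch below assumes a hypothetical input format in which each pull request is a mapping from stage name to hours spent in that stage; the stage names and the functions `stage_medians` and `bottleneck` are illustrative, not GitKraken’s data model.

```python
from collections import defaultdict
from statistics import median

# Hypothetical workflow stages; a real team's pipeline will differ.
STAGES = ("waiting_for_ci", "waiting_for_review", "in_review", "waiting_to_merge")


def stage_medians(prs: list[dict[str, float]]) -> dict[str, float]:
    """Median hours per workflow stage, pooled across every PR the team opened.

    Each PR is a mapping from stage name to hours spent in that stage. No author
    field is read, so the result describes the process rather than individuals.
    """
    samples: dict[str, list[float]] = defaultdict(list)
    for pr in prs:
        for stage in STAGES:
            if stage in pr:
                samples[stage].append(pr[stage])
    return {stage: median(hrs) for stage, hrs in samples.items()}


def bottleneck(prs: list[dict[str, float]]) -> str:
    """Name of the stage with the highest median time."""
    medians = stage_medians(prs)
    if not medians:
        raise ValueError("no stage data")
    return max(medians, key=lambda stage: medians[stage])


# Made-up timings for three pull requests.
prs = [
    {"waiting_for_ci": 0.5, "waiting_for_review": 20.0, "in_review": 2.0, "waiting_to_merge": 1.0},
    {"waiting_for_ci": 0.7, "waiting_for_review": 14.0, "in_review": 3.0, "waiting_to_merge": 0.5},
    {"waiting_for_ci": 0.4, "waiting_for_review": 30.0, "in_review": 1.5},
]
print(bottleneck(prs))  # waiting_for_review
```

A result like this points the conversation at the review queue as a whole (reviewer availability, PR size, notification habits) instead of at whoever happened to open the slowest pull request.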

This approach is critical for earning the trust and buy-in of the very developers the tool is meant to help. By focusing on systemic improvements, GitKraken aims to make Insights a tool for empowerment rather than enforcement.

Proving the Value: The Full Promise of a DevEx Platform

The launch of GitKraken Insights is significant for the company and for the broader industry. It reflects a maturing Developer Experience (DevEx) category, which is moving from individual tools that make developers’ lives easier toward integrated platforms that can demonstrate measurable business value.

The ultimate promise is a complete feedback loop: developers use tools that make them more effective, and the platform provides the data to prove that effectiveness to leadership. In a hypothetical example, this virtuous cycle might look like this:

- Adopt Tools: A team adopts a new AI coding assistant.

- Measure Impact: GitKraken Insights analyzes how this tool affects code quality, review times, and overall throughput.

- Identify Opportunities: The data reveals that while code generation is faster, review times have increased by 15% due to a lack of clear documentation for the AI-generated functions.

- Improve Process: The team establishes a new standard requiring AI-generated code to include detailed comments and links to relevant documentation.

- Verify Improvement: Insights confirms that the new standard has reduced review times back to baseline while retaining the speed benefits of the AI tool.
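The Measure and Verify steps in this example both come down to comparing a metric against a baseline period. The Python sketch below uses made-up review times that mirror the hypothetical 15% increase described above; the function names and the 5% tolerance for “back to baseline” are assumptions for illustration.

```python
from statistics import median


def pct_change(baseline: list[float], current: list[float]) -> float:
    """Percent change in median review time relative to a baseline period."""
    base = median(baseline)
    return (median(current) - base) / base * 100


def back_to_baseline(
    baseline: list[float], current: list[float], tolerance_pct: float = 5.0
) -> bool:
    """True if the current median is within `tolerance_pct` percent of the baseline."""
    return abs(pct_change(baseline, current)) <= tolerance_pct


# Made-up review times (hours) for the three phases of the example.
before_ai = [8.0, 10.0, 12.0]       # baseline, before adopting the assistant
after_ai = [9.5, 11.5, 14.0]        # after adoption, before the new standard
after_standard = [8.5, 10.0, 11.0]  # after the documentation standard

print(f"{pct_change(before_ai, after_ai):+.0f}%")   # +15%
print(back_to_baseline(before_ai, after_standard))  # True
```

A real comparison would need far more pull requests per period and some control for PR size or complexity; three samples per phase are only enough to show the arithmetic.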

The result is a data-driven, continuous-improvement model applied directly to the software development lifecycle. GitKraken’s closing message underscores this vision.

“We’re not chasing hype. We’re not promising AI will replace developers. We’re building practical tools that make developers more effective, and now we’re helping teams understand whether those tools—and all AI tools—are actually delivering value.”

With Insights, GitKraken is offering more than a new product: it is proposing a framework for evaluating AI tools in the AI-native era, so that organizations can harness them in a way that is both productive and sustainable.
