How Data Centers Actually Work: The Unseen Engines of the AI Revolution

In pursuit of artificial intelligence supremacy, tech giants are pouring hundreds of billions of dollars into a global infrastructure arms race. This year alone, companies like OpenAI, Amazon, Meta, and Microsoft have committed staggering sums to the construction and expansion of AI data centers: vast, anonymous warehouses packed with powerful computers that form the backbone of the digital world. Projects like OpenAI’s new “Stargate” data center in Texas signal a monumental bet on the future of AI.

Yet, as the deals pile up and the facilities grow larger, so do pressing concerns about their viability and sustainability. These energy-hungry behemoths are placing unprecedented strain on power grids, consuming vast quantities of water, and sparking political battles in communities around the world. Are we building the foundation for a technological utopia, or are we fueling an unsustainable bubble destined to burst? This deep dive explores how these critical facilities actually work, the immense environmental and economic stakes involved, and whether the AI industry’s relentless pursuit of scale is headed for a cliff.

From Your Keyboard to the Cloud: The Journey of an AI Query

Most of us interact with AI daily, but few understand the complex journey a simple query takes. When you type a question into a tool like ChatGPT—whether asking for dinner recipes or brainstorming a business plan—you initiate a near-instantaneous process that spans the globe and engages some of the most powerful hardware ever built. This journey reveals why data centers are so indispensable to the AI revolution.

The process begins the moment you hit “send.” Your request is transmitted from your device to the AI company’s servers, where it first undergoes a series of checks:

- Authentication: The system verifies that you are a valid user with permission to access the service.

- Moderation: Your prompt is scanned to ensure it aligns with the platform’s content guidelines and safety protocols.

- Load Balancing: A crucial step where the system determines which data center, and which specific servers within it, are best equipped to handle your request at that moment. This prevents any single facility from being overloaded.
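The three checks above can be pictured as a small pipeline. The sketch below is purely illustrative and assumes hypothetical names throughout (`Server`, `authenticate`, `passes_moderation`, `pick_server`, `handle_request`); real services rely on far more sophisticated authentication, moderation, and multi-tier load balancing. It routes each accepted request to whichever server currently has the fewest active requests, which is one common load-balancing strategy.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical stand-in for one inference server."""
    name: str
    active_requests: int


def authenticate(api_key: str, valid_keys: set[str]) -> bool:
    # Assumption: a valid user is simply one whose key is in a known set.
    return api_key in valid_keys


def passes_moderation(prompt: str, blocked_terms: set[str]) -> bool:
    # Assumption: moderation is a plain word blocklist (real systems are not).
    words = prompt.lower().split()
    return not any(term in words for term in blocked_terms)


def pick_server(servers: list[Server]) -> Server:
    # "Least connections" strategy: choose the least busy server.
    return min(servers, key=lambda s: s.active_requests)


def handle_request(api_key: str, prompt: str, valid_keys: set[str],
                   blocked_terms: set[str], servers: list[Server]) -> str:
    if not authenticate(api_key, valid_keys):
        return "rejected: authentication"
    if not passes_moderation(prompt, blocked_terms):
        return "rejected: moderation"
    server = pick_server(servers)
    server.active_requests += 1  # the chosen server now carries one more request
    return f"routed to {server.name}"
```

With two servers carrying three and one active requests respectively, a valid request would be routed to the second, less busy one.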

Once cleared, the core of your request—the text itself—is broken down into smaller, manageable pieces called “tokens.” These tokens can be words, parts of words, or even individual characters, and they act as the fundamental building blocks that AI models can process. This tokenized query is then dispatched to its final destination: a server rack filled with specialized hardware.
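To make tokenization concrete, here is a toy sketch. It assumes a tiny hand-written vocabulary (`VOCAB`) and a greedy longest-match rule, both chosen only for illustration; production tokenizers use large learned subword vocabularies (byte-pair encoding is one widely used family) rather than this simplified approach.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match tokenizer (illustrative only)."""
    tokens = []
    i = 0
    while i < len(text):
        match = None
        # Try the longest possible piece first, then shrink.
        for end in range(len(text), i, -1):
            if text[i:end] in vocab:
                match = text[i:end]
                break
        if match is None:
            # Nothing in the vocabulary fits: fall back to one character.
            match = text[i]
        tokens.append(match)
        i += len(match)
    return tokens


# Hypothetical vocabulary mixing word fragments and a single character.
VOCAB = {"din", "ner", " recipe", "s"}

print(tokenize("dinner recipes", VOCAB))
# ['din', 'ner', ' recipe', 's']
```

The word "dinner" is split into two fragments, and any character missing from the vocabulary, such as "!", would become its own token.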

This is where Graphics Processing Units, or GPUs, come into play. Originally designed for rendering complex video game graphics, GPUs are masters of parallel processing, meaning they can run many thousands of calculations at the same time. This capability makes them well suited to the intense computational demands of AI. Data centers are lined with metallic rows of servers, each containing powerful GPUs like Nvidia’s coveted H100s.

When your tokenized query arrives at these servers, the AI model begins what is known as “inference.” It analyzes the tokens from your prompt and starts predicting the most probable sequence of tokens to form a coherent and relevant answer. One at a time, it generates the next token, then the next, and the next, rapidly assembling a full response. Finally, this completed answer is sent back through the same network path, appearing on your screen in a matter of seconds. It’s a miraculous feat of engineering, and it all hinges on the immense, centralized power of the data center.
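The token-by-token loop described above can be sketched in a few lines. This is a deliberately tiny stand-in: the hypothetical `TOY_TABLE` maps each token to made-up probabilities for the next one, whereas a real model computes those probabilities with a neural network running on GPUs. The `<end>` marker and the function names are assumptions for illustration.

```python
def next_token(context: list[str], table: dict[str, dict[str, float]]) -> str:
    # Look only at the last token (assumes a non-empty context);
    # unknown tokens end the response.
    candidates = table.get(context[-1], {"<end>": 1.0})
    # Pick the most probable continuation (greedy decoding).
    return max(candidates, key=candidates.get)


def generate(prompt_tokens: list[str], table: dict[str, dict[str, float]],
             max_new_tokens: int = 10) -> list[str]:
    output = list(prompt_tokens)
    for _ in range(max_new_tokens):
        token = next_token(output, table)
        if token == "<end>":
            break
        output.append(token)  # each new token becomes context for the next step
    return output[len(prompt_tokens):]


# Made-up probabilities, for illustration only.
TOY_TABLE = {
    "dinner": {"ideas": 0.6, "time": 0.4},
    "ideas": {"include": 0.9, "<end>": 0.1},
    "include": {"pasta": 0.7, "soup": 0.3},
    "pasta": {"<end>": 1.0},
}

print(generate(["quick", "dinner"], TOY_TABLE))
# ['ideas', 'include', 'pasta']
```

Each generated token requires another pass through the model, which is part of why longer answers cost more computation than short ones.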

The Colossal Energy Appetite of AI Infrastructure

The miracle of instantaneous AI responses comes at a significant environmental cost. Data centers are among the most energy-intensive facilities on the planet. The sheer density of high-performance servers generates an enormous amount of heat, requiring constant, powerful cooling systems just to keep the hardware from overheating. Beyond cooling, these facilities need energy to power the network equipment, maintain lighting, and run security systems. This creates a relentless demand for electricity.

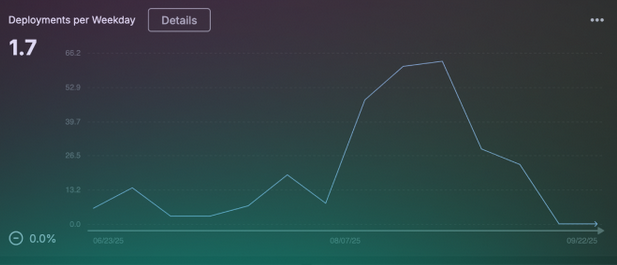

The environmental footprint of a data center isn’t static; it fluctuates based on usage cycles, often peaking during the day and decreasing overnight. More importantly, its impact is directly tied to the energy source it relies on. A facility connected to a grid powered primarily by fossil fuels like coal or natural gas will generate far more carbon emissions than one powered by solar or wind.

Calculating the precise environmental toll is notoriously difficult, as companies often treat their energy consumption and operational data as proprietary information. However, public announcements and regional analyses provide a glimpse into the staggering scale of their power needs.

Meta’s upcoming “Hyperion” data center in Louisiana is projected to require about five gigawatts of power. To put that in perspective, that is roughly half the peak power load of New York City, all for a single facility.
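For a sense of scale, a rough back-of-the-envelope calculation converts that power figure into annual energy. The sketch assumes, hypothetically, that the facility draws its full five gigawatts around the clock; the `utilization` parameter is an illustrative knob, not a reported figure, and the function names are invented for this example.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy in terawatt-hours for a constant draw of `power_gw` gigawatts."""
    gwh = power_gw * HOURS_PER_YEAR * utilization
    return gwh / 1000  # 1 TWh = 1,000 GWh


def implied_reference_load_gw(power_gw: float, fraction: float) -> float:
    """If `power_gw` is `fraction` of some reference load, return that load."""
    return power_gw / fraction


print(annual_energy_twh(5.0))               # full-time draw
print(annual_energy_twh(5.0, 0.6))          # hypothetical 60% utilization
print(implied_reference_load_gw(5.0, 0.5))  # peak load implied by "roughly half"
```

At full utilization this works out to about 43.8 terawatt-hours per year, and the "roughly half" comparison implies a city peak load of about 10 gigawatts.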

This is not an isolated case. The proliferation of data centers is pushing energy grids to their limits in several regions, creating what experts call an energy cliff.

| Region | Data Center Energy Consumption Impact |
|---|---|
| Ireland | Data centers already consume over 20% of the entire country’s electricity. |
| Virginia, USA | A major data center hub, Virginia is projected to see a dramatic surge in energy demand in the coming years, straining its grid capacity. |
| Memphis, USA | Local communities are facing air pollution and energy strain from new facilities, highlighting environmental justice concerns. |

This explosive growth in energy demand is forcing a global reckoning. While many tech companies make public commitments to renewable energy, the sheer scale and speed of their expansion often outpace the development of clean energy sources, leading them to rely on existing, often dirtier, power grids to meet their needs. The result is a growing tension between technological advancement and environmental responsibility.

The Transparency Problem: Unmasking AI’s True Environmental Cost

One of the most significant challenges in assessing the sustainability of the AI boom is a profound lack of transparency. While tech leaders occasionally offer figures to quell public concern, these numbers often raise more questions than they answer.

This summer, OpenAI CEO Sam Altman stated in a blog post that an average ChatGPT query uses about 0.34 watt-hours of energy—comparable to running an oven for just over a second or a high-efficiency light bulb for a few minutes. While seemingly small, experts like Sasha Luccioni, the climate lead at Hugging Face, argue such figures are misleading without proper context. Luccioni points out that this “average query” is undefined. Does it refer to a simple question or a complex code-generation task? More importantly, how many billions of these queries are happening every day?

The true impact depends on numerous factors that companies rarely disclose: the specific data centers processing the queries, the energy efficiency of those facilities, and the carbon intensity of the local power grids they are connected to.
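A short calculation shows why those undisclosed factors matter. In the sketch below, only the 0.34 watt-hour figure comes from the statement quoted above; the query volume, the facility overhead multiplier (PUE, or power usage effectiveness), and the grid carbon intensity are hypothetical inputs chosen for illustration. Whether the per-query figure already includes facility overhead is not stated, so applying a PUE multiplier here is itself an assumption.

```python
def daily_footprint(queries_per_day: float,
                    wh_per_query: float = 0.34,
                    pue: float = 1.0,
                    grid_g_co2_per_kwh: float = 0.0) -> tuple[float, float]:
    """Return (facility energy in kWh, emissions in tonnes of CO2) per day."""
    it_kwh = queries_per_day * wh_per_query / 1000       # Wh -> kWh
    facility_kwh = it_kwh * pue                           # add cooling and overhead
    tonnes_co2 = facility_kwh * grid_g_co2_per_kwh / 1_000_000  # g -> tonnes
    return facility_kwh, tonnes_co2


# Hypothetical scenario: one billion queries a day, PUE of 1.2,
# and two grids with different carbon intensities (grams of CO2 per kWh).
for label, intensity in [("cleaner grid", 50.0), ("dirtier grid", 400.0)]:
    kwh, tonnes = daily_footprint(1e9, pue=1.2, grid_g_co2_per_kwh=intensity)
    print(f"{label}: {kwh:,.0f} kWh/day, {tonnes:,.1f} t CO2/day")
```

Under these made-up inputs the energy use is identical in both scenarios, roughly 408,000 kWh per day, while the emissions differ by a factor of eight, which is the point the critics are making about missing context.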

As Luccioni bluntly put it, Altman “pulled that figure out of his ass.” She elaborates on the core issue: “It blows my mind that you can buy a car and know how many miles per gallon it consumes, yet we use all these AI tools every day and we have absolutely no efficiency metrics, emissions factors, nothing.”

This opacity makes it nearly impossible for the public, regulators, and even researchers to conduct independent audits of the industry’s environmental footprint. The problem extends beyond direct energy use. The complete lifecycle emissions, from mining the raw materials for GPUs to manufacturing and shipping server components across the globe, are rarely incorporated into corporate sustainability reports. Because emissions accounting can always go one level further down the rabbit hole, companies are free to draw the boundary narrowly and present a more favorable picture of their impact. Without standardized, comprehensive reporting, the public is left to rely on corporate assurances, and the true cost of the AI revolution remains hidden in the data centers’ shadows.

The Billion-Dollar Bet: Who’s Fueling the Data Center Boom?

The immense financial and logistical scale of the AI infrastructure boom is driven by a handful of the world’s wealthiest and most powerful corporations. This is the era of the “hyperscalers”—a class of major cloud service providers like Meta, Amazon, Microsoft, and Google, who possess the capital and influence to build data centers on a global scale.

Their investments are staggering. The “Stargate” project alone represents a $500 billion, 10-gigawatt commitment by a consortium of giants including OpenAI, SoftBank, Oracle, and MGX. This illustrates the complex web of partnerships and frenemy-like collaborations defining the industry, where companies compete fiercely while also relying on each other’s technology and infrastructure.

Interestingly, these massive investments are increasingly described not in terms of dollars or the number of GPUs purchased, but in terms of energy capacity: gigawatts. This shift in language reveals the industry’s core assumption: the demand for AI compute power will continue to scale upwards indefinitely, and securing access to massive amounts of energy is the primary bottleneck to future growth. These are not one-time investments but staggered, long-term commitments predicated on a future where AI is woven into every facet of our lives.

These companies have the resources to build bigger and faster than anyone else, and they are getting creative in their pursuit of dominance. They are pioneering new cooling technologies, exploring unconventional locations, and forging direct partnerships with energy producers to secure the power they need. This aggressive build-out is not just about meeting current demand; it’s a strategic land grab for the future, where control over physical infrastructure translates directly into market power and a competitive edge in the AI arms race.

A Political Battleground: The Clash Between Progress and People

The rapid expansion of data centers is not just a technological or environmental issue; it has become a fierce political battleground. The conflict pits the national ambition of building an “American AI empire” against the tangible concerns of local communities who bear the brunt of the industry’s impact.

At the national level, there is strong bipartisan support for bolstering the country’s AI capabilities. Administrations see AI dominance as a matter of economic competitiveness and national security. The Trump administration, for instance, has aligned this goal with support for the fossil fuel industry, advocating for data centers to be powered by oil, gas, and coal. This approach creates a powerful alliance between Big Tech and Big Energy, as a massive expansion of power demand presents a lucrative new market for traditional energy producers.

However, a very different story is unfolding on the ground. Communities are organizing in opposition to new data center projects for a variety of reasons:

- Rising Electricity Rates: The immense energy draw of a new data center can strain the local grid, potentially leading to higher utility bills for all residents.

- Water Consumption: Many data center cooling systems use vast amounts of water, a critical issue in drought-prone regions.

- Noise Pollution: The constant hum of cooling fans and machinery can create significant noise pollution for nearby neighborhoods.

- Environmental Justice: Facilities are often sited in low-income communities or communities of color that already suffer from disproportionate levels of industrial pollution.

A stark example of this conflict occurred in Memphis, where Elon Musk’s xAI installed a series of unpermitted gas turbines to quickly power its new data center. These turbines were located in a majority-Black community already burdened with high rates of asthma and poor air quality. The vocal and organized resistance from local residents brought national attention to the issue, highlighting how the costs of technological progress are often not shared equally. This local opposition is creating unlikely political alliances, with figures as disparate as grassroots environmental activists and conservative politicians like Marjorie Taylor Greene raising alarms about the unchecked growth of data centers.

An Industry on the Brink? Questioning the Sustainability of AI’s Scaling Obsession

For all the billions being invested and the breathless hype surrounding AI’s potential, a critical question looms over the industry: Is this aggressive expansion truly sustainable, or are we witnessing the inflation of a massive economic bubble? The central vulnerability lies in the growing disconnect between the colossal investment in infrastructure and the current level of consumer spending on AI services. While enterprise adoption is growing, revenue from individual users has yet to materialize at a scale that justifies the current spending frenzy.

Furthermore, reports have surfaced that hyperscalers may be using accounting tricks to depress their reported infrastructure spending, which in turn inflates their perceived profits from AI. This combination of speculative investment and opaque financial reporting has many observers worried that the industry’s foundation is less stable than it appears.

The technological premise of endless scaling is also being challenged. The current paradigm is built on the assumption that bigger is always better—that larger models trained on more data will inevitably lead to greater capabilities. However, we may be approaching a point of diminishing returns. The computational cost of training the next generation of frontier models is becoming astronomical, while the incremental gains in performance are shrinking.

This has opened the door for new approaches:

- Smaller, Efficient Models: Researchers are developing smaller, highly specialized models that can perform specific tasks with remarkable accuracy at a fraction of the computational cost. The success of models like DeepSeek from China serves as a reality check, proving that cutting-edge performance doesn’t always require massive scale.

- Novel Chip Designs: The industry is actively exploring new hardware architectures beyond the traditional GPU to perform AI calculations more efficiently.

- Alternative Computing: Paradigm-shifting technologies like quantum computing, while still in their infancy, hold the long-term promise of solving complex problems that are intractable for even the largest supercomputers today.

If these more efficient alternatives gain traction, the tech giants who have bet everything on massive, centralized data centers could find themselves saddled with billions of dollars in fixed, and potentially obsolete, infrastructure.

Navigating the Future: Our Role in the Age of AI

As the AI infrastructure boom continues to reshape our world, it can feel like a force beyond our control. However, as citizens, consumers, and thinkers, we have a vital role to play in shaping a more sustainable and equitable technological future. The path forward requires critical engagement, not passive acceptance.

First, we must become more informed about the hidden systems that power our digital lives. A powerful first step is to learn how your local electric utility works. These entities, whether investor-owned or public, make the decisions that determine where our power comes from and how much it costs. When a data center comes to town, it is the utility that negotiates the terms and manages the impact on the grid and on your bill. By understanding this process and supporting local groups advocating for renewable energy and fair rate structures, you can exert real influence over how your community balances technological growth with public interest.

Second, in an age increasingly dominated by machine-generated content, we must double down on what makes us uniquely human. The rise of AI should be a catalyst for a renewed appreciation of the humanities, critical thinking, and genuine human connection. Spend time away from the screen, read books written by people, watch films, and invest in real-world relationships. Our ability to think critically, create original art, and build empathetic communities is not something AI can replicate. This is not an act of Luddite resistance, but a necessary rebalancing to ensure technology serves humanity, not the other way around.

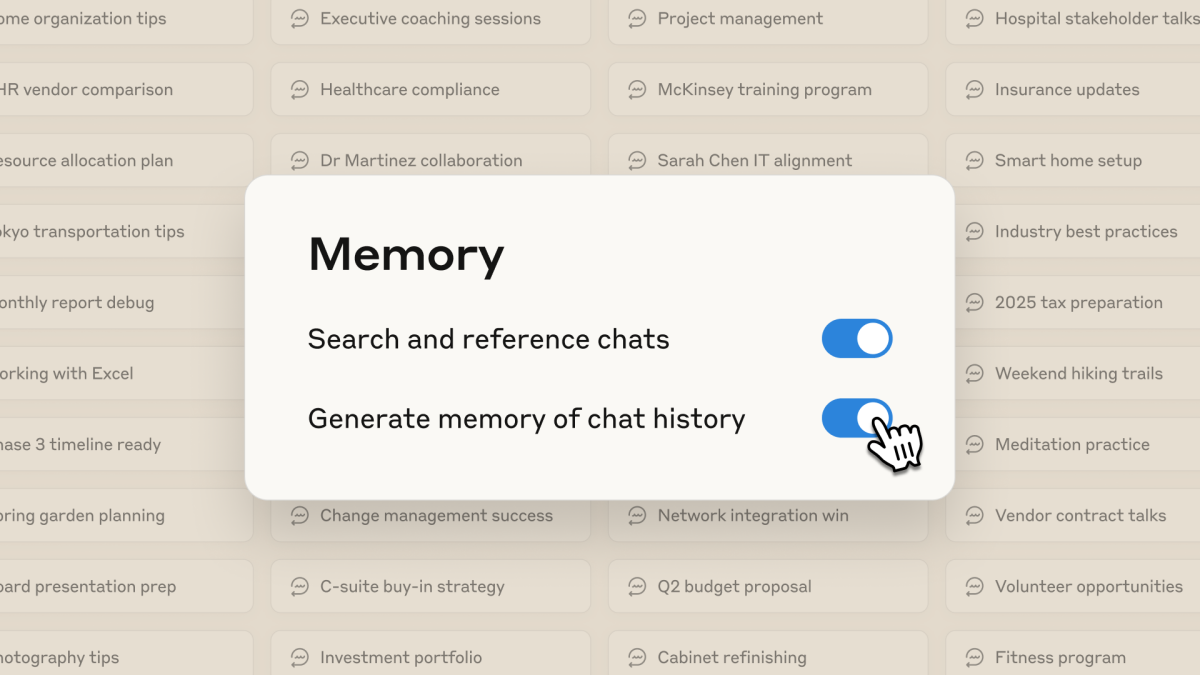

Finally, engage with AI technology, but do so critically and mindfully. To form an informed opinion, you must understand the tools. Experiment with them, and learn their capabilities and their limitations. But resist the urge to use AI indiscriminately. Simple acts, like avoiding superfluous pleasantries such as “thank you” in your prompts, can reduce unnecessary computation. More importantly, push back when AI features are crammed into products where they add no real value. Turning off an unhelpful AI feature is the digital equivalent of turning off the lights when you leave a room: a small act of conservation that, when multiplied, can make a difference. By being deliberate and conscious in our use, we can help steer the industry toward genuine utility rather than growth for its own sake.
