Revolutionizing AI in Development: JetBrains Unveils a New Era of Productivity Benchmarking

The integration of artificial intelligence into the software development lifecycle is no longer a futuristic concept; it is our daily reality. AI-powered coding assistants, from code completion tools to automated debugging agents, have permeated our IDEs and workflows, promising a new golden age of developer productivity. Yet, amidst the excitement and rapid adoption, a critical question looms over every development team and organization: Are these tools really making us more effective? How do we move beyond anecdotal evidence and marketing claims to quantify the true impact of AI on our engineering output?

The current methods for measuring the performance of Large Language Models (LLMs) often feel disconnected from the tangible, complex challenges developers face. We are told a model is superior because it scores well on a synthetic benchmark, but that score rarely translates directly to its ability to refactor a legacy codebase or generate meaningful tests for a complex microservice. This disconnect creates a fog of uncertainty, making it difficult for developers to choose the right tools and for leaders to justify significant investments in AI technology.

In a landmark move to bring clarity to this critical issue, JetBrains has announced the launch of the Developer Productivity AI Arena (DPAI Arena), an open and neutral benchmarking platform designed to measure the real-world impact of AI development tools. This initiative represents a fundamental shift from abstract evaluations to practical, task-based assessments, promising to reshape how we understand, evaluate, and ultimately leverage AI in software engineering.

The Blind Spot in AI Adoption: Why Current Benchmarks Fall Short

To appreciate the significance of the DPAI Arena, we must first understand the inherent flaws in the status quo. For years, the performance of LLMs has been gauged against standardized tests that, while useful in academic circles, fail to capture the nuance and complexity of modern software development. These traditional benchmarks suffer from several critical limitations that render them inadequate for enterprise-level decision-making.

“As AI coding tools advance rapidly, the industry still lacks a neutral, standards-based framework to measure their real impact on developer productivity.”

This statement from JetBrains’ announcement captures the industry’s core challenge. Existing benchmarks are not only insufficient but also potentially misleading. The primary issues can be broken down into three key areas:

- Reliance on Outdated and Irrelevant Datasets: Many popular benchmarks are built on datasets that are years old. The software world, however, evolves at a breakneck pace. A model that excels at solving problems using deprecated libraries or outdated coding patterns offers little value to a team working with the latest frameworks and language features. This is like training a pilot on a propeller plane simulator and then expecting them to fly a modern jetliner; the fundamental skills may be there, but the context is dangerously wrong.

- A Narrow and Homogeneous Technological Scope: The vast majority of public benchmarks are heavily skewed towards a few popular languages, most notably Python and JavaScript. While these are vital parts of the tech ecosystem, they do not represent the full spectrum of technologies used in enterprise environments. A complex, multi-platform application might involve Java, Kotlin, C#, Go, and a host of other languages, each with its own intricate frameworks and build systems. A benchmark that ignores this diversity cannot provide a holistic view of an AI tool’s capabilities.

- Oversimplification of Development Workflows: Perhaps the most significant failing of current benchmarks is their narrow focus on simplistic, isolated tasks. The “issue-to-patch” workflow, in which an AI is given a bug report and expected to generate a fix, is a common testing scenario. However, a developer’s job is far more multifaceted. It involves code review, architectural design, performance optimization, test generation, documentation, and complex refactoring. A tool’s true productivity impact is measured by its ability to assist across this entire spectrum, not just in solving a single, well-defined problem.

This chasm between synthetic testing and real-world application creates an environment where choosing an AI tool feels like a gamble. The DPAI Arena was conceived to bridge this gap, offering a transparent, relevant, and comprehensive standard for evaluation.

Traditional Benchmarks vs. Real-World Developer Needs

| Feature | Traditional LLM Benchmarks | Real-World Developer Needs |
|---|---|---|
| Codebase | Often based on outdated, open-source code repositories. | Involves modern, proprietary, and complex codebases with evolving standards. |
| Tech Stack | Primarily focused on popular languages like Python or JavaScript. | Diverse technology stacks including Java, C#, Kotlin, Go, and multiple frameworks. |
| Task Complexity | Simple, isolated tasks like fixing a single bug (issue-to-patch). | Complex, multi-step workflows: PR reviews, test generation, refactoring, documentation. |
| Evaluation Metric | Code generation accuracy or pass/fail on a unit test. | True productivity impact: time saved, reduction in cognitive load, code quality improvement. |
| Context | Lacks business context and architectural constraints. | Deeply embedded in specific business logic, architectural patterns, and team conventions. |
| Governance | Often proprietary and controlled by the tool vendor. | Requires a neutral, community-governed standard for unbiased comparison. |

Introducing the Developer Productivity AI Arena (DPAI Arena)

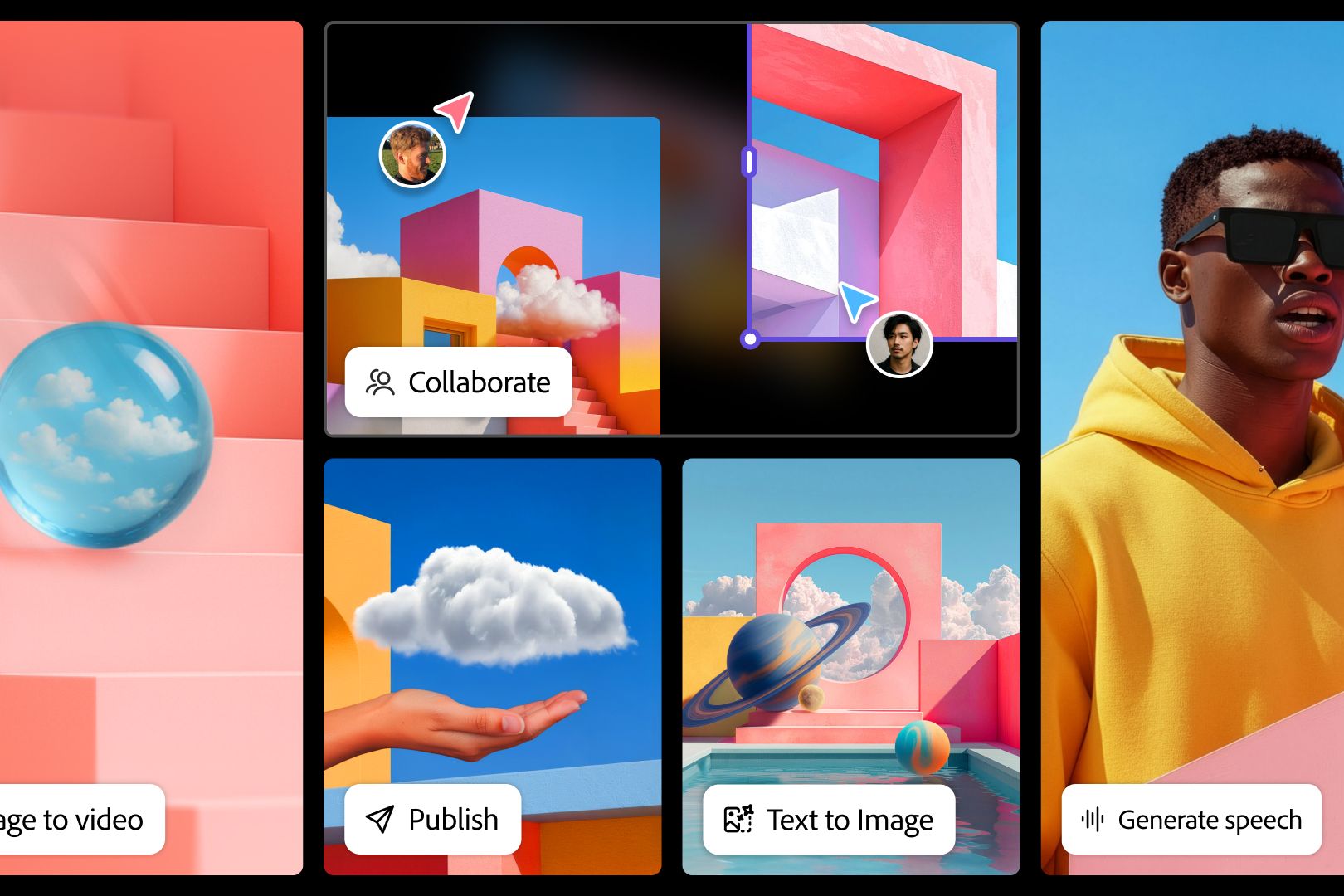

The Developer Productivity AI Arena is not merely another benchmark; it is an extensible platform and an open ecosystem. Its core mission is to shift the focus from abstract model capabilities to tangible developer productivity gains. It achieves this by creating a framework where AI tools are tested against realistic software engineering tasks within modern, relevant technology stacks.

The platform is built on several foundational pillars that directly address the shortcomings of previous approaches:

- Real-World Task Simulation: Instead of isolated coding puzzles, DPAI Arena evaluates AI agents on multi-step tasks that mirror a developer’s daily work: patching bugs, generating test suites, reviewing pull requests, and performing static analysis.

- Flexible, Track-Based Architecture: The platform uses a modular “track” system, where each track represents a specific workflow or technology domain. This allows for highly specialized and reproducible comparisons, enabling users to see how a tool performs on a “bug fixing track” versus a “test generation track.”

- Multi-Language and Framework Support: DPAI Arena is designed to be language-agnostic, supporting a wide array of programming languages and frameworks. This ensures that evaluations are relevant to the diverse technology stacks found in modern enterprises.

- Openness and Extensibility: Recognizing that no single organization can represent the entire software landscape, JetBrains has designed the platform to be open. A “Bring Your Own Dataset” (BYOD) approach empowers contributors to create and share their own domain-specific benchmarks, leveraging the shared infrastructure for standardized evaluation.
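To make the track idea concrete, here is a minimal sketch of how per-track scoring could work. It is not the DPAI Arena API: the `Task`, `Track`, and `evaluate` names, the `toy_agent` stand-in, and the pass-rate metric are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical types for illustration only; not the DPAI Arena API.
Agent = Callable[[str], str]  # takes a task prompt, returns the agent's output


@dataclass
class Task:
    task_id: str
    prompt: str
    check: Callable[[str], bool]  # True if the agent's output is acceptable


@dataclass
class Track:
    name: str
    tasks: List[Task]


def evaluate(agent: Agent, tracks: List[Track]) -> Dict[str, float]:
    """Return the fraction of tasks passed, reported separately per track."""
    scores: Dict[str, float] = {}
    for track in tracks:
        if not track.tasks:
            scores[track.name] = 0.0  # an empty track scores zero by convention
            continue
        passed = sum(1 for task in track.tasks if task.check(agent(task.prompt)))
        scores[track.name] = passed / len(track.tasks)
    return scores


def toy_agent(prompt: str) -> str:
    # Stand-in for a real AI tool: it only "handles" prompts that mention a bug.
    return "patch" if "bug" in prompt else ""


tracks = [
    Track("bug-fixing", [
        Task("bf-1", "fix bug in parser", lambda out: out == "patch"),
        Task("bf-2", "fix bug in cache", lambda out: out == "patch"),
    ]),
    Track("test-generation", [
        Task("tg-1", "write tests for parser", lambda out: out.startswith("def test_")),
    ]),
]

results = evaluate(toy_agent, tracks)
print(results)
```

Reporting one score per track, rather than a single aggregate, is what lets a reader see that a tool may do well on bug fixing while doing poorly on test generation.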

A Deeper Dive into the DPAI Arena’s Architecture

The power of the DPAI Arena lies in its thoughtful design, which prioritizes relevance, flexibility, and community collaboration. Let’s explore the key components that make this platform a game-changer.

The “Bring Your Own Dataset” (BYOD) Revolution

This feature is arguably one of the most transformative aspects of the DPAI Arena. It allows companies, open-source projects, and technology vendors to create benchmarks using their own codebases. For an enterprise, this means they can evaluate an AI coding assistant’s performance on their own proprietary, complex systems before committing to a large-scale rollout.

Imagine a financial institution wanting to see how well an AI tool can navigate its decades-old COBOL and Java codebase, or a gaming company testing an agent’s ability to optimize C++ graphics shaders. BYOD moves the evaluation from a generic sandbox to the specific environment where the tool will be deployed, providing an unparalleled level of accuracy in predicting its real-world value and return on investment.
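As a rough illustration of what contributing a dataset might involve, the sketch below validates a hypothetical benchmark manifest before submission. The manifest fields (`name`, `language`, `repository`, `commit`, `tasks`), the example values, and the `validate_manifest` helper are assumptions; the announcement does not describe the actual BYOD format.

```python
from typing import Any, Dict, List

# Hypothetical manifest schema; the real BYOD format may differ entirely.
REQUIRED_FIELDS = ("name", "language", "repository", "commit", "tasks")
REQUIRED_TASK_KEYS = ("id", "track", "description")


def validate_manifest(manifest: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors: List[str] = []
    for field in REQUIRED_FIELDS:
        if field not in manifest:
            errors.append(f"missing field: {field}")
    tasks = manifest.get("tasks", [])
    if "tasks" in manifest and not tasks:
        errors.append("tasks must not be empty")
    for index, task in enumerate(tasks):
        for key in REQUIRED_TASK_KEYS:
            if key not in task:
                errors.append(f"task {index}: missing {key}")
    return errors


# Example manifest with placeholder values (not a real repository or commit).
manifest = {
    "name": "legacy-billing",
    "language": "Java",
    "repository": "https://example.com/acme/billing.git",
    "commit": "0123abc",  # pinning a commit keeps runs reproducible
    "tasks": [
        {"id": "t1", "track": "bug-fixing", "description": "Fix rounding in invoices"},
        {"id": "t2", "track": "test-generation"},  # description left out on purpose
    ],
}

problems = validate_manifest(manifest)
print(problems)
```

Checking a contribution up front like this is one way a shared infrastructure could keep community datasets comparable, whatever the real schema turns out to be.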

The Foundational Spring Benchmark

To kickstart the initiative and provide a blueprint for future contributions, JetBrains has created the first official benchmark for the platform: the Spring Benchmark. This serves as the technical standard, demonstrating how to construct a high-quality, comprehensive benchmark that tests an AI’s ability to work within the popular and complex Spring Framework. It sets a high bar for future contributions, ensuring that benchmarks for other ecosystems—like Django, Ruby on Rails, React, or .NET—will be equally rigorous and valuable.

Governance and Community: Forging a Trusted Industry Standard

A platform of this magnitude cannot succeed if it is perceived as being controlled by a single vendor. To ensure neutrality, transparency, and long-term viability, JetBrains has made a crucial decision regarding the platform’s governance.

A New Home at the Linux Foundation

JetBrains has announced its intention to contribute the DPAI Arena to the Linux Foundation, one of the most respected stewards of open-source technology in the world. This move is a strong signal of commitment to open governance. By placing the platform under the umbrella of the Linux Foundation, JetBrains aims to ensure that it will be guided by the interests of the entire community, not the commercial ambitions of one company.

The Technical Steering Committee (TSC)

Overseeing the platform’s evolution will be a Technical Steering Committee (TSC). This governing body will be responsible for key decisions, including:

- Platform Development: Guiding the technical roadmap and architecture of the DPAI Arena itself.

- Dataset Governance: Establishing and enforcing quality standards for all contributed benchmarks and datasets.

- Community Contributions: Reviewing and approving new tracks and benchmarks submitted by the community.

This structure ensures that the DPAI Arena remains a fair, inclusive, and technically excellent resource that the entire industry can trust.

The Ripple Effect: Who Benefits from the DPAI Arena?

The launch of the DPAI Arena is poised to have a profound and positive impact across the entire software development ecosystem. Its value extends far beyond a simple leaderboard of AI models, creating a virtuous cycle of improvement and transparency that benefits everyone.

Benefits for Key Stakeholders

| Stakeholder | Key Benefits |
|---|---|
| Individual Developers | Transparent Insights: Access to unbiased, data-driven comparisons of AI tools. Informed Choices: Ability to select tools proven to be effective for their specific tech stack and workflow. Focus on Value: Move beyond marketing hype to understand what truly boosts personal productivity. |
| Enterprise Leaders | Data-Driven ROI: A reliable method to evaluate and justify investments in AI technology. Reduced Procurement Risk: Test tools against internal, proprietary codebases before making large-scale commitments. Strategic Adoption: Make informed decisions about which tools to deploy to which teams for maximum impact. |
| AI Tool Providers | Neutral Proving Ground: A credible, third-party platform to benchmark and validate their tools on real-world tasks. Actionable Feedback: Detailed performance data reveals strengths and weaknesses, guiding model refinement. Competitive Differentiation: Showcase superior performance on complex, domain-specific tasks. |
| Technology Vendors | Ecosystem Enablement: Ensure their frameworks and platforms are well-supported by leading AI tools. Promote Best Practices: Contribute benchmarks that encourage AI models to generate idiomatic and high-quality code. Community Engagement: Strengthen their ecosystem by providing valuable resources for AI-assisted development. |

The Future of AI-Assisted Development is Measurable

The era of ambiguity surrounding AI’s role in software development is drawing to a close. Vague promises of “10x productivity” are no longer sufficient. The industry has matured to a point where rigorous, transparent, and relevant measurement is not just a luxury but a necessity. The Developer Productivity AI Arena is the right platform at the right time, providing the critical infrastructure needed for this next phase of evolution.

By establishing a neutral, community-driven standard, JetBrains is fostering a collaborative environment where AI tool providers can refine their offerings, enterprises can make intelligent investments, and developers can confidently choose the tools that will genuinely enhance their craft. This is more than just a benchmarking platform; it is a foundational piece of the future of software development—a future where the impact of artificial intelligence is not just claimed, but proven.
