Software Engineering Foundations for the AI-Native Era

The world of software development is undergoing a seismic shift. Artificial Intelligence is no longer a futuristic concept discussed in research papers; it is a pervasive, powerful force actively reshaping how we design, build, and deploy software. Developers are transitioning from being sole authors of code to becoming co-creators, collaborating with AI partners to accelerate innovation. However, simply handing AI tools to engineering teams is not enough. To truly harness this revolution, enterprises must invest in the foundational pillars that support an AI-native future.

According to a recent Gartner survey, organizations that equip their teams with the right AI technologies can unlock substantial productivity gains:

Software engineering leaders who equip their teams with the right AI technologies can achieve productivity improvements of more than 25%.

The stakes are incredibly high. Software engineering leaders who neglect to build these foundational layers risk condemning their companies to irrelevance. While they struggle with legacy systems and outdated practices, faster, AI-enabled rivals will seize innovation, capture revenue, and dominate the market.

To avoid this fate and set their teams up for unprecedented success, leaders must focus on five critical foundational practices. These pillars are not just technical upgrades; they represent a holistic transformation of technology, process, and culture.

1. Platform Engineering: The Backbone of AI-Powered Development

In the AI-native era, the ad-hoc adoption of tools is a recipe for chaos, inefficiency, and security risks. The solution is a robust platform engineering strategy that provides developers with a standardized, secure, and efficient way to leverage AI. This approach creates a centralized backbone for all AI-driven development, ensuring consistency and scale across the organization.

A mature platform engineering practice focuses on three key areas:

Building “Paved Roads” for AI Tools

The concept of a “paved road” is simple yet powerful: create a well-defined, easy-to-follow path for developers. Instead of letting every team research, vet, and implement their own AI tools, a platform team provisions a curated set of approved technologies for the entire software development life cycle (SDLC). This removes the immense cognitive load from individual developers, allowing them to focus on creating value.

These paved roads come with built-in guardrails that enforce best practices for:

- Quality: Automated code analysis for AI-generated suggestions.

- Cost: Centralized monitoring of API usage for expensive models.

- Reliability: Standardized deployment patterns for AI-infused services.

- Security: Pre-configured access controls and data sanitization to prevent leaks.
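
As an illustration of what such guardrails might look like in code, here is a minimal sketch covering two of them: a security check that masks email addresses before a prompt leaves the network, and a cost check that tracks spend against a budget. The names `sanitize_prompt` and `CostGuardrail`, the email pattern, and the budget figures are all assumptions made for this example, not part of any particular platform.

```python
import re
from dataclasses import dataclass

# Hypothetical pattern: matches strings that look like email addresses.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def sanitize_prompt(prompt: str) -> str:
    """Security guardrail: mask email addresses before the prompt is sent out."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", prompt)


@dataclass
class CostGuardrail:
    """Cost guardrail: tracks spend against a monthly budget (USD)."""
    monthly_budget_usd: float
    spent_usd: float = 0.0

    def record(self, cost_usd: float) -> bool:
        """Record a call's cost; return False (and record nothing) if over budget."""
        if self.spent_usd + cost_usd > self.monthly_budget_usd:
            return False
        self.spent_usd += cost_usd
        return True
```

A platform team could wrap every outbound model call in checks like these, so that individual developers get the protection without having to think about it.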

Operationalizing AI Models and Agents (ModelOps & AgentOps)

As AI becomes integral to applications, managing the lifecycle of models and agents becomes a critical operational challenge. This is where ModelOps and AgentOps come in.

- ModelOps (Model Operationalization): This discipline is essentially DevOps for machine learning. The platform must provide tools and processes for the entire lifecycle of ML models, including Large Language Models (LLMs): deployment, monitoring, versioning, and governance. This ensures that models are not just built, but are also maintained, secured, and updated according to enterprise standards. The platform can also manage prompt engineering, providing curated prompt templates to tailor AI responses to the specific context of the business.

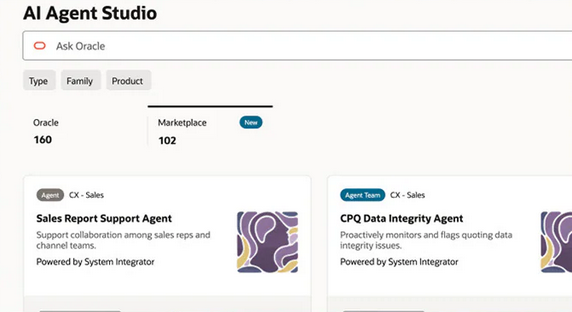

- AgentOps (Agent Engineering and Operations): With the rise of autonomous AI agents, a new operational layer is required. AgentOps focuses on orchestrating, managing, and monitoring these agents. The platform needs to provide the infrastructure to run agents reliably, track their performance, and ensure they operate within safe, predefined boundaries.
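
To make "safe, predefined boundaries" more concrete, the sketch below shows one possible shape for an agent runner that only executes allowlisted tools, enforces a step budget, and keeps an audit log. `AgentRunner`, its fields, and the tool names are hypothetical and chosen for illustration only; a real AgentOps layer would involve far more.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentRunner:
    """Runs an agent's planned tool calls inside predefined boundaries."""
    allowed_tools: dict[str, Callable[[str], str]]  # allowlist of tools
    max_steps: int = 5                              # step budget per run
    audit_log: list[str] = field(default_factory=list)

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results = []
        for step, (tool_name, arg) in enumerate(plan):
            if step >= self.max_steps:
                self.audit_log.append("stopped: step budget exhausted")
                break
            tool = self.allowed_tools.get(tool_name)
            if tool is None:
                # Tool is not on the allowlist: record it and skip the call.
                self.audit_log.append(f"blocked: {tool_name}")
                continue
            self.audit_log.append(f"ran: {tool_name}")
            results.append(tool(arg))
        return results
```

The audit log is what makes monitoring possible: every executed, blocked, or cut-off step leaves a trace that the platform can inspect.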

Enabling AI Capabilities Through an Internal Developer Platform (IDP)

To remain competitive, businesses must infuse their products with AI capabilities. An Internal Developer Platform (IDP) is the vehicle for making this happen securely and at scale. The IDP should offer a catalog of services that developers can easily integrate into their applications. This includes:

- Templates & SDKs: Pre-built components for common AI features like chatbots, recommendation engines, or intelligent search.

- APIs: Secure and well-documented Application Programming Interfaces that expose curated AI models and data sources.

- Guidance & Training: Comprehensive documentation and support to help developers effectively use the platform’s AI capabilities.

By providing these resources, an IDP democratizes AI development, empowering any developer in the organization to innovate rapidly while adhering to centrally managed standards.
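
One small example of a catalog entry is a curated, versioned prompt template. The sketch below uses Python's standard `string.Template`; the catalog layout, the `support_reply` template, and the `render_prompt` helper are assumptions made for illustration.

```python
from string import Template

# Hypothetical catalog: template name -> version -> prompt template.
PROMPT_CATALOG = {
    "support_reply": {
        "v1": Template(
            "You are a support agent for $product. Answer politely: $question"
        ),
    },
}


def render_prompt(name: str, version: str, **fields: str) -> str:
    """Fill a curated template; raises KeyError if a required field is missing."""
    template = PROMPT_CATALOG[name][version]
    return template.substitute(**fields)
```

Pinning a version lets teams adopt an improved template deliberately rather than having prompts change underneath them.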

2. Integration and Composability: Building with Intelligent Blocks

The fundamental nature of coding is changing. As AI handles more of the line-by-line code generation, developers are elevated to the role of architects and integrators. Their primary task becomes composing complex applications by “stitching together” smaller, independent, and intelligent components. This modern paradigm is only possible with an architectural foundation built on integration and composability.

To prepare for this future, leaders must champion a shift towards an API-first, composable architecture. This means moving away from monolithic systems and towards a landscape of loosely coupled microservices and components that communicate through well-defined APIs.

The Power of Composable Architecture

A composable architecture treats business capabilities as interchangeable building blocks. Each block, or service, is self-contained and exposes its functionality through an API. This “Lego-like” approach provides immense agility. When AI agents or developers need to create a new workflow or application, they don’t have to build everything from scratch. Instead, they can discover and assemble existing components, dramatically reducing development time and effort.
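
The building-block idea can be sketched in a few lines. In this simplified, in-process example, each capability registers itself under a name and a workflow is assembled by listing the names in order; in practice each block would sit behind its own API. The registry, the capability names, and the flat 25% tax rate are hypothetical.

```python
from typing import Callable

Capability = Callable[[dict], dict]

# Hypothetical registry of business capabilities, keyed by name.
REGISTRY: dict[str, Capability] = {}


def capability(name: str) -> Callable[[Capability], Capability]:
    """Decorator that registers a function as a discoverable building block."""
    def decorator(fn: Capability) -> Capability:
        REGISTRY[name] = fn
        return fn
    return decorator


@capability("pricing.quote")
def quote(order: dict) -> dict:
    return {**order, "total": order["quantity"] * order["unit_price"]}


@capability("tax.apply")
def apply_tax(order: dict) -> dict:
    # Assumed flat 25% rate, purely for illustration.
    return {**order, "total": order["total"] * 1.25}


def compose(*names: str) -> Capability:
    """Assemble a workflow from existing capabilities, applied in order."""
    def workflow(payload: dict) -> dict:
        for name in names:
            payload = REGISTRY[name](payload)
        return payload
    return workflow
```

A new checkout flow is then just `compose("pricing.quote", "tax.apply")`, with no new pricing or tax logic written.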

APIs as the Lingua Franca for AI

In this new world, APIs are not just a technical detail; they are the essential connective tissue that allows AI to interact with the enterprise. AI agents rely on a rich ecosystem of APIs to:

- Consume Data: Access real-time information from various systems.

- Analyze Information: Send data to specialized models for processing.

- Act on Data: Trigger actions in other applications, such as creating a support ticket or updating a customer record.

A strong integration strategy is therefore non-negotiable. This involves implementing robust API management tools, ensuring clear and consistent API design patterns, and creating rich metadata that makes APIs easily discoverable by both humans and AI agents.
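
As a rough illustration of discoverability metadata, the sketch below describes each API with a small descriptor and lets a caller, human or agent, look up APIs by tag. The `ApiDescriptor` fields, the catalog entries, and the tag vocabulary are assumptions for this example rather than any standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiDescriptor:
    """Machine-readable metadata describing one API operation."""
    name: str
    description: str
    method: str
    path: str
    tags: tuple[str, ...]


# Hypothetical catalog entries.
CATALOG = [
    ApiDescriptor("create_ticket", "Create a support ticket",
                  "POST", "/tickets", ("support", "act")),
    ApiDescriptor("get_customer", "Fetch a customer record",
                  "GET", "/customers/{id}", ("crm", "consume")),
]


def discover(tag: str) -> list[str]:
    """Return the names of all catalogued APIs carrying the given tag."""
    return [api.name for api in CATALOG if tag in api.tags]
```

An agent looking for a way to act on data could call `discover("act")` and receive the ticket-creation API without any hard-coded knowledge of it.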

3. AI-Ready Data: The Fuel for Intelligent Systems

The most sophisticated AI model in the world is useless without high-quality, relevant data. Generative AI delivers its greatest value when an LLM is paired with context-specific enterprise data. A common technique for doing so is Retrieval-Augmented Generation (RAG), in which relevant enterprise content is retrieved at query time and supplied to the model as context. However, in most organizations this data is messy, siloed, and far from ready for AI consumption. Preparing it is one of the most urgent and critical tasks for any organization serious about AI.

AI-ready data is not just about having data; it’s about having data that is clean, organized, accessible, and contextually rich. Software engineering leaders must collaborate with data teams to build the architecture and processes necessary to deliver this.
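
To show why clean, accessible data matters for RAG, here is a deliberately simplified retrieval sketch. It ranks documents by word overlap with the question and places the best match into the prompt. Production systems typically use embeddings and a vector index instead; the scoring method and function names here are stand-ins chosen for brevity.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase and split on whitespace (a crude stand-in for real tokenization)."""
    return set(text.lower().split())


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(q_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Assemble the augmented prompt: retrieved context first, then the question."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

If the underlying documents are stale, duplicated, or locked in a silo, the retrieval step has nothing good to return, and the model's answer suffers accordingly.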

The Role of Model Context Protocol (MCP)

An emerging standard called Model Context Protocol (MCP) is poised to become a cornerstone of AI-native systems. MCP is designed to create a seamless and standardized way for AI models, especially LLMs, to connect with external data sources, APIs, and tools. Think of it as the universal translator and plumbing system that allows an AI model to safely and efficiently access the vast knowledge stored within an enterprise’s databases and applications.
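
For a sense of what this looks like on the wire: MCP messages follow JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request as JSON and does not open a connection. The tool name `lookup_customer` and its arguments are hypothetical, and the helper `build_tool_call` is not part of any SDK.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to invoke a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call(1, "lookup_customer", {"customer_id": "C-1001"})
```

Because every tool is invoked through the same message shape, a model can work with a newly added data source without bespoke integration code.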

Combining Data Mesh and Data Fabric

To manage enterprise data at scale, a modern architecture that combines two powerful concepts is needed: Data Fabric and Data Mesh.

- Data Fabric: This is the foundational technology layer. It acts as a virtual, unified data plane that abstracts away the complexity of the underlying data sources. A data fabric provides the tools for data integration, governance, and cataloging, making it easier to connect to and manage data regardless of where it resides.

- Data Mesh: This is an organizational and architectural approach that complements the data fabric. It treats data as a product, with clear ownership assigned to specific business domains. Instead of a centralized data team becoming a bottleneck, domain teams are empowered to own, clean, and serve their data products via the data fabric.

By combining these two approaches, organizations can create a scalable and resilient data ecosystem where high-quality, AI-ready data is treated as a first-class citizen.
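
The division of responsibilities can be sketched as follows: domain teams define and own data products (the mesh), and publish them into a shared catalog that handles discovery and enforces a basic governance rule (the fabric). The class names, fields, and the quality-check rule are assumptions made for this illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataProduct:
    """A data product owned by a business domain (the data mesh side)."""
    name: str
    owner_domain: str
    location: str
    quality_checked: bool


@dataclass
class FabricCatalog:
    """A unified catalog across sources (the data fabric side)."""
    products: dict[str, DataProduct] = field(default_factory=dict)

    def publish(self, product: DataProduct) -> None:
        # Governance rule (assumed): only quality-checked products are listed.
        if not product.quality_checked:
            raise ValueError(f"{product.name} has not passed quality checks")
        self.products[product.name] = product

    def find_by_domain(self, domain: str) -> list[str]:
        """List the names of products owned by a domain, in alphabetical order."""
        return sorted(
            p.name for p in self.products.values() if p.owner_domain == domain
        )
```

The catalog never needs to know how a domain produced its data, only that the product meets the shared standard and where it lives.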

4. Rapid Software Development: Accelerating Innovation with AI

AI introduces a supercharger into the engine of software development. It can generate code, write unit tests, and create documentation in seconds. However, this newfound speed can break traditional, slow-moving development processes. To truly capitalize on the productivity gains from AI, organizations must adopt and refine rapid, iterative software development practices like Agile, DevSecOps, and a product-centric operating model.

The focus must shift from optimizing individual tasks to optimizing the entire value stream.

Evolving Agile and Product-Centric Practices

The principles of Agile—iteration, customer feedback, and adaptability—are more important than ever. With AI accelerating the “build” phase, teams need equally fast feedback loops. This means strengthening the connection to the customer and embracing a product-centric model where engineering teams have deep ownership and understanding of the business value they are delivering.

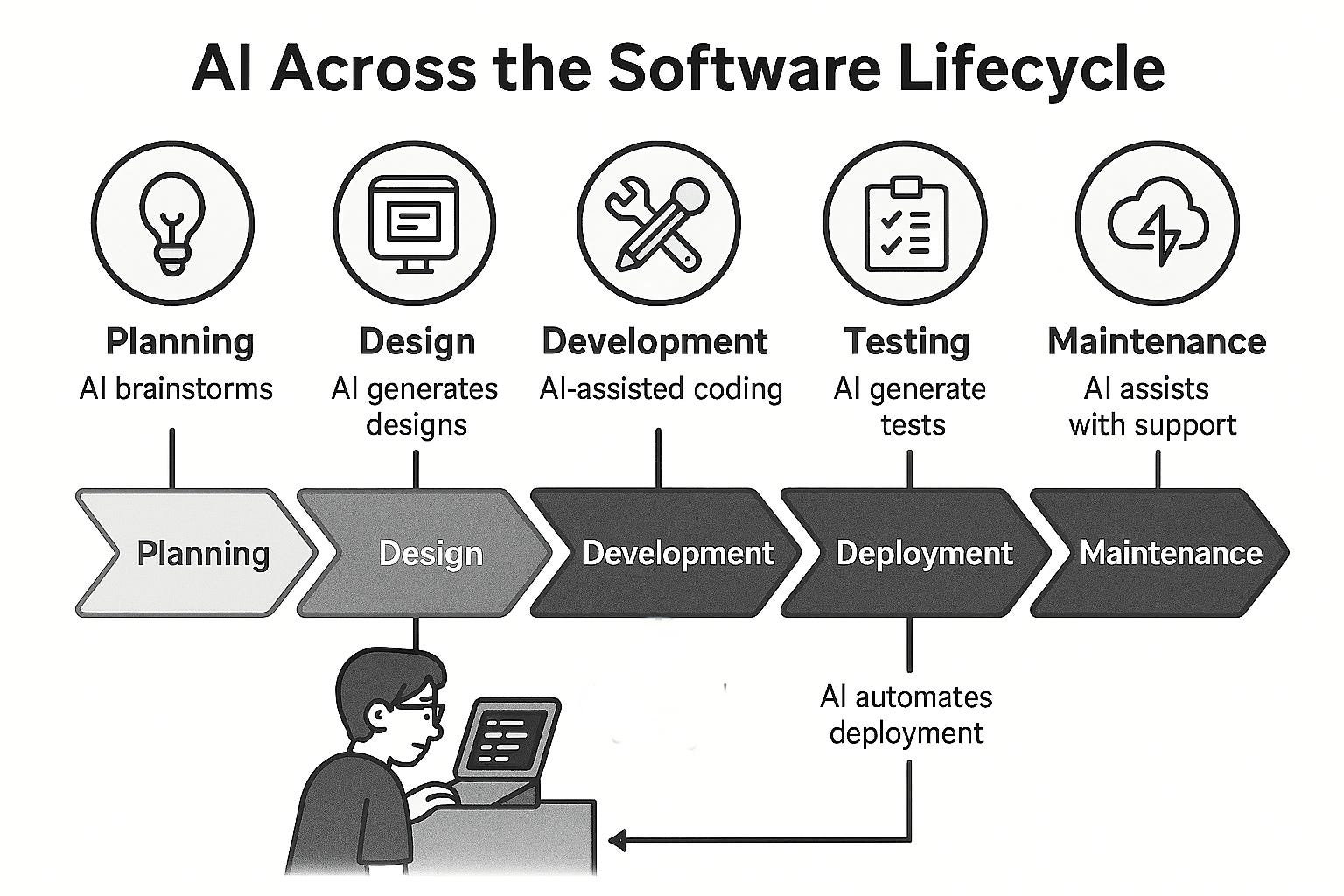

The Transformation of the SDLC with AI

| SDLC Phase | Traditional Approach |
|---|---|
| Ideation | Manual brainstorming sessions, slow market research. |
| Design/Prototyping | Manual wireframing, mockups created over days. |
| Development | Manual line-by-line coding, boilerplate generation. |
| Testing | Manual test case writing, tedious regression checks. |
| Deployment | Manual deployment scripts, complex release coordination. |
| Monitoring/Feedback | Manual log analysis, delayed customer feedback collection. |

Measuring What Matters: Idea Lead Time

The ultimate metric for success in the AI-native era is idea lead time: the total time elapsed from the conception of an idea to its deployment in production where it can generate customer feedback and value. Engineering leaders must challenge their teams to measure and relentlessly improve this metric. AI is not just about writing code faster; it’s about shrinking the entire innovation cycle, enabling the business to test ideas, learn from the market, and pivot more quickly than ever before.
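
Measuring idea lead time requires only two timestamps per idea: when it was conceived and when it reached production. A minimal sketch follows; the dates are made-up sample data, and using the median as the summary statistic is a choice made for this example.

```python
from datetime import datetime
from statistics import median


def idea_lead_time_days(conceived: datetime, deployed: datetime) -> float:
    """Elapsed time from idea conception to production deployment, in days."""
    return (deployed - conceived).total_seconds() / 86400


# Hypothetical sample data: (conceived, deployed) pairs.
ideas = [
    (datetime(2024, 1, 1), datetime(2024, 1, 15)),
    (datetime(2024, 2, 1), datetime(2024, 3, 2)),
    (datetime(2024, 3, 4), datetime(2024, 3, 11)),
]

lead_times = [idea_lead_time_days(start, end) for start, end in ideas]
median_lead_time = median(lead_times)
```

Tracking the median over time, rather than a single average, keeps one unusually slow initiative from hiding a real improvement.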

5. A Culture of Innovation: Empowering Teams to Experiment

The final and most crucial foundation is not technological but cultural. All the advanced platforms, composable architectures, and clean data will amount to nothing if the engineering team is risk-averse and afraid to experiment. AI is a new frontier, and navigating it requires a culture of curiosity, psychological safety, and a relentless drive for innovation.

Leaders are responsible for cultivating this environment. It starts with creating a compelling vision that inspires teams to embrace the changes brought by AI, framing it as an opportunity for growth and impact rather than a threat.

Fostering Psychological Safety

Psychological safety is the bedrock of innovation. It is the shared belief that team members can take risks without fear of punishment or humiliation. In an AI-driven context, this means:

- Engineers can experiment with a new AI coding assistant and admit when it produces flawed output without being blamed.

- Teams can try a novel approach to building an AI-powered feature and have it fail, with the failure being treated as a valuable learning opportunity.

- Individuals can voice concerns about the ethical implications of an AI model or ask “stupid” questions without fear of judgment.

Without this safety net, developers will retreat to familiar, less risky methods, and the transformative potential of AI will remain untapped.

Incentivizing Innovative Behavior

To make innovation a reality, it must be actively encouraged and rewarded. Leaders can implement several concrete strategies:

- Establish Exploration Teams: Create small, dedicated teams tasked with rapidly prototyping new ideas in key business areas using lean startup methodologies and cutting-edge AI tools.

- Provide Dedicated Innovation Time: Allocate a certain percentage of developers’ time (e.g., one day every two weeks) for unstructured exploration and experimentation with new AI technologies.

- Reward the Process, Not Just the Outcome: Recognize and reward teams for smart risk-taking, insightful experiments (even failed ones), and sharing their learnings with the broader organization.

Software engineers will only invest their time and energy in innovation if it is explicitly valued and championed by leadership as a core objective.

The transition to an AI-native organization is a journey, not a destination. By building these five foundational pillars—platform engineering, composability, AI-ready data, rapid development practices, and a culture of innovation—leaders can equip their teams not just to survive the AI revolution, but to lead it.
