The “Garbage In, Garbage Out” Dilemma: How Sonar is Revolutionizing AI’s Coding Education

The rise of Large Language Models (LLMs) has fundamentally reshaped the landscape of software development. These AI assistants promise to boost developer productivity, automate mundane tasks, and even help architect complex systems. However, a critical and often-overlooked weakness lies at the heart of their creation: the quality of their training data. A new offering from code quality specialist Sonar aims to address this foundational problem, with the goal of making AI-generated code more secure and reliable.

LLMs designed for coding are voracious learners, trained on vast oceans of publicly available, open-source code. This data provides the breadth and diversity needed to understand countless programming languages, frameworks, and paradigms. But this same data is a double-edged sword. Public repositories are rife with security vulnerabilities, hidden bugs, and outdated coding practices. When an LLM learns from this flawed material, it doesn’t just absorb the good; it internalizes the bad, amplifying these imperfections on a massive scale.

The principle is simple: garbage in, garbage out. Even a small fraction of flawed training data can disproportionately degrade a model’s output, leading it to generate code that is buggy, insecure, or inefficient. This creates a dangerous paradox: the very tools meant to accelerate development can introduce a new wave of technical debt and security risk. Sonar’s latest announcement tackles this challenge by cleaning the data at the source, so that AI coding assistants learn from higher-quality examples.

The Hidden Flaw in AI’s Digital DNA

To appreciate the magnitude of the problem, consider the data that fuels today’s coding LLMs. These models are trained on enormous volumes of code collected from public platforms such as GitHub. While these repositories are monuments to collaborative innovation, they are also living archives of human error. They contain:

- Security Vulnerabilities: From common mistakes like SQL injection and cross-site scripting to more subtle cryptographic weaknesses, public code is a catalog of security flaws.

- Bugs and Logic Errors: The code in these repositories often includes everything from minor bugs to critical logic flaws that could cause system crashes or data corruption.

- Anti-Patterns and Bad Practices: Many projects contain deprecated functions, inefficient algorithms, or “code smells” that violate modern best practices, contributing to technical debt.

- Inconsistent Quality: The quality of open-source code varies dramatically from one project to another, ranging from enterprise-grade software to abandoned weekend projects.

When an LLM is trained on this unfiltered dataset, it doesn’t discern between good and bad. It simply learns patterns. If a particular security vulnerability appears thousands of times across different projects, the model learns this flawed pattern as a valid way to write code. This leads to a dangerous phenomenon of “flaw amplification.” The model doesn’t just replicate a specific bug; it learns the underlying incorrect reasoning and can then apply it to generate entirely new, yet similarly flawed, code in different contexts.
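To make the idea concrete, consider SQL injection, one of the flaws listed above. The sketch below uses Python’s standard `sqlite3` module to contrast the string-concatenation pattern that is common in public code with the parameterized form a well-trained model should prefer. The table, data, and function names are hypothetical illustrations, not examples taken from Sonar’s materials.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # In-memory database with a hypothetical `users` table for the demo.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Flawed pattern: user input is spliced directly into the SQL text.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str):
    # Parameterized query: the driver passes `name` as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # returns every row in the table
    print(find_user_safe(conn, payload))    # []
```

A model that has seen the first pattern thousands of times has no built-in reason to prefer the second; that preference has to come from the data it learns from.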

The consequences for development teams are severe. Developers who rely on these LLMs may unknowingly accept insecure or buggy code into their applications. This not only negates the promised productivity gains but actively increases risk. The time saved writing the initial code can be lost many times over in extended debugging sessions, security audits, and patches for vulnerabilities discovered in production. Trust between developers and their AI assistants erodes, forcing a meticulous, line-by-line review of every suggestion and defeating the purpose of a seamless collaborative tool.

Enter SonarSweep: A Proactive Solution for Data Purity

Recognizing that the most effective place to solve this problem is at its inception, Sonar has introduced SonarSweep, now in early access. Rather than reactively fixing flawed AI-generated code, SonarSweep proactively cleans the training data itself, with the aim of ensuring that LLMs learn from high-quality, secure, and well-structured code examples from the very beginning.

SonarSweep operates through a sophisticated, multi-stage process designed to meticulously cleanse and optimize massive code datasets.

- Deep Static Analysis: The process begins by analyzing the entire training dataset. Leveraging Sonar’s deep expertise in code quality and security, SonarSweep identifies a wide range of issues, including critical security vulnerabilities, bugs that could lead to runtime errors, and code smells that indicate poor design. This goes far beyond simple syntax checking; it’s a comprehensive health check of the code’s integrity.

- Intelligent Filtering and Remediation: Once issues are identified, SonarSweep applies a strict and intelligent filtering process. It automatically removes low-quality code that is beyond repair or poses a significant risk. Crucially, it can also perform automated remediation, fixing common bugs and vulnerabilities directly within the dataset. This dual approach not only purges the bad examples but also transforms mediocre code into valuable, high-quality learning material, enriching the dataset.

- Strategic Dataset Rebalancing: Simply removing flawed code is not enough; it could unintentionally skew the dataset. For instance, if many of the removed samples came from older PHP projects, the model might become less proficient in that language. SonarSweep addresses this with a final rebalancing step that keeps the curated dataset’s distribution of programming languages, frameworks, and architectural patterns diverse and representative, so that the resulting LLM is both well-behaved and broadly knowledgeable.
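Sonar has not published SonarSweep’s internals, so the following is only a minimal sketch of the three stages under stated assumptions. It assumes a hypothetical static analyzer has already attached a list of `issues` to each sample and, where it could, an auto-remediated `fixed` version. Filtering drops samples that are flawed and unfixable, remediation swaps in fixed code, and rebalancing uses one simple strategy (stratified downsampling) to restore each language’s original share of the dataset. All names here are illustrative.

```python
import math
import random
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from fractions import Fraction
from typing import List, Optional


@dataclass
class Sample:
    language: str
    code: str
    # Hypothetical analyzer output: issue labels and, if available, a fixed version.
    issues: List[str] = field(default_factory=list)
    fixed: Optional[str] = None


def filter_and_remediate(samples: List[Sample]) -> List[Sample]:
    """Keep clean samples, swap in fixes where available, drop the rest."""
    kept = []
    for s in samples:
        if not s.issues:
            kept.append(s)  # clean: keep unchanged
        elif s.fixed is not None:
            kept.append(Sample(s.language, s.fixed))  # remediated copy, no open issues
        # otherwise flawed and unfixable: dropped
    return kept


def rebalance(original: List[Sample], kept: List[Sample], seed: int = 0) -> List[Sample]:
    """Downsample so each language regains its share of the original dataset."""
    orig_counts = Counter(s.language for s in original)
    total = sum(orig_counts.values())
    share = {lang: Fraction(n, total) for lang, n in orig_counts.items()}  # exact ratios
    by_lang = defaultdict(list)
    for s in kept:
        by_lang[s.language].append(s)
    # Largest dataset size at which every language can still fill its share.
    # (If a language lost all its samples, this is 0 and the result is empty.)
    target_total = min(len(by_lang[lang]) / p for lang, p in share.items())
    rng = random.Random(seed)
    balanced = []
    for lang, p in share.items():
        balanced.extend(rng.sample(by_lang[lang], math.floor(target_total * p)))
    return balanced


if __name__ == "__main__":
    original = [Sample("python", f"py{i}") for i in range(6)] + [
        Sample("php", "php0"),
        Sample("php", "php1", ["sql-injection"], "php1_fixed"),  # fixable
        Sample("php", "php2", ["sql-injection"]),  # unfixable: dropped
        Sample("php", "php3", ["xss"]),  # unfixable: dropped
    ]
    kept = filter_and_remediate(original)
    balanced = rebalance(original, kept)
    print(Counter(s.language for s in balanced))  # 3 python samples, 2 php samples
```

Downsampling restores proportions at the cost of dataset size: in the demo, PHP keeps only two of its four samples, so Python is cut from six to three to preserve the original 60/40 split. A production system might instead source additional clean samples for under-represented languages; which approach SonarSweep takes is not stated.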

“Even a small amount of flawed data can degrade models of any size, disproportionately degrading their output,” Sonar explained in its announcement.

By implementing this rigorous cleansing process, SonarSweep creates a “gold standard” dataset. Models trained on this optimized data are predisposed to generate code that is more secure, reliable, and maintainable, which changes both their behavior and their value proposition.

Quantifying the Quality Leap: The Tangible Impact

The theoretical benefits of cleaner training data are compelling, and Sonar’s initial testing offers early evidence of SonarSweep’s impact. Sonar reports a marked improvement in the quality of code generated by models trained on “swept” data compared with models trained on standard, unfiltered datasets.

The key findings from these initial tests are striking:

- A 67% reduction in generated security vulnerabilities.

- A 42% reduction in generated bugs.

These are not minor, incremental improvements; if they hold up beyond initial testing, they represent a significant step forward in the safety and reliability of AI-generated code. To understand the real-world implications, consider how these metrics translate into value for a business and its development team.

| Aspect | Training on Raw, Unfiltered Data | Training on SonarSweep-Optimized Data |
|---|---|---|
| Security Posture | High risk of the LLM generating vulnerable code, exposing the application to potential attacks. | 67% fewer security vulnerabilities, drastically reducing the attack surface and enhancing overall security. |
| Code Reliability | Frequent generation of buggy code, leading to unstable applications and poor user experiences. | 42% fewer bugs, resulting in more robust, reliable software and fewer production incidents. |
| Developer Productivity | Significant time lost debugging and refactoring flawed AI suggestions. | Increased focus on high-value innovation and feature development as less time is spent on fixing basic errors. |
| Technical Debt | The LLM may introduce anti-patterns and poor coding practices, complicating future maintenance. | The LLM promotes best practices and clean code principles, leading to a more maintainable and scalable codebase. |
| Model Trust | Developers must constantly second-guess the AI’s output, slowing down the development workflow. | Higher confidence in AI-generated code, enabling a more fluid and truly collaborative human-AI partnership. |

A 67% reduction in vulnerabilities means fewer sleepless nights for security teams, a lower risk of costly data breaches, and an easier path to regulatory compliance. A 42% reduction in bugs can translate into faster development cycles, higher developer morale, and a better end-user experience. SonarSweep doesn’t just make the model better; it has the potential to make the entire software development lifecycle more efficient, secure, and predictable.
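The headline figures are relative reductions measured against a baseline model trained on unfiltered data. The announcement as summarized here gives no absolute counts, so the formula below only shows how such a percentage should be read. N_raw and N_swept are our own notation for the number of vulnerabilities (or bugs) found in each model’s generated code under the same evaluation.

```latex
\begin{aligned}
\text{reduction} &= 1 - \frac{N_{\text{swept}}}{N_{\text{raw}}}
  \;\Longrightarrow\; N_{\text{swept}} = (1 - \text{reduction})\, N_{\text{raw}} \\
\text{vulnerabilities:}\quad N_{\text{swept}} &= (1 - 0.67)\, N_{\text{raw}} = 0.33\, N_{\text{raw}} \\
\text{bugs:}\quad N_{\text{swept}} &= (1 - 0.42)\, N_{\text{raw}} = 0.58\, N_{\text{raw}}
\end{aligned}
```

With a purely hypothetical baseline of 100 generated vulnerabilities, a 67% reduction would leave about 33; the absolute benefit therefore depends on how many issues the baseline model produced in the first place.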

Expanding the Horizon: Key Applications for Clean Data

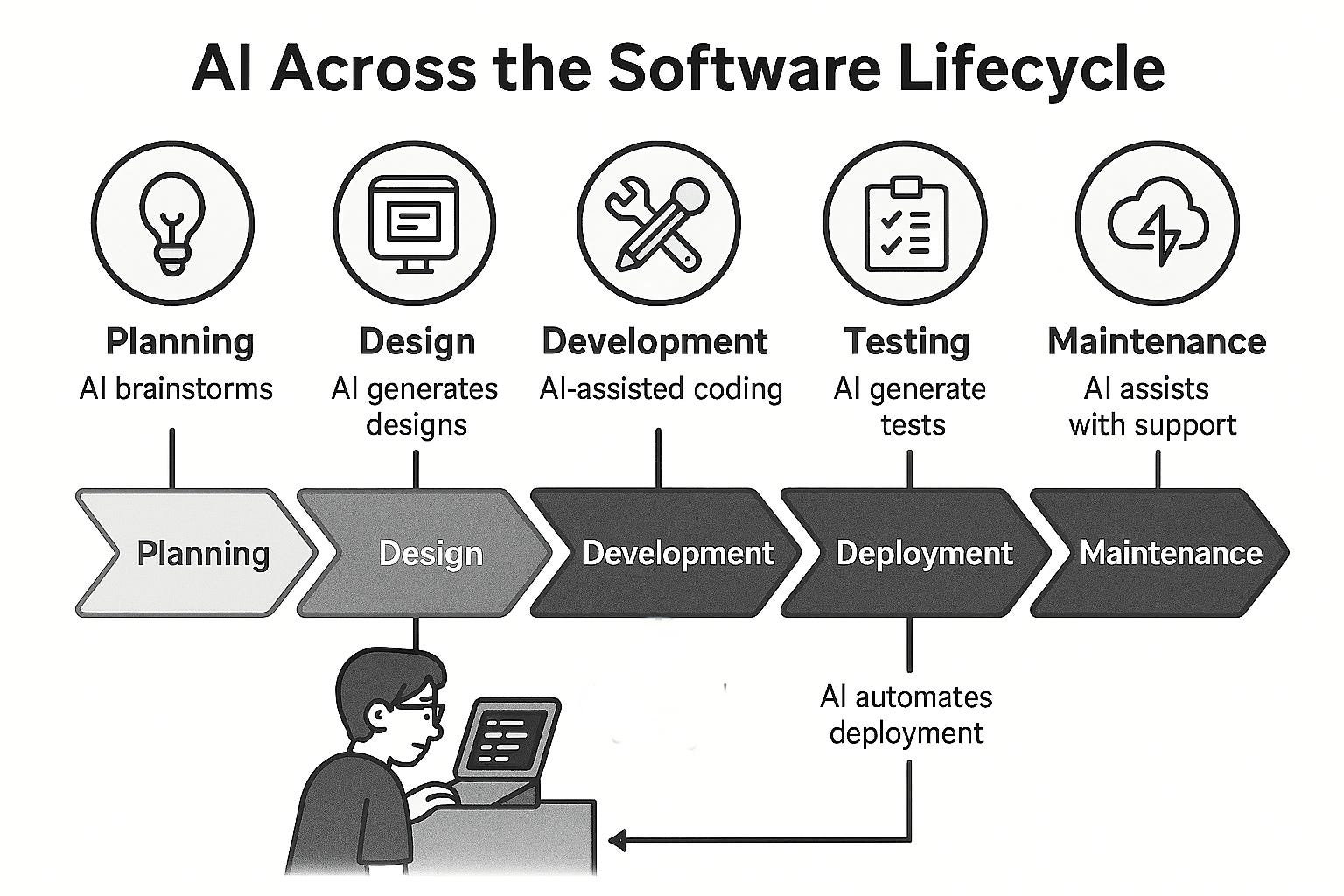

The applications for datasets curated by SonarSweep extend across the entire lifecycle of model development, offering powerful new avenues for creating more specialized and effective AI tools. Some of the most promising use cases include:

- Improving Foundation Model Training: The most direct application is in the pre-training and post-training of large foundation models. A base model trained on a SonarSweep-optimized dataset from the outset should have a built-in “immune system” against common security flaws and bugs, creating a stronger and safer foundation for all subsequent fine-tuning and specialization.

- Enhancing Reinforcement Learning: Many advanced models use techniques like Reinforcement Learning from Human Feedback (RLHF), in which the model is rewarded for generating “good” code. The definition of “good” is critical here. Using swept data as the benchmark for high-quality code can make the reinforcement learning process far more effective, teaching the model to optimize for security and correctness rather than just syntactic validity.

- Creating High-Performance Small Language Models (SLMs): There is growing demand for smaller, more efficient models that can run on-device or be specialized for a single task. A common technique for creating SLMs is “distillation,” in which a large “teacher” model imparts its knowledge to a smaller “student” model. If the teacher was trained on SonarSweep’s clean data, it passes on that high-quality, security-conscious knowledge. The resulting SLM is not only lean and efficient but also more likely to be safe and reliable.
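Distillation is commonly implemented by training the student to match the teacher’s temperature-softened output distribution. The sketch below shows that standard loss (a KL divergence scaled by the squared temperature) in plain Python. The logits are made-up numbers and nothing here is specific to SonarSweep; the point is that the student is pushed toward whatever the teacher has learned, flaws included, which is why the teacher’s training data matters.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    # Temperature > 1 softens the distribution, exposing the teacher's
    # relative preferences among lower-ranked tokens.
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float], student_logits: List[float], temperature: float = 2.0
) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical next-token logits from the teacher
    print(distillation_loss(teacher, teacher))  # 0.0: student already matches
    print(distillation_loss(teacher, [0.5, 1.0, 4.0]) > 0)  # True
```

Real training code would compute this over batches of token positions in a framework such as PyTorch or JAX and usually mix it with a standard cross-entropy term on the true labels; the per-position arithmetic is the same.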

A New Era of “Vibe Engineering”

Tariq Shaukat, CEO of Sonar, articulated a powerful vision for how this technology will reshape the industry. He emphasized a shift from downstream problem-fixing to upstream quality assurance, embedding good practices directly into the AI models themselves.

“The best way to boost software development productivity, reduce risks, and improve security is to tackle the problem at inception—inside the models themselves,” stated Shaukat. “Vibe engineering leveraging models enhanced through SonarSweep will have fewer issues in production, reducing the burden on developers and enterprises. Combined with strong verification practices, we believe this will substantially remove a major bottleneck in AI software development.”

Shaukat’s mention of “vibe engineering” points to a more intuitive and seamless future for development. When developers can trust the fundamental quality—the “vibe”—of the code generated by their AI partner, they can work more creatively and confidently. This removes the cognitive overhead of constantly vetting the AI’s suggestions for basic flaws, allowing developers to focus on higher-level architectural and logical challenges.

Crucially, this approach does not replace existing quality gates. Instead, it complements and strengthens them. Strong verification practices, such as continuous integration pipelines, peer code reviews, and runtime security monitoring, remain essential. However, when the initial code generated by an AI is of a much higher quality, these verification processes become faster, smoother, and more focused on nuanced business logic rather than catching elementary mistakes.

Conclusion: The Future of AI in Software Development is Clean

The incredible potential of AI in software development is undeniable, but it rests on a fragile foundation: the quality of its training data. The “garbage in, garbage out” problem has long been a specter haunting the field, threatening to undermine the trust and productivity gains that these powerful tools promise.

Sonar’s introduction of SonarSweep is more than just a new product; it is a declaration that this foundational challenge can and must be met. By cleaning, curating, and rebalancing training datasets, Sonar aims to pave the way for a new generation of LLMs that are not only capable but also more secure and reliable. Its initial results (a 67% reduction in generated vulnerabilities and a 42% reduction in generated bugs) point toward AI coding partners that developers can genuinely trust. If those gains hold, the future of software built with AI can rest on solid, clean, and secure ground.
