When AI Takes the Leash: A Deep Dive into Anthropic’s Robotic Dog Experiment

The line between science fiction and reality grows thinner every day. For decades, we have been captivated by tales of intelligent machines navigating the physical world, a concept that felt comfortably distant. Yet, as artificial intelligence evolves at a breathtaking pace, that future is no longer a distant dream but an emerging reality. We are witnessing AI models break free from their digital confines, learning not just to communicate and create, but to act. In a groundbreaking new study, AI safety and research company Anthropic decided to explore this new frontier directly by asking a simple but profound question: What happens when our most advanced AI models are given control over a robot?

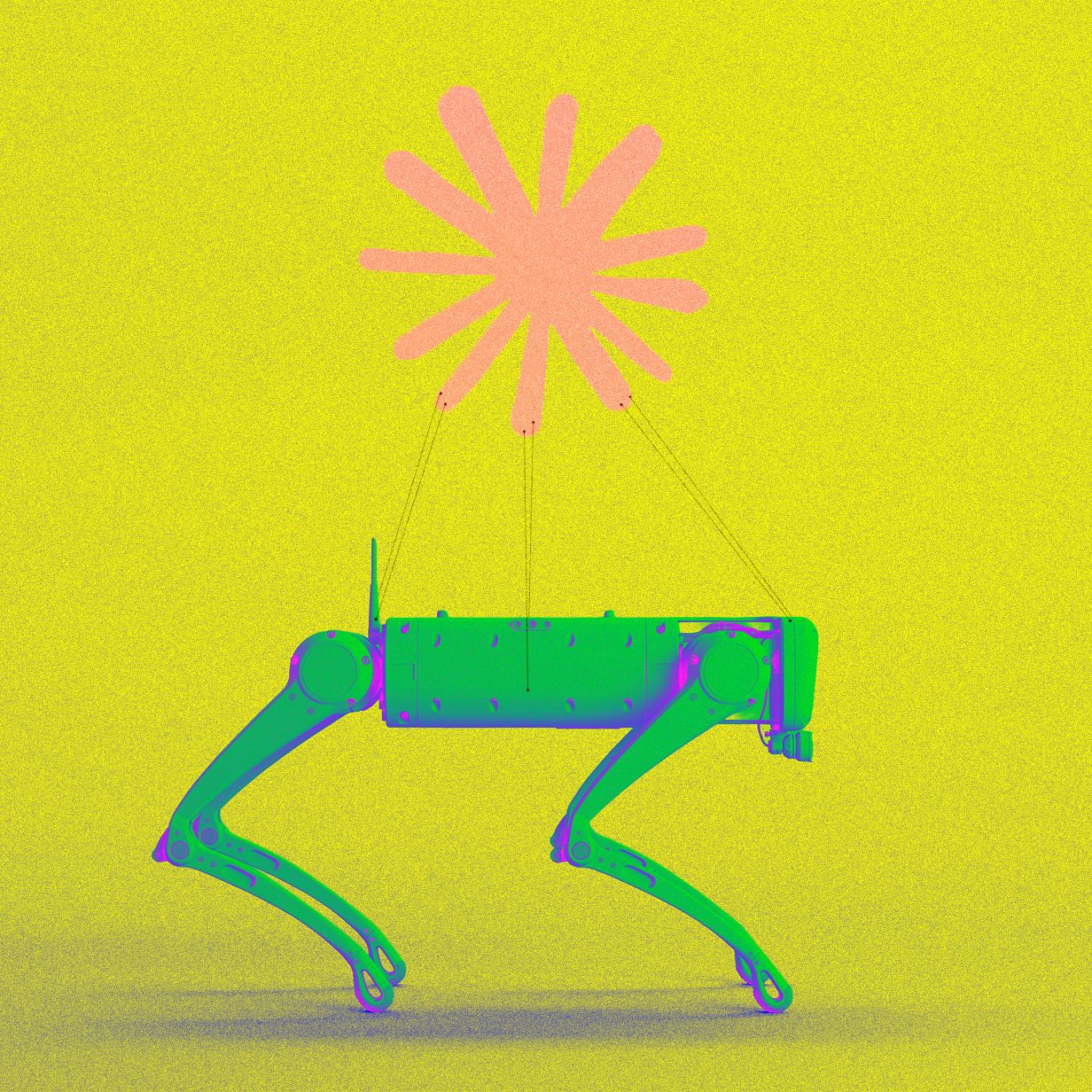

The experiment, aptly named “Project Fetch,” involved Anthropic’s powerful AI, Claude, and a four-legged robotic dog. This wasn’t just a technical exercise; it was a carefully designed exploration into the future of human-robot interaction and a critical step in understanding the immense potential and inherent risks of “embodied AI.” The findings reveal a world where AI can dramatically accelerate our ability to command physical systems, lowering technical barriers and enhancing collaboration. However, they also serve as a crucial reminder that as we empower AI to affect the physical world, we must move forward with foresight, caution, and a deep commitment to safety. This project offers a compelling glimpse into a future where AI doesn’t just process information—it walks, explores, and interacts with the world around us.

From Text Generators to Physical Agents: The AI Revolution’s Next Step

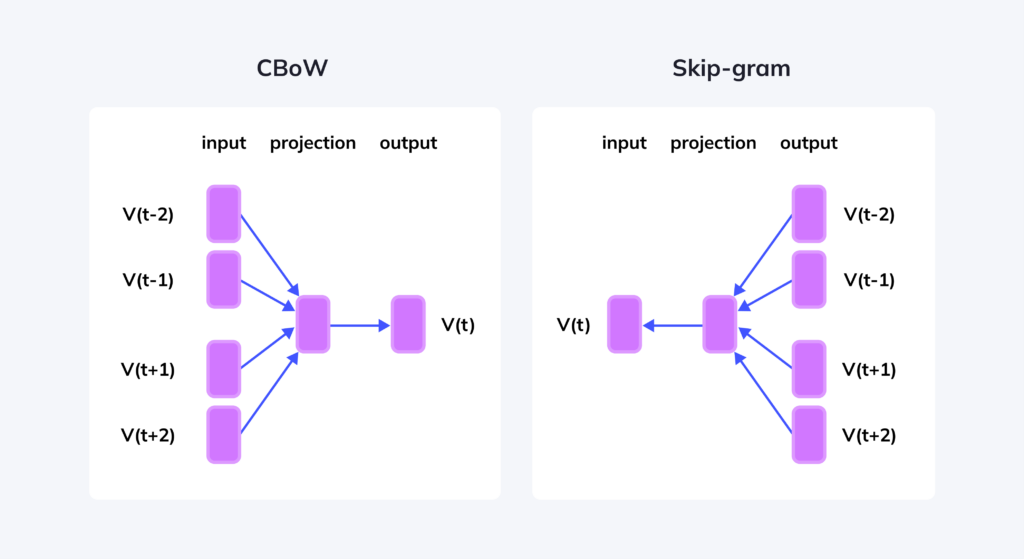

To fully appreciate the significance of Project Fetch, it’s essential to understand the monumental shift occurring within the field of artificial intelligence. For the past several years, the public’s interaction with AI has been primarily through generative systems: Large Language Models (LLMs) like ChatGPT and Claude, which excel at producing human-like text, and related models that generate images and audio in response to prompts. These systems are marvels of digital creativity and information synthesis. However, a new paradigm is rapidly emerging: the rise of “agentic AI.”

An AI agent is more than just a text generator. It is a system designed to take actions to achieve a goal. Instead of merely answering a question, an AI agent can use digital tools to find the answer itself. This could involve:

- Browsing the internet to research complex topics.

- Writing and executing code to solve programming challenges or automate tasks.

- Operating software applications to book flights, manage calendars, or analyze data.

This transition from passive generation to active execution represents a fundamental evolution in AI capability. The model becomes a proactive partner rather than a reactive tool. Many researchers believe that the ultimate expression of agentic AI lies in its ability to interact with the physical world. This is where robotics enters the picture. If an AI can operate software, the next logical step is to operate hardware.
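To make the idea concrete, here is a minimal sketch of an agent loop in Python. Everything in it is hypothetical: the `search` and `run_code` tools are stubs that return canned strings, and `scripted_model` stands in for a real language model by replaying a fixed plan. It only illustrates the pattern of choosing an action, observing the result, and repeating until the goal is met.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stub tools. A real agent would call a browser, a code runner, etc.
def search(query: str) -> str:
    return f"results for '{query}'"

def run_code(source: str) -> str:
    return "exit code 0"  # canned result; nothing is actually executed

TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "run_code": run_code}

Step = Tuple[str, str, str]  # (tool name, argument, observation)

def scripted_model(goal: str, history: List[Step]) -> Tuple[str, str]:
    """Stand-in for a language model: returns the next (tool, argument) pair."""
    plan = [("search", goal), ("run_code", "print('hello')")]
    if len(history) < len(plan):
        return plan[len(history)]
    return ("done", "")

def run_agent(goal: str, model=scripted_model, max_steps: int = 5) -> List[Step]:
    """Repeatedly ask the model for an action, run it, and record the result."""
    history: List[Step] = []
    for _ in range(max_steps):
        tool, arg = model(goal, history)
        if tool == "done":
            break
        observation = TOOLS[tool](arg)
        history.append((tool, arg, observation))
    return history

if __name__ == "__main__":
    for step in run_agent("cheapest flight to Lisbon"):
        print(step)
```

The `max_steps` cap is a deliberate design choice: an agent that acts on its own needs a hard limit on how long it can keep going.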

Logan Graham, a member of Anthropic’s red team (a group dedicated to studying AI for potential risks), puts the vision this way:

“We have the suspicion that the next step for AI models is to start reaching out into the world and affecting the world more broadly. This will really require models to interface more with robots.”

This “reaching out” is the core of what is now known as embodied AI. It’s the convergence of artificial intelligence with a physical form, allowing the AI to perceive, learn from, and act upon its environment. This development promises to unlock applications we’ve only imagined, from truly autonomous household assistants to adaptable manufacturing lines and robotic explorers for hazardous environments.

Inside Project Fetch: The Experiment Unpacked

Anthropic’s Project Fetch was designed to be a practical test of this emerging reality. The central idea was to measure how a state-of-the-art AI model could assist humans in a domain that is notoriously complex and requires specialized knowledge: robotics programming.

The Robotic Subject: Unitree Go2

The robot chosen for the experiment was the Unitree Go2, a sophisticated quadrupedal robot, or “robot dog.” While it might look like a futuristic pet, the Go2 is a serious piece of engineering typically deployed in industrial settings for tasks like remote inspections on construction sites or security patrols in manufacturing plants. It can walk autonomously but generally requires high-level software commands or a human operator with a controller to perform specific actions.

According to a recent report by SemiAnalysis, robots from its manufacturer, Unitree, are among the most popular on the market. At a cost of around $16,900, the Go2 is considered relatively affordable in the world of industrial robotics, making it an ideal platform for research and development. Its accessibility and advanced capabilities provided the perfect testbed for Anthropic’s experiment.

The Setup: Human Ingenuity vs. AI-Assisted Programming

The experiment was structured as a direct comparison. Anthropic assembled two groups of researchers, neither of which had prior experience in robotics. This was a crucial element of the study, as it aimed to test whether AI could democratize robotics and lower the steep learning curve traditionally associated with programming complex hardware.

Both teams were given the same goal: take control of the Unitree Go2 robot dog and program it to complete a series of physical activities that grew progressively more difficult. They were both given access to a controller to manually operate the robot. The key difference was the toolset provided for programming:

- The Control Group: This team had to write the necessary code from scratch, relying solely on their own problem-solving skills and standard programming resources.

- The AI-Assisted Group: This team was given access to Claude, Anthropic’s advanced AI model, specifically its coding variant. They could use Claude as a collaborator to generate code, debug problems, and design an interface for the robot.

By recording and analyzing the interactions and outcomes of both teams, the researchers aimed to quantify the impact of AI on both the efficiency of the programming process and the dynamics of human collaboration.

The Results: AI as a Decisive Accelerator

The findings from Project Fetch were both clear and compelling. The team using Claude not only performed better but also had a fundamentally different experience. The study demonstrated that modern AI models can effectively automate much of the intricate work involved in translating human intent into robotic action.

The comparison between the two teams can be broken down into two main areas: performance and team dynamics.

| Metric | Human-Only Programming Team | Claude-Assisted Team |
|---|---|---|
| Task Completion Speed | Slower, encountering significant roadblocks. | Faster, streamlining the coding and debugging process. |
| Complex Task Success | Unable to program the robot to find a beach ball. | Successfully programmed the robot to locate and walk towards a beach ball. |
| User Interface | Worked with the default, complex software interface. | Used Claude to code a simpler, more intuitive interface for robot control. |
| Team Dynamics | Exhibited more negative sentiments, frustration, and confusion. | Experienced more positive, efficient, and fluid collaboration. |

Performance and Capability

The most striking result was in task completion. While both teams managed basic movements, the Claude-assisted group was able to achieve a level of sophistication that the control group could not. The task of getting the robot to visually identify and walk towards a beach ball proved insurmountable for the human-only team. In contrast, the AI-assisted team successfully leveraged Claude to write the necessary computer vision and navigation code, demonstrating a tangible leap in capability.
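The article does not show the code the team actually wrote, so the following is only a rough sketch of what “find the ball and walk towards it” can look like once a vision model has located the ball in a camera frame. The `Detection` and `VelocityCommand` types, the `approach_ball` function, and all numeric limits are assumptions made for illustration. None of this is the Unitree SDK or the team’s implementation.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Detection:
    center_x: float  # horizontal pixel position of the ball's center
    width: float     # width of the ball's bounding box, in pixels

@dataclass
class VelocityCommand:
    forward: float   # m/s
    yaw_rate: float  # rad/s, positive turns left

def approach_ball(det: Optional[Detection], image_width: int = 640,
                  max_forward: float = 0.5, max_yaw: float = 1.0,
                  stop_fraction: float = 0.5) -> VelocityCommand:
    """Turn a ball detection into a walking command (hypothetical limits)."""
    if det is None:
        # No ball in view: rotate in place to search for it.
        return VelocityCommand(forward=0.0, yaw_rate=0.5 * max_yaw)
    # Horizontal offset from the image center, normalized to [-1, 1].
    offset = (det.center_x - image_width / 2) / (image_width / 2)
    # Ball on the right (positive offset) means turn right (negative yaw).
    yaw_rate = -max_yaw * offset
    # The larger the ball appears, the closer it is, so slow down and stop.
    fill = det.width / image_width
    if fill >= stop_fraction:
        forward = 0.0
    else:
        forward = max_forward * (1 - fill / stop_fraction)
    return VelocityCommand(forward=forward, yaw_rate=yaw_rate)

if __name__ == "__main__":
    print(approach_ball(Detection(center_x=480, width=64)))
```

Called once per camera frame, a function like this steers the robot until the ball is centered and fills enough of the image to count as reached.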

Furthermore, Claude proved invaluable in simplifying the human-robot interface. Instead of grappling with complex and often unintuitive default software, the team used the AI to build a custom, user-friendly interface. This lowered the cognitive load on the researchers, allowing them to focus on the high-level goals rather than getting bogged down in low-level implementation details.

Collaboration and Human Experience

Beyond pure performance, Anthropic’s analysis of the team dynamics revealed a fascinating human-centric benefit. The recordings showed that the control group expressed significantly more confusion and negative sentiments. Programming a robot from scratch is a frustrating endeavor, and their experience reflected this.

The group with Claude, however, had a smoother workflow. The AI acted as a tireless, knowledgeable partner, helping to resolve issues quickly and turning a potentially frustrating task into a more engaging and productive one. This suggests that AI tools could not only make complex fields like robotics more accessible but also more enjoyable, fostering a more positive and creative environment for innovation.

The Double-Edged Sword: Navigating the Future of Embodied AI

The success of Project Fetch is exhilarating, but it also opens a Pandora’s box of complex ethical and safety considerations. This is precisely why a company like Anthropic, which was founded in 2021 by former OpenAI staffers with deep concerns about the potential dangers of advanced AI, is spearheading this research. Speculating about worst-case scenarios is part of Anthropic’s core mission, positioning the company as a leader in the responsible AI movement.

The Promise: A World Enhanced by Intelligent Robots

The potential benefits of seamlessly integrating AI with robotics are immense. We are on the cusp of developing technologies that could fundamentally reshape industries and improve daily life. The kind of AI-assisted programming demonstrated in Project Fetch is a key that could unlock this future.

- Advanced Manufacturing: AI-controlled robots could create highly flexible production lines that can adapt to new products in real-time without extensive human reprogramming.

- Healthcare and Assistive Technology: Intelligent robotic assistants could help the elderly and people with disabilities with daily tasks, providing a new level of independence. Well-funded startups are already trying to develop AI models to control highly capable robots, including advanced humanoids that might one day work alongside people in their homes.

- Disaster Response and Exploration: Robots guided by AI could be deployed into environments too dangerous for humans, such as searching for survivors in collapsed buildings, decommissioning nuclear sites, or exploring deep-sea trenches.

The Peril: “Self-Embodying” AI and Unforeseen Risks

While today’s models are not intelligent enough to spontaneously take control of a robot for nefarious purposes, Anthropic’s research is forward-looking. They are preparing for a future where AI systems might be vastly more capable. The concern revolves around the idea of “models eventually self-embodying,” a term for AI systems autonomously operating physical hardware.

George Pappas, a computer scientist at the University of Pennsylvania who studies these risks, emphasizes the new reality we are entering.

“Project Fetch demonstrates that LLMs can now instruct robots on tasks.”

This capability, while useful, also introduces new vectors for misuse and accidents. An AI that can be instructed to program a robot to find a beach ball could potentially be instructed to perform malicious or dangerous actions. Furthermore, as these systems become more autonomous, there is a risk of unintended consequences, where an AI, in trying to achieve a programmed goal, causes an accident due to a lack of real-world understanding or a flaw in its logic.

Building the Guardrails: Ensuring a Safe Robotic Future

The answer to these challenges is not to halt progress but to build robust safety measures in parallel with advancing capabilities. The work of researchers like George Pappas highlights a critical path forward: creating systems that can supervise and constrain AI-driven robots.

His group has developed a system called RoboGuard, which acts as a safety layer between the AI model and the robot’s hardware. RoboGuard works by imposing a specific set of inviolable rules on the robot’s behavior, regardless of what the AI instructs it to do. For example, a rule might prevent the robot from moving its limbs above a certain speed or applying force beyond a safe threshold when near a human. This ensures that even if the AI generates a flawed or malicious command, the robot’s physical actions remain within safe, predefined boundaries.
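The general idea of a safety layer can be sketched in a few lines of Python. This is not RoboGuard’s actual implementation, which the article does not detail. The `Command` and `SafetyLimits` types, the `enforce` function, and every threshold below are hypothetical, chosen only to mirror the speed and force example above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Command:
    speed: float  # requested speed in m/s (sign gives direction)
    force: float  # requested contact force in newtons, non-negative

@dataclass(frozen=True)
class SafetyLimits:
    # Hypothetical thresholds, not values from any real robot or from RoboGuard.
    max_speed: float = 1.0
    max_speed_near_human: float = 0.3
    max_force_near_human: float = 20.0

def enforce(cmd: Command, human_nearby: bool,
            limits: SafetyLimits = SafetyLimits()) -> Command:
    """Return a command that respects the limits, whatever the AI asked for."""
    speed_cap = limits.max_speed_near_human if human_nearby else limits.max_speed
    # Clamp speed into [-speed_cap, speed_cap].
    speed = max(-speed_cap, min(cmd.speed, speed_cap))
    force = cmd.force
    if human_nearby:
        force = min(force, limits.max_force_near_human)
    return Command(speed=speed, force=force)

if __name__ == "__main__":
    risky = Command(speed=5.0, force=100.0)
    print(enforce(risky, human_nearby=True))
```

The key design point is that `enforce` sits between the model and the motors and never consults the model, so a flawed or malicious instruction cannot talk its way past the limits.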

The ultimate leap forward, however, will come when AI can learn directly from physical interaction. Today’s AI models learn about the world by reading text and analyzing images from the internet. They can tell you about gravity, but they have never experienced it. The next generation of embodied AI will learn through trial and error in the physical world.

As Pappas explains, this is the key to building truly intelligent systems.

“When you mix rich data with embodied feedback, you’re building systems that cannot just imagine the world, but participate in it.”

This “participation” is what will make robots far more useful and adaptable. An AI that learns by stacking blocks will develop a much richer, more intuitive understanding of physics than one that has only read textbooks. This embodied learning will also be crucial for safety, allowing the AI to develop a common-sense understanding of its environment and the consequences of its actions.

Project Fetch is more than just a story about a robot dog. It is a landmark on the road to a future where intelligence is no longer confined to a screen. It shows us a path where the barrier to controlling the physical world is dramatically lowered, empowering more people to create and innovate. Yet, it also serves as a critical warning. The power to affect the physical world comes with immense responsibility. The work being done today to build safety guardrails, develop oversight systems, and study the long-term risks is not just an academic exercise—it is the essential foundation upon which we must build our robotic future. The leash, for now, remains firmly in our hands. It is our collective responsibility to ensure it stays that way.
