The New Frontier of AI Evaluation: Why Cultural Nuance is the Next Benchmark

Artificial intelligence is rapidly becoming a global force, a ubiquitous presence in our digital lives. Yet, for all its advanced capabilities, modern AI has a significant blind spot: the world itself. The development, training, and, most critically, evaluation of large language models (LLMs) have been overwhelmingly dominated by a single language: English. English is a global lingua franca, but only about 20% of the world’s population speaks it. This linguistic and cultural bias has created a digital paradox: we are building supposedly universal intelligence on a foundation that excludes the lived experiences, histories, and communication styles of billions.

The benchmarks used to measure AI progress reflect this narrow worldview. They often test for rote knowledge, translation accuracy, or performance on multiple-choice questions, tasks that fail to capture the deep, contextual understanding that defines true intelligence. Recognizing this gap, OpenAI has begun an initiative to redefine how we measure AI capability. The company is developing a new generation of benchmarks designed to evaluate models not just on what they know, but on how well they understand and reason within diverse linguistic and cultural contexts. Its first major step is a comprehensive benchmark for India, a project that signals a shift from a monolingual, Western-centric paradigm to a more global and inclusive vision for the future of artificial intelligence.

The Saturation Point: Why Current AI Benchmarks Are Failing Us

For years, the AI community has relied on a suite of standardized tests to chart the progress of language models. Benchmarks like Multilingual Massive Multitask Language Understanding (MMMLU) have served as crucial yardsticks, pushing models to become more knowledgeable and capable. However, we are rapidly approaching a point of diminishing returns. OpenAI has observed that these existing benchmarks are becoming “saturated,” meaning they no longer differentiate between top performers. When the most advanced models all cluster near perfect scores, the benchmark ceases to be a meaningful indicator of genuine progress. It’s akin to giving a calculus exam to a group of advanced mathematicians; they would all likely ace it, but the test would tell us nothing about who is making groundbreaking discoveries in the field.
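One rough way to make "saturation" concrete is to look at how much headroom and spread remain among the top models' scores. The sketch below is illustrative only: the function names, the thresholds, and the example scores are all assumptions made for this example, not figures from OpenAI or from any real leaderboard.

```python
def headroom_and_spread(scores):
    """Return (headroom, spread) for accuracy scores in the range [0, 1].

    headroom: distance between the best score and a perfect score.
    spread:   distance between the best and worst score in the group.
    """
    best, worst = max(scores), min(scores)
    return 1.0 - best, best - worst


def is_saturated(scores, min_headroom=0.05, min_spread=0.03):
    """Flag a benchmark as saturated when top models sit near the ceiling
    and are barely distinguishable. Both thresholds are arbitrary
    assumptions chosen for illustration."""
    headroom, spread = headroom_and_spread(scores)
    return headroom < min_headroom and spread < min_spread


# Hypothetical scores for three top models on two benchmarks.
older_benchmark = [0.97, 0.98, 0.965]   # everyone clusters near the ceiling
newer_benchmark = [0.34, 0.46, 0.29]    # plenty of room to differentiate

print(is_saturated(older_benchmark))
print(is_saturated(newer_benchmark))
```

With these made-up numbers, the first benchmark leaves about two points of headroom and cannot separate the models, while the second still can.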

This saturation highlights a deeper, more fundamental flaw in our evaluation methods. The problem isn’t just that the questions are becoming too easy, but that they are the wrong kind of questions altogether. The overwhelming focus has been on tasks that are easily quantifiable but shallow in their assessment of intelligence.

Current multilingual benchmarks primarily focus on:

- Direct Translation: Evaluating a model’s ability to convert text from one language to another, often without regard for idiomatic expressions or cultural context.

- Multiple-Choice Questions: Forcing a model to select a single correct answer from a predefined list, which tests knowledge recall rather than nuanced reasoning or creative problem-solving.

These methodologies fail to measure a model’s grasp of regional context, cultural subtleties, historical perspectives, and the intricate tapestry of human values that shape communication. An AI might be able to correctly translate a proverb, but does it understand the cultural wisdom it contains? It might identify a historical figure, but can it explain their significance from the perspective of the people and culture they belong to? Without this deeper level of comprehension, an AI is little more than a sophisticated search engine—a tool for information retrieval, not a partner in understanding. This limitation is particularly stark when considering the 80% of the global population whose languages and cultures are underrepresented in AI training data, making the models less useful, and sometimes even nonsensical, for a majority of the world.

A Case Study in Context: Introducing IndQA

To pioneer a new standard, OpenAI turned its attention to India, its second-largest market and a region of unparalleled diversity. Home to 22 constitutionally recognized languages and a staggering number of dialects, cultures, and traditions, India represents both the ultimate challenge and the ultimate opportunity for building a culturally intelligent AI. The result of this focus is IndQA, a new benchmark designed from the ground up to evaluate how well AI models understand and reason about topics that are deeply relevant to Indian life.

IndQA is not merely a translation of an existing English benchmark. It is a completely new framework created in collaboration with 261 domain experts from across India, including esteemed journalists, linguists, scholars, artists, and industry practitioners. Their collective expertise ensures that the benchmark is authentic, relevant, and reflective of the nation’s rich complexity.

The structure of IndQA is a testament to its depth and ambition.

| IndQA at a Glance | Count |
|---|---|
| Total Questions | 2,278 |
| Languages Covered | 12 |
| Cultural Domains | 10 |
| Contributing Experts | 261 |

This benchmark moves far beyond simple queries, diving into subjects that require a sophisticated understanding of local context.

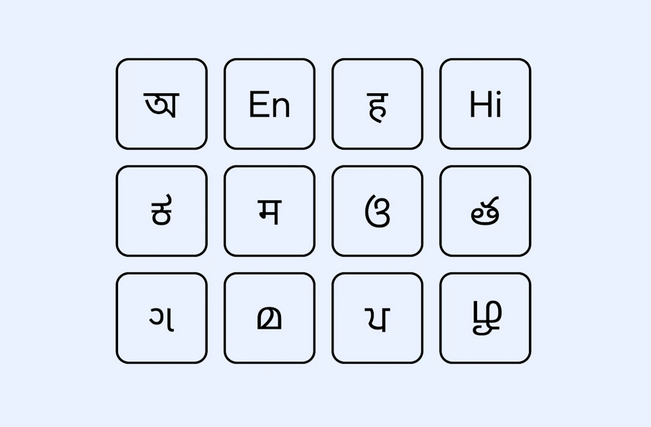

The Languages of IndQA

The selection of languages demonstrates a keen awareness of how communication truly functions in India. The benchmark includes major languages spoken by tens of millions, ensuring broad coverage.

- Bengali

- English

- Gujarati

- Hindi

- Kannada

- Malayalam

- Marathi

- Odia

- Punjabi

- Tamil

- Telugu

- Hinglish

The inclusion of Hinglish—a fluid, conversational mix of Hindi and English—is particularly insightful. It acknowledges the reality of “code-switching,” a common practice where speakers alternate between languages within a single conversation. By testing on Hinglish, OpenAI is pushing models to understand language as it is actually spoken, not just in its formal, textbook form.

The Cultural Tapestry of IndQA

The questions in IndQA are distributed across a wide array of cultural domains, ensuring that the evaluation is holistic and touches upon all facets of life.

- Architecture & Design: Understanding regional architectural styles and their historical significance.

- Arts & Culture: Knowledge of classical dance forms, folk art, and modern artistic movements.

- Everyday Life: Comprehending social customs, family structures, and daily routines.

- Food & Cuisine: Grasping the diversity of regional cuisines, ingredients, and culinary traditions.

- History: Reasoning about historical events from local and national perspectives.

- Law & Ethics: Understanding legal frameworks and ethical dilemmas relevant to the region.

- Literature & Linguistics: Interpreting poetry, prose, and linguistic nuances from various traditions.

- Media & Entertainment: Knowledge of Indian cinema, television, and popular media.

- Religion & Spirituality: Understanding the philosophies and practices of the diverse religions in India.

- Sports & Recreation: Familiarity with popular traditional and modern sports.

By building a benchmark with this level of specificity and cultural grounding, OpenAI is creating a powerful tool to expose the weaknesses of current models and guide the development of more sophisticated and worldly AI systems.

Building a Better Benchmark: The Human-in-the-Loop Approach

The creation of IndQA represents a paradigm shift in methodology. Instead of relying on automated processes or simply translating existing materials, OpenAI adopted a meticulous, human-centric approach. The collaboration with 261 Indian domain experts was not a cursory consultation; it was the foundational pillar of the entire project. These experts were responsible for crafting questions that are not only linguistically accurate but also culturally resonant—queries that an outsider, or an AI trained on generic web data, would struggle to answer.

This human-in-the-loop process ensures that the benchmark avoids the pitfalls of cultural misunderstanding and stereotypical representation that can arise from purely data-driven methods. Each of the 2,278 questions in IndQA is a carefully constructed datapoint designed for rigorous and fair evaluation.

A single datapoint in the IndQA benchmark contains:

- A Culturally Grounded Prompt: The question itself, written in one of the benchmark’s 12 languages.

- An English Translation: This component makes the benchmark auditable and accessible to a global research community, allowing teams to understand the nature of the question without needing fluency in the original language.

- Rubric Criteria for Grading: A detailed set of guidelines that specifies what constitutes a correct, partially correct, or incorrect answer. This rubric ensures that evaluations are consistent and objective.

- An Expected Answer from Domain Experts: A model answer, provided by the experts, that serves as the gold standard against which the AI’s response is measured.
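The four components listed above map naturally onto a small record type. The following is a minimal sketch, not OpenAI's actual schema or grading code: the class names, the `language` and `domain` fields, the point-weighted criteria, and the scoring formula are all assumptions made for illustration. The sample item reuses this article's own hypothetical jali question rather than a real IndQA entry, and the decision about whether each criterion is met is taken as input instead of being computed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RubricCriterion:
    """One gradable expectation; the point weighting is an assumption."""
    description: str
    points: float


@dataclass(frozen=True)
class IndQAItem:
    """Hypothetical container for the four components of a datapoint."""
    prompt: str                          # question in the original language
    english_translation: str             # for auditability
    rubric: tuple[RubricCriterion, ...]  # grading criteria
    expected_answer: str                 # expert-written reference answer
    language: str = ""                   # assumed metadata field
    domain: str = ""                     # assumed metadata field


def rubric_score(item, criteria_met):
    """Return the fraction of rubric points earned, between 0 and 1.

    criteria_met holds one boolean per criterion, in rubric order. How
    those judgments are produced (human or model grader) is out of scope.
    """
    if len(criteria_met) != len(item.rubric):
        raise ValueError("need exactly one judgment per rubric criterion")
    total = sum(c.points for c in item.rubric)
    if total <= 0:
        raise ValueError("rubric must carry a positive number of points")
    earned = sum(c.points for c, met in zip(item.rubric, criteria_met) if met)
    return earned / total


# Illustrative item; the rubric entries and weights are invented.
item = IndQAItem(
    prompt="(original-language prompt goes here)",
    english_translation=(
        "Explain the symbolic significance of the 'jali' latticework "
        "in Mughal architecture."
    ),
    rubric=(
        RubricCriterion("Describes what jali latticework is", 2.0),
        RubricCriterion("Explains the balance of privacy and openness", 1.0),
        RubricCriterion("Places it in its historical context", 1.0),
    ),
    expected_answer="(expert-written reference answer goes here)",
    language="Hindi",
    domain="Architecture & Design",
)

# A response judged to meet the first two criteria earns 3 of 4 points.
print(rubric_score(item, [True, True, False]))  # 0.75
```

Keeping the judgments separate from the arithmetic means the same scoring function works whether a human expert or an automated grader decides which criteria a response satisfies.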

This comprehensive structure elevates IndQA far beyond a simple question-and-answer set. It is a sophisticated evaluation tool that prioritizes depth, context, and authenticity. By grounding the benchmark in the knowledge of local experts, OpenAI is ensuring that it measures what truly matters: a genuine understanding of a culture, not just a superficial familiarity with its keywords.

Beyond Translation: Measuring True Understanding and Reasoning

The real innovation of IndQA lies in the type of intelligence it aims to measure. It moves the goalposts from information recall to contextual reasoning. The questions are designed to be challenging in ways that current models are not prepared for, requiring an ability to connect disparate pieces of cultural knowledge, interpret nuance, and reason from a non-Western perspective.

For example, instead of asking a simple factual question like, “What is the Taj Mahal?”, a question in IndQA might be framed as: “Explain the symbolic significance of the ‘jali’ latticework in Mughal architecture, particularly in its function of balancing privacy and openness for women in the zenana.” Answering this requires more than just knowing a definition; it demands historical, cultural, and architectural knowledge.

Similarly, a question in the Food & Cuisine domain might not be “What are the ingredients in biryani?”, but rather, “Compare the ‘kacchi’ method of preparing biryani, in which raw marinated meat is layered with rice and slow-cooked, with the ‘pakki’ method, in which the meat is cooked before layering, and explain how each technique reflects local culinary history.” This probes for an understanding of process, history, and comparison, all hallmarks of higher-order reasoning.

By posing these kinds of culturally grounded prompts, IndQA tests a model’s ability to:

- Synthesize Information: Combine knowledge from different domains (e.g., history, art, religion) to form a coherent answer.

- Understand Context: Recognize that the meaning of a concept can change dramatically depending on the regional, social, or historical context.

- Appreciate Nuance: Move beyond literal interpretations to grasp subtle, implicit meanings embedded in language and cultural practices.

- Avoid Stereotypes: Provide answers that are specific and accurate, rather than relying on broad, often misleading generalizations.

This new form of evaluation is essential for developing AI that can serve as a truly helpful and respectful assistant for users around the world. An AI that can navigate these complexities is one that can offer more relevant advice, generate more meaningful content, and engage in more natural and helpful conversations with people from all walks of life.

The Ripple Effect: Setting a “North Star” for Global AI Development

The significance of IndQA extends far beyond its immediate application. OpenAI explicitly states that it sees this project as an inspiration and a blueprint for creating similar benchmarks for other regions and cultures across the globe. In its own words, this approach can provide a “north star for improvements in the future.”

In a blog post, the company explained the broader vision: “IndQA style questions are especially valuable in languages or cultural domains that are poorly covered by existing AI benchmarks. Creating similar benchmarks to IndQA can help AI research labs learn more about languages and domains models struggle with today.”

This initiative effectively throws down the gauntlet to the entire AI industry. It challenges other major research labs to look beyond their saturated, English-centric evaluations and begin the difficult but necessary work of building models that are truly multicultural. The creation and open discussion of IndQA will likely have several profound effects on the field:

- Driving Competition on a New Axis: Success will no longer be measured solely by scores on English benchmarks. AI labs will be pushed to demonstrate their models’ capabilities across a diverse range of languages and cultures.

- Improving Data Diversity: To perform well on benchmarks like IndQA, companies will need to invest in curating and processing high-quality, culturally diverse training data, moving beyond simply scraping the English-dominated internet.

- Fostering Global Collaboration: The success of IndQA was built on collaboration with local experts. This model encourages AI developers to engage with communities around the world, leading to more inclusive and equitable technology.

- Enhancing User Experience Worldwide: Ultimately, the greatest beneficiaries will be the billions of non-English speaking users who will gain access to AI tools that understand their language, respect their culture, and are genuinely useful in their daily lives.

Charting the Course for a Truly Global AI

The development of IndQA is more than just a technical update; it is a philosophical statement about the future of artificial intelligence. It marks a decisive move away from a monolithic view of intelligence toward a celebration of its diverse, global manifestations. The era of measuring AI with simplistic, saturated, and culturally sterile benchmarks is coming to an end. The new frontier is one of context, nuance, and deep cultural understanding.

By building a framework that values the richness of human experience in all its forms, OpenAI is not only creating a better tool for measuring its models but is also lighting the way for the entire industry. This initiative is a critical step toward realizing the ultimate promise of AI: to create a technology that empowers, connects, and understands all of humanity, not just a fraction of it. The journey is long, but with this new “north star” to guide the way, the course is finally set for a truly global AI.
