The Pentagon’s New AI Frontier: OpenAI’s Open-Weight Models Enter the Fray

The world of artificial intelligence is often defined by a stark contrast: the closely guarded, proprietary models of giants like OpenAI and the open, community-driven alternatives. For years, organizations with extreme security needs, particularly the US military, could only look on as the most powerful AI systems remained locked away in the cloud. However, a recent strategic shift by OpenAI is poised to reshape the landscape of national defense. The release of its first open-weight models in years, gpt-oss-120b and gpt-oss-20b, has unlocked new possibilities for military applications, igniting both excitement and a healthy dose of skepticism among defense contractors.

These new models represent a pivotal moment. Unlike their famous sibling, ChatGPT, which operates exclusively on OpenAI’s servers, these “open-weight” systems can be downloaded and run on private, secure hardware. This capability is not just a technical detail; it is the key that unlocks the use of cutting-edge AI in environments where an internet connection is a liability, not a feature. For the military, this means the potential to deploy sophisticated AI for sensitive operations without ever exposing critical data to the outside world. While initial tests suggest OpenAI still has ground to cover to match its open-source competitors, its entry into this domain signals a new era of competition and innovation at the intersection of AI and national security.

A New Battlefield for AI: The Push for Air-Gapped Intelligence

To understand the significance of OpenAI’s move, one must first grasp the concept of “air-gapping.” In the world of high-stakes cybersecurity, an air-gapped system is one that is physically isolated from unsecured networks, including the public internet. This isolation is among the strongest defenses against remote cyberattacks, data breaches, and espionage. For military and intelligence agencies handling classified information, from analyzing foreign communications to processing battlefield sensor data, air-gapping is a non-negotiable requirement.

For years, this security mandate created a major obstacle for AI adoption. The most advanced large language models (LLMs) from leading labs were offered as a service, accessible only through a cloud connection. This model was fundamentally incompatible with the military’s most sensitive operations. As a result, defense contractors and military tech divisions had to either develop their own bespoke AI models from scratch or adapt existing open-source alternatives like Meta’s Llama and Google’s Gemma.

OpenAI’s new gpt-oss models shatter this limitation. The key lies in the term “open-weight.” This means that the core parameters—the “weights” that define the model’s knowledge and behavior—are publicly available. With these weights, an organization can:

- Run the model locally: Install and operate the AI on its own secure, air-gapped servers, completely disconnected from the internet.

- Customize and fine-tune: Tailor the model for highly specific tasks by training it on proprietary, classified data without that data ever leaving the secure environment.

- Maintain complete control: Eliminate reliance on a third-party provider, ensuring the AI remains operational even if external networks are compromised or unavailable.
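As a rough illustration of the first point, the sketch below loads a model strictly from local disk with network access disabled. It assumes the weights have already been copied onto the isolated machine in a format the Hugging Face `transformers` library can read; the directory path and the helper names (`enable_offline_mode`, `load_local_model`, `generate_reply`) are hypothetical, and nothing here reflects how any particular contractor actually deploys gpt-oss.

```python
import os
from pathlib import Path

# Hypothetical location of weights copied onto the isolated machine.
MODEL_DIR = Path("/models/gpt-oss-20b")


def enable_offline_mode() -> None:
    """Tell the Hugging Face libraries not to attempt any network access."""
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def load_local_model(model_dir: Path):
    """Load a tokenizer and model from local files only."""
    if not model_dir.is_dir():
        raise FileNotFoundError(f"No local weights found at {model_dir}")
    enable_offline_mode()
    # Imported lazily so the offline flags are set before the library loads.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # local_files_only=True refuses to download anything that is missing.
    tokenizer = AutoTokenizer.from_pretrained(str(model_dir), local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(str(model_dir), local_files_only=True)
    return tokenizer, model


def generate_reply(prompt: str, model_dir: Path = MODEL_DIR) -> str:
    """Run one prompt through the locally hosted model and return the text."""
    tokenizer, model = load_local_model(model_dir)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=64)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_reply("Summarize this report.")` on a machine that holds the weights would run entirely on local hardware. If the directory is missing, the function fails immediately rather than reaching for the internet, which is the behavior an air-gapped deployment wants.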

This newfound freedom is critical for the Pentagon’s ambitions. Doug Matty, the chief digital and AI officer for the Department of Defense (referred to as the Department of War by the Trump administration), emphasized the necessity of integrating generative AI into both battlefield systems and essential back-office functions like auditing. “Our capabilities must be adaptable and flexible,” Matty stated, underscoring that many of these applications will demand models that are not tethered to the cloud.

Early Adopters and Initial Verdicts

The arrival of gpt-oss has spurred immediate interest from companies that build technology for the defense sector. Their initial experiments, however, reveal a mixed but promising picture.

One such company is Lilt, an AI translation firm that contracts with the military to analyze foreign intelligence. Their work involves handling highly sensitive documents, which necessitates the use of air-gapped systems. Previously reliant on their own models or open-source options, Lilt was eager to test OpenAI’s offering. Spence Green, Lilt’s CEO, explained a typical use case where an analyst might prompt the system: “Translate these documents to English and ensure that there are no mistakes. Then have the most knowledgeable person about hypersonics check the work.” This workflow automates translation and intelligently routes information to the correct human experts, addressing a critical shortage of language specialists.

However, Lilt’s evaluation found that the gpt-oss models had limitations for their specific needs:

- Modality: The models currently process only text, while military intelligence often includes crucial images and audio.

- Performance: They underperformed in some languages and struggled when operating with limited computing power, a common constraint in deployed environments.

Despite these shortcomings, Green remains optimistic. “With gpt-oss, there’s a lot of model competition right now,” he noted. “More options, the better.”

In contrast, other companies have found more immediate success. EdgeRunner AI is developing a virtual personal assistant for military personnel that operates without a cloud connection. According to a paper the company published, they achieved strong performance by fine-tuning a gpt-oss model on a specialized cache of military documents. This customized version is now slated for testing by the US Army and Air Force, a significant step past the demo stage.

Jordan Wiens, cofounder of Vector 35, which provides the Pentagon with reverse-engineering tools, confirmed that they have also integrated gpt-oss into their products. However, he cautioned that it’s “pretty early,” with most Pentagon-related projects still in preliminary phases.

The Great Debate: Open-Weight vs. Closed-Cloud Models

OpenAI’s entry into the open-weight field intensifies a long-standing strategic debate within the defense community: Should the military invest in customizable, self-hosted models or rely on the superior raw power of proprietary, cloud-based systems? Both approaches have passionate advocates and present a complex trade-off between control and capability.

Nicolas Chaillan, founder of the AI platform Ask Sage and a former chief software officer for the US Air Force and Space Force, is a vocal critic of over-reliance on open-source models for critical military functions. He argues that they come with serious drawbacks.

“It’s like going from PhD level to a monkey,” Chaillan claims, arguing that open models hallucinate and produce incorrect outputs more frequently than the best commercial systems. He also points out that while the models themselves may be free, “the infrastructure needed to run the biggest models may end up costing the same or more than licensing a commercial model over the cloud. If you spend more money and get a worse model, it makes no sense.”

His recommendation is for the military to focus its primary efforts on the highly capable AI platforms offered by Microsoft, Amazon, and Google through secure cloud networks developed specifically for sensitive government work.
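Chaillan’s cost argument can be made concrete with a simple break-even calculation. The sketch below is illustrative only: every dollar figure is a hypothetical placeholder, not a number from any vendor or from the Pentagon, and the function names are invented for this example.

```python
from typing import Optional


def self_hosted_cost(hardware: int, monthly_ops: int, months: int) -> int:
    """Total cost of running a model on owned hardware for a given period."""
    return hardware + monthly_ops * months


def cloud_cost(monthly_license: int, months: int) -> int:
    """Total cost of licensing a commercial cloud model for the same period."""
    return monthly_license * months


def break_even_month(hardware: int, monthly_ops: int, monthly_license: int) -> Optional[int]:
    """First month in which self-hosting is no more expensive than the cloud.

    Returns None when running costs alone match or exceed the license fee,
    in which case self-hosting never catches up.
    """
    monthly_saving = monthly_license - monthly_ops
    if monthly_saving <= 0:
        return None
    # Ceiling division using integers only.
    return -(-hardware // monthly_saving)


# Hypothetical figures: $600k of servers, $20k/month to run them,
# versus a $45k/month commercial license.
print(break_even_month(600_000, 20_000, 45_000))  # 24
# If running costs exceed the license fee, there is no break-even point.
print(break_even_month(600_000, 50_000, 45_000))  # None
```

Under these made-up numbers self-hosting pays for itself only after two years, and not at all once running costs exceed the license fee. That second case is the scenario Chaillan warns about.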

On the other side of the argument, experts and suppliers contend that relying on closed, cloud-based models introduces unacceptable risks and limitations. Pete Warden, who runs the transcription technology company Moonshine, points to recent geopolitical events as a cautionary tale. He argues that defense leaders have grown wary of big tech dependency after seeing how Elon Musk used his Starlink satellite network to influence government leaders.

“Independence from suppliers is key,” Warden insists.

This sentiment is echoed by William Marcellino, who develops AI applications for the research group RAND. He highlights that open models, which can be more easily controlled and customized, are essential for niche military projects. For example, a general commercial model might struggle to translate materials for influence operations into specific regional dialects with the necessary precision and cultural nuance. An open model can be fine-tuned on local data to handle this task far more reliably. “It’s good to have choices,” he concludes.

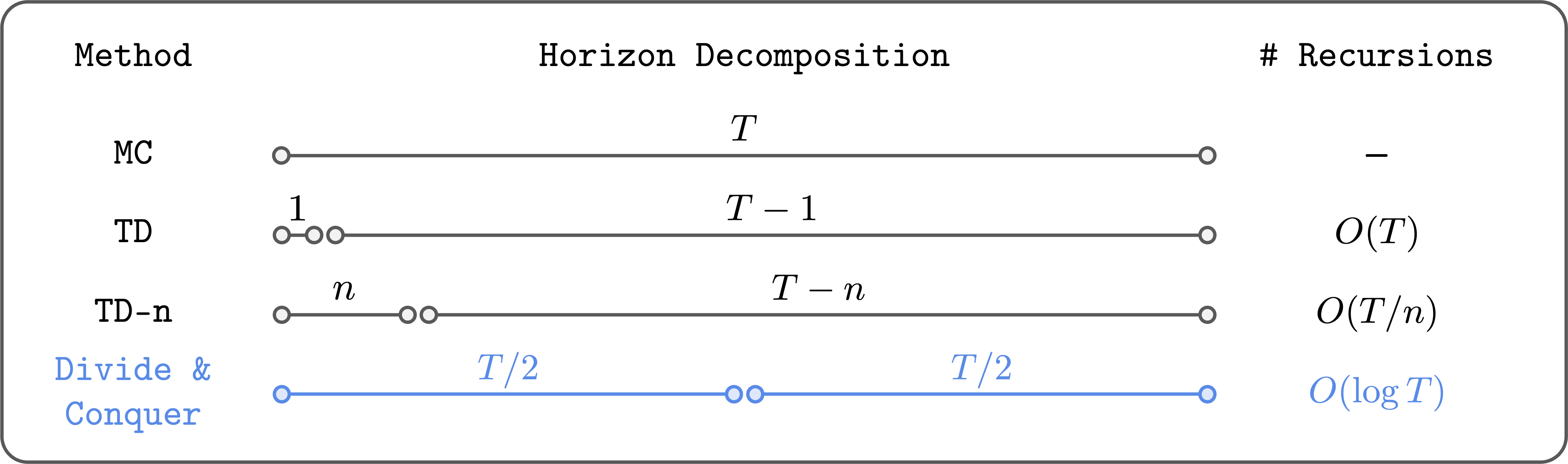

This strategic dilemma can be summarized in a direct comparison:

| Feature | Open-Weight Models (e.g., gpt-oss, Llama) | Closed-Source Cloud Models (e.g., GPT-4) |
|---|---|---|
| Control & Sovereignty | High. Full control over the model and data. Can be run on air-gapped, sovereign hardware. No reliance on external providers. | Low. Dependent on a third-party company for access, updates, and uptime. Data must be sent to their servers. |
| Customizability | High. Can be extensively fine-tuned on specific, proprietary data for specialized tasks and domains. | Limited. Customization is often restricted to API-level adjustments and cannot be trained on highly sensitive, classified data. |
| Security | Very High. Ideal for air-gapped environments, eliminating remote attack vectors. Data never leaves the secure perimeter. | Moderate to High. Relies on the security of the provider’s government cloud, which is robust but still a potential target. |
| Performance | Variable. Generally lags behind the absolute state-of-the-art but can be highly optimized for narrow tasks. | State-of-the-Art. Typically offers the highest general performance, accuracy, and reasoning capabilities. |
| Operational Cost | Potentially High. Requires significant investment in local computing infrastructure, maintenance, and expert personnel. | Predictable. Based on a licensing or usage-based fee structure, with infrastructure costs managed by the provider. |

The Pentagon’s Broader AI Strategy

The US military is not betting on a single solution. Instead, it is pursuing a diversified strategy to harness the power of AI from across the industry. Earlier this year, the Pentagon struck a series of one-year deals, worth up to $200 million each, with a roster of top AI companies, including OpenAI, Elon Musk’s xAI, Anthropic, and Google.

The objective of these partnerships is to rapidly prototype AI systems for a wide range of defense purposes, from enhancing intelligence analysis to automating war-fighting tools. OpenAI’s release of an open-weight model significantly broadens the options available within this framework, giving the Pentagon a powerful new tool for projects that demand local, secure deployment.

The strategic value of open models becomes even clearer in forward-deployed scenarios where connectivity is unreliable or nonexistent. Kyle Miller, a research analyst at Georgetown University’s Center for Security and Emerging Technology, notes that these models are particularly valuable for AI systems running directly on drones or satellites. In these edge-computing situations, the ability to make decisions in real-time without “phoning home” to a cloud server can be the difference between mission success and failure. Open models offer the military “a degree of accessibility, control, customizability, and privacy that is simply not available with closed models,” Miller says.

Charting the Future of AI in Modern Warfare

OpenAI’s re-embrace of the open-source ethos, even in a limited “open-weight” capacity, is a landmark event for the defense industry. It injects a new level of competition and capability into a market that has long been hungry for secure, customizable AI solutions. While the initial performance of gpt-oss may not yet lead the pack, the mere presence of a model from the creator of ChatGPT provides a powerful new building block for military technologists.

The path forward for AI in defense will not be a monolithic one. The future is hybrid. Elite, cloud-based models will likely continue to dominate tasks that require raw analytical power and can be performed within secure data centers. Simultaneously, a growing ecosystem of open-weight models will be fine-tuned and deployed to the tactical edge—on submarines, in cockpits, and with soldiers on the ground.

This dual approach allows the military to balance the unparalleled power of proprietary AI with the non-negotiable requirements of security, control, and operational independence. As these technologies continue to evolve, the ability to choose the right model for the right mission will become a defining feature of modern warfare, ensuring that the nation’s defense capabilities remain both potent and resilient in an increasingly complex world.
