Amazon Is Using Specialized AI Agents for Deep Bug Hunting

In the relentless cat-and-mouse game of cybersecurity, the playing field is being dramatically reshaped by artificial intelligence. As generative AI accelerates the speed of software development, it simultaneously equips digital adversaries with more powerful tools for both financially motivated and state-backed attacks. For technology giants with sprawling digital ecosystems, this creates a monumental challenge: security teams must review an ever-expanding volume of code while fending off increasingly sophisticated and rapid threats.

In response to this escalating pressure, Amazon has developed an internal system, born from a company hackathon, that is now a cornerstone of its defensive strategy. The system, known as Autonomous Threat Analysis (ATA), uses specialized AI agents to proactively hunt for vulnerabilities, analyze their potential impact, and engineer defenses before malicious actors get the chance to strike. It marks a shift from reactive defense toward proactive, AI-driven security at scale.

The Genesis of a Cyber-Sentinel: From Hackathon to High-Stakes Security

Every revolutionary idea has an origin story, and ATA’s began not in a top-secret research lab, but during an internal Amazon hackathon in August 2024. A team of engineers, including Michael Moran, proposed a system to tackle two of the most persistent problems in modern cybersecurity. Steve Schmidt, Amazon’s Chief Security Officer, articulates these challenges with stark clarity.

“The initial concept was aimed to address a critical limitation in security testing—limited coverage and the challenge of keeping detection capabilities current in a rapidly evolving threat landscape,” Schmidt explains. “Limited coverage means you can’t get through all of the software or you can’t get to all of the applications because you just don’t have enough humans. And then it’s great to do an analysis of a set of software, but if you don’t keep the detection systems themselves up to date with the changes in the threat landscape, you’re missing half of the picture.”

The problem is one of scale and speed. For a company like Amazon, with millions of lines of code being written and deployed daily across countless services, the idea of comprehensive manual security testing is a fantasy. Human security teams, no matter how skilled, can only cover a fraction of the potential attack surface. Simultaneously, the threat landscape evolves at a dizzying pace; a new exploit or technique discovered by hackers today could be weaponized and deployed globally by tomorrow. Traditional security methods struggle to keep up.

The hackathon project aimed to solve this by leveraging AI not as a single, all-knowing oracle, but as a diverse team of specialists—an idea that has since blossomed into the sophisticated ATA system that operates today. It has become a crucial tool, transforming the company’s approach to platform security from the inside out.

The Architecture of Autonomy: A Symphony of Specialized AI Agents

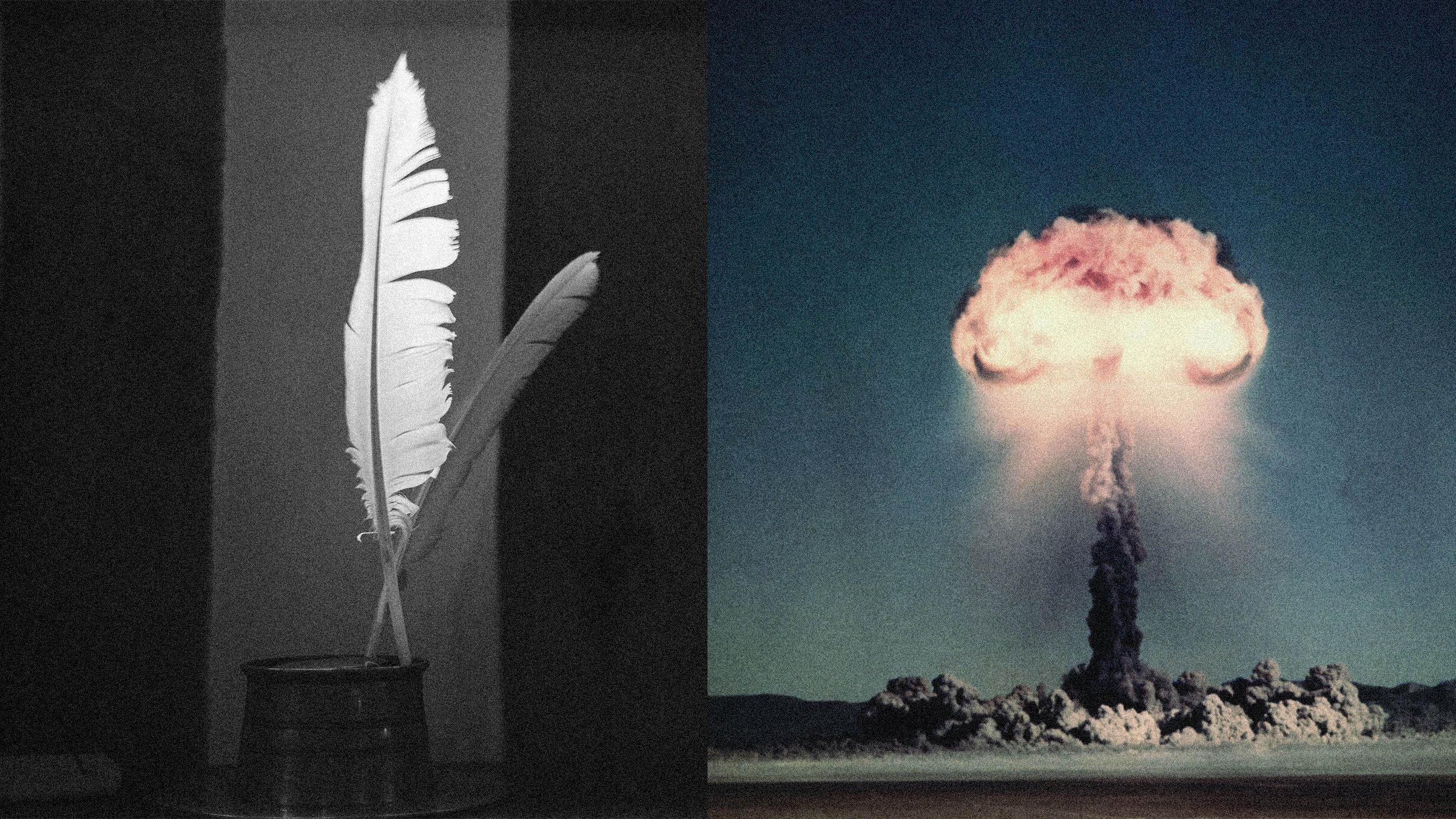

The true innovation behind ATA lies in its core architecture. Instead of relying on a single, monolithic AI model to conduct security analysis, Amazon engineered a multi-agent system. This system mimics the structure and methodology of elite human cybersecurity teams by dividing its AI agents into two competing groups: a Red Team and a Blue Team.

- The Red Team AI Agents (The Attackers): These agents are programmed to think and act like adversaries. Their sole purpose is to probe, attack, and find weaknesses within Amazon’s systems. They don’t just run through a checklist of known vulnerabilities; they creatively combine different attack techniques, search for novel ways to exploit code, and relentlessly test the boundaries of the digital infrastructure. They are the offense, constantly seeking to breach the defenses.

- The Blue Team AI Agents (The Defenders): Tasked with defense, these agents work to counter the Red Team’s efforts. They analyze the telemetry and logs generated by the Red Team’s simulated attacks, identify the methods being used, and then design and propose effective countermeasures. Their work involves developing new detection capabilities, suggesting code remediations, and creating security controls to plug the holes discovered by their offensive counterparts.

This adversarial dynamic is the engine of ATA’s success. The two teams of AI agents are locked in a continuous cycle of attack and defense, playing out security scenarios at machine speed. This competition quickly exposes weaknesses and drives the creation of robust, battle-tested security solutions faster and with broader coverage than a human team could achieve alone.
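To make the attack-and-defense cycle concrete, here is a minimal Python sketch of one way such a loop could be structured. Amazon has not published ATA’s internals, so every name below (`Detection`, `run_rounds`, the token-matching rule) is hypothetical, and the Red Team’s techniques are a fixed list rather than freshly generated variants. In each round, any technique whose telemetry evades every existing detection prompts the Blue side to add a new rule; the loop stops once a round produces no evasions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection rule: fires when every token appears in an event."""
    name: str
    required_tokens: frozenset[str]

    def matches(self, event: str) -> bool:
        return all(tok in event for tok in self.required_tokens)


@dataclass
class RoundLog:
    evaded: list[str] = field(default_factory=list)
    new_detections: list[str] = field(default_factory=list)


def run_rounds(
    techniques: dict[str, str], rounds: int = 3
) -> tuple[list[Detection], list[RoundLog]]:
    """Red side: techniques (name -> telemetry it produces).
    Blue side: adds a rule for each technique that slipped past current rules."""
    detections: list[Detection] = []
    history: list[RoundLog] = []
    for _ in range(rounds):
        log = RoundLog()
        for name, telemetry in techniques.items():
            # Red team: does this technique evade every current detection?
            if not any(d.matches(telemetry) for d in detections):
                log.evaded.append(name)
                # Blue team: derive a naive rule from the observed telemetry.
                tokens = frozenset(telemetry.split()[:2])
                detections.append(Detection(f"detect-{name}", tokens))
                log.new_detections.append(f"detect-{name}")
        history.append(log)
        if not log.evaded:
            break  # Nothing evaded this round, so the loop has converged.
    return detections, history
```

With two techniques, the first round records both as evasions and adds a rule for each; the second round finds nothing new and the loop ends. A real system would have the Red side generate new variants every round, which is what keeps this kind of competition from converging too early.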

Building a Digital Twin: The Power of High-Fidelity Environments

For any security testing to be meaningful, it must accurately reflect the environment it is designed to protect. A vulnerability discovered in a sterile, isolated lab may not be exploitable in a complex, hardened production system. Amazon’s engineers understood this critical distinction and built ATA to operate within “high-fidelity” testing environments.

These environments are not simple sandboxes; they are deeply realistic digital twins of Amazon’s actual production systems. They are designed to mirror the complex interplay of services, configurations, and data flows that exist in the live infrastructure. This commitment to realism provides two profound advantages:

- Relevant and Actionable Insights: Because the AI agents are operating in a near-perfect replica of the real world, their findings are immediately relevant. The vulnerabilities they discover and the defenses they propose are not theoretical; they are directly applicable to the production environment, eliminating the guesswork often involved in translating lab results into real-world security patches.

- Authentic Telemetry: The agents both ingest and produce real system telemetry. This means the Red Team’s attacks generate verifiable logs just as a real attack would, and the Blue Team’s proposed defenses can be validated against this authentic data to confirm their effectiveness.

This approach ensures that ATA’s work is grounded in reality, producing security enhancements that are practical, effective, and ready for deployment.

The Quest for Truth: Architecting Against AI Hallucinations

One of the most significant concerns with modern generative AI is its tendency to “hallucinate”—to generate plausible-sounding but factually incorrect or nonsensical information. In the high-stakes world of cybersecurity, a hallucinated vulnerability or a faulty security recommendation could have disastrous consequences, wasting valuable time and potentially introducing new risks.

Amazon’s team designed ATA from the ground up to address this problem. The system’s architecture enforces a strict, evidence-based workflow that, according to Schmidt, makes “hallucinations architecturally impossible.” The key principle behind it is verifiability.

Every action and claim made by an AI agent must be backed by concrete, observable proof from the high-fidelity environment.

- When a Red Team agent claims to have found a new attack technique, it must provide time-stamped logs of the actual commands it executed to prove its success.

- When a Blue Team agent proposes a new detection rule, it must use real telemetry from a simulated attack to demonstrate that the rule effectively identifies and blocks the threat.

This relentless demand for verifiable proof acts as a built-in “hallucination management” system. Every output must be backed by evidence that can be checked, not merely sound plausible, which builds trust in the system’s autonomous findings and lets human engineers act on its recommendations with confidence.
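The verification principle can be sketched as two gatekeeping checks. This is a minimal illustration under assumed names (`LogLine`, `AttackClaim`, `DetectionClaim`, `verify_attack`, `verify_detection`), not ATA’s actual schema. The central design choice is that an agent’s cited evidence is compared against telemetry the environment collected independently, so a claim backed by invented log lines is rejected, and a detection rule is accepted only if it fires on the recorded telemetry of an attack that itself passed verification.

```python
import re
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LogLine:
    """One command as recorded by the test environment (hypothetical schema)."""
    timestamp: datetime
    command: str


@dataclass(frozen=True)
class AttackClaim:
    """Red-team claim that a technique ran, with the log lines it cites."""
    technique: str
    evidence: tuple[LogLine, ...]


@dataclass(frozen=True)
class DetectionClaim:
    """Blue-team claim that a regex rule catches a specific attack."""
    rule: str
    attack: AttackClaim


def verify_attack(claim: AttackClaim, env_log: set[LogLine]) -> bool:
    # Evidence must be non-empty, and every cited line must already exist in the
    # environment's own telemetry, so an agent cannot cite lines it invented.
    return bool(claim.evidence) and all(line in env_log for line in claim.evidence)


def verify_detection(claim: DetectionClaim, env_log: set[LogLine]) -> bool:
    # A rule counts only if its target attack is verified AND the rule
    # actually fires on that attack's recorded telemetry.
    if not verify_attack(claim.attack, env_log):
        return False
    pattern = re.compile(claim.rule)
    return any(pattern.search(line.command) for line in claim.attack.evidence)
```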

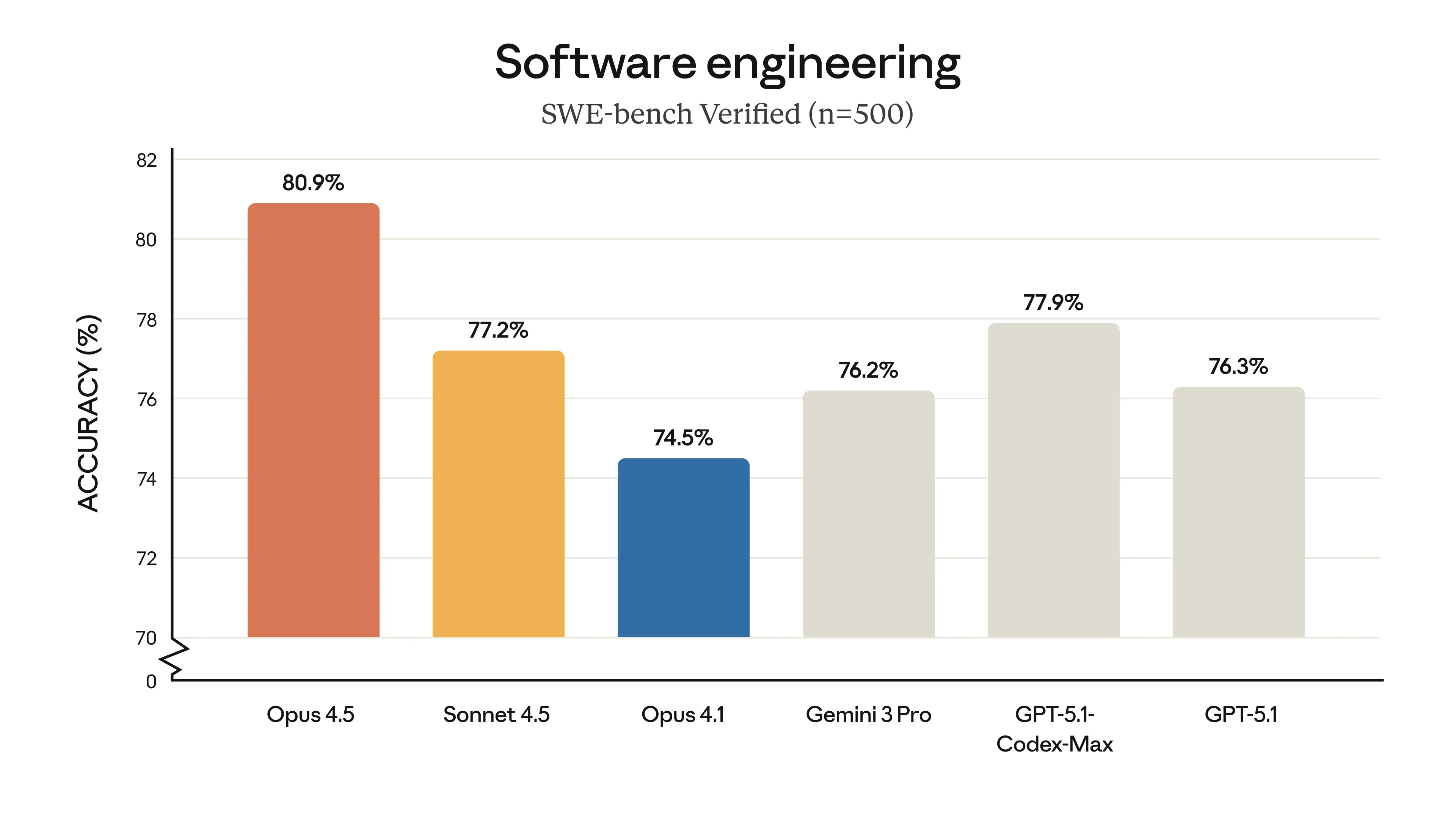

A Case Study in Speed and Efficacy: The Python “Reverse Shell” Challenge

To understand the practical impact of ATA, consider a real-world example of its application. The system was tasked with analyzing “reverse shell” techniques in Python—a common method used by hackers to gain remote control over a compromised device by tricking it into connecting back to the attacker’s computer.

A human team might spend days or weeks researching known reverse shell methods and testing them one by one. ATA, however, approached the problem at an entirely different scale and speed. Within hours, the system’s Red Team agents had not only tested existing techniques but had also autonomously discovered new, previously unknown variations of reverse shell tactics.

The Blue Team agents immediately analyzed these novel threats and proposed a suite of new detection capabilities for Amazon’s defense systems. The result was a set of protections that proved to be 100 percent effective against the identified techniques. This demonstrates a dramatic acceleration in the security lifecycle, condensing what could have been a lengthy research project into a few hours of autonomous work.
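Amazon has not published the detections ATA produced. As a purely illustrative stand-in, the sketch below shows the general shape of a command-line heuristic for Python reverse shells, along with the arithmetic behind a figure like “100 percent effective”: the fraction of known malicious samples a rule flags. The regular expressions and the function names `looks_like_python_reverse_shell` and `detection_rate` are assumptions made for this example.

```python
import re
from typing import Callable

# Hypothetical heuristic: a Python process that opens a socket AND wires it to
# a shell (fd redirection, pty, or subprocess). Production rules are far richer.
PY_INTERPRETER = re.compile(r"\bpython[0-9.]*\b")
SOCKET_USE = re.compile(r"socket\.socket\(|\.connect\(")
SHELL_HOOKUP = re.compile(r"os\.dup2\(|pty\.spawn\(|subprocess\.")


def looks_like_python_reverse_shell(cmdline: str) -> bool:
    """Flag a command line that runs Python, opens a socket, and hooks up a shell."""
    return bool(
        PY_INTERPRETER.search(cmdline)
        and SOCKET_USE.search(cmdline)
        and SHELL_HOOKUP.search(cmdline)
    )


def detection_rate(samples: list[str], rule: Callable[[str], bool]) -> float:
    """Fraction of samples the rule flags; 1.0 corresponds to 100 percent."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(1 for s in samples if rule(s)) / len(samples)
```

A rule that flags every sample in a fixed set scores 1.0, which is what a 100 percent result against identified techniques means; it says nothing by itself about variants outside that set. The same function run over benign commands, such as `python3 -m http.server 8000`, gives the false-positive rate, which matters just as much before a rule ships.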

| Feature | Traditional Security Analysis | Amazon’s Autonomous Threat Analysis (ATA) |
|---|---|---|
| Speed | Days or weeks | Hours |
| Scope | Limited to known techniques and human creativity | Generates novel attack variations at machine scale |
| Efficacy | Dependent on individual analyst skill; potential for human error | Proposed detections were 100% effective against the identified techniques in the case study |
| Process | Manual testing, code review, report generation | Autonomous Red/Blue team competition, automatic detection proposal |
| Validation | Manual verification, potential for false positives | Built-in, evidence-based validation using real telemetry |

Human in the Loop: Augmenting, Not Replacing, Expertise

Despite its advanced autonomous capabilities, ATA is not designed to replace Amazon’s human security experts. Instead, it operates on a “human in the loop” methodology, serving as an incredibly powerful force multiplier for the security team. The system handles the massive volume of rote, repetitive, and time-consuming tasks involved in threat analysis, freeing up its human counterparts to focus on more complex, strategic challenges.

ATA autonomously identifies a weakness and proposes a fix, but a human engineer provides the final review and approval before any changes are implemented in Amazon’s live security systems. This partnership combines the speed, scale, and computational power of AI with the nuanced judgment, intuition, and ethical oversight of human experts.
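The approval step can be modeled as a small state machine in which deployment is impossible without a recorded human decision. The following is a hedged sketch with hypothetical names (`ProposedChange`, `Status`, `deploy`). It does not describe how Amazon actually gates changes; it only illustrates the “propose, review, then deploy” ordering described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    DEPLOYED = "deployed"


@dataclass
class ProposedChange:
    """A hypothetical agent-proposed fix that waits for a human decision."""
    description: str
    status: Status = Status.PROPOSED
    reviewer: Optional[str] = None

    def _decide(self, reviewer: str, outcome: Status) -> None:
        # A change can be reviewed exactly once, while still only proposed.
        if self.status is not Status.PROPOSED:
            raise ValueError(f"change is already {self.status.value}")
        self.status, self.reviewer = outcome, reviewer

    def approve(self, reviewer: str) -> None:
        self._decide(reviewer, Status.APPROVED)

    def reject(self, reviewer: str) -> None:
        self._decide(reviewer, Status.REJECTED)


def deploy(change: ProposedChange, live_rules: list[str]) -> None:
    # The only path into production: a named human must have approved first.
    if change.status is not Status.APPROVED:
        raise PermissionError("human approval required before deployment")
    live_rules.append(change.description)
    change.status = Status.DEPLOYED
```

Keeping the reviewer’s name on the change object means every deployed rule carries a record of who signed off on it.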

Michael Moran, one of the engineers who first envisioned the system, highlights how it enhances his role rather than diminishes it.

“I get to come in with all the novel techniques and say, ‘I wonder if this would work?’ And now I have an entire scaffolding and a lot of the base stuff is taken care of for me,” Moran says. “It makes my job way more fun but it also enables everything to run at machine speed.”

This sentiment is echoed by Schmidt, who sees ATA as a tool for empowerment. “AI does the grunt work behind the scenes. When our team is freed up from analyzing false positives, they can focus on real threats,” he states. “I think the part that’s most positive about this is the reception of our security engineers, because they see this as an opportunity where their talent is deployed where it matters most.”

The Future is Autonomous: What’s Next for ATA and Cybersecurity?

The success of ATA in proactively identifying and mitigating threats has paved the way for its next evolutionary step: real-time incident response. Schmidt says the goal is to begin using ATA to help manage and remediate actual attacks on Amazon’s systems as they happen.

Imagine a scenario where a novel attack begins to unfold. Instead of a human team scrambling to manually analyze logs and trace the intrusion, ATA could be unleashed. Within minutes, its agents could identify the attack vector, perform variant analysis to see whether the same weakness exists elsewhere across Amazon’s vast infrastructure, and propose a comprehensive remediation and containment strategy to human responders. This could cut response times from hours to minutes, substantially reducing the potential damage of a breach.
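Variant analysis, as described in this scenario, amounts to taking the weakness behind one confirmed intrusion and asking which other systems share it. A minimal sketch, assuming a hypothetical inventory of service records and a hand-written predicate for the weakness (none of these names come from Amazon), might look like this:

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class ServiceConfig:
    """Hypothetical inventory record; a real fleet tracks far more attributes."""
    name: str
    allows_outbound: bool
    has_interpreter: bool


def same_weakness(cfg: ServiceConfig) -> bool:
    # Hypothetical weakness from an incident: the host can run an interpreter
    # AND open arbitrary outbound connections, a precondition for a reverse shell.
    return cfg.has_interpreter and cfg.allows_outbound


def variant_analysis(
    inventory: Iterable[ServiceConfig],
    predicate: Callable[[ServiceConfig], bool],
    already_known: set[str],
) -> list[str]:
    """Names of other services showing the same weakness, sorted for stable output."""
    return sorted(
        c.name for c in inventory if predicate(c) and c.name not in already_known
    )
```

If `checkout` were the service under attack, passing `{"checkout"}` as `already_known` returns the remaining services with the same combination of settings, giving responders a short list of places to check next.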

The development of ATA signals a broader shift in the cybersecurity landscape. The model of using specialized, competing AI agents within high-fidelity environments could become the new industry standard for large-scale enterprise security. As attackers continue to incorporate AI into their own toolkits, defensive systems like ATA will become not just advantageous, but essential. It represents a critical advancement in the ongoing AI arms race, giving defenders a powerful new weapon to protect the digital world.

Redefining the Digital Battlefield

Amazon’s Autonomous Threat Analysis system is more than just an innovative tool; it’s a new philosophy for cybersecurity. By combining the adversarial dynamics of Red and Blue teams with the speed and scale of specialized AI agents, all grounded in a verifiable, evidence-based framework, Amazon has created a system that is proactive, predictive, and perpetually learning.

It tackles the core challenges of modern security—scale, speed, and complexity—by automating the grunt work and empowering human experts to focus on the most critical threats. What started as a creative idea at a hackathon has evolved into a sophisticated cyber-sentinel, demonstrating that in the future of cybersecurity, the best defense may just be a brilliant, autonomous offense.
