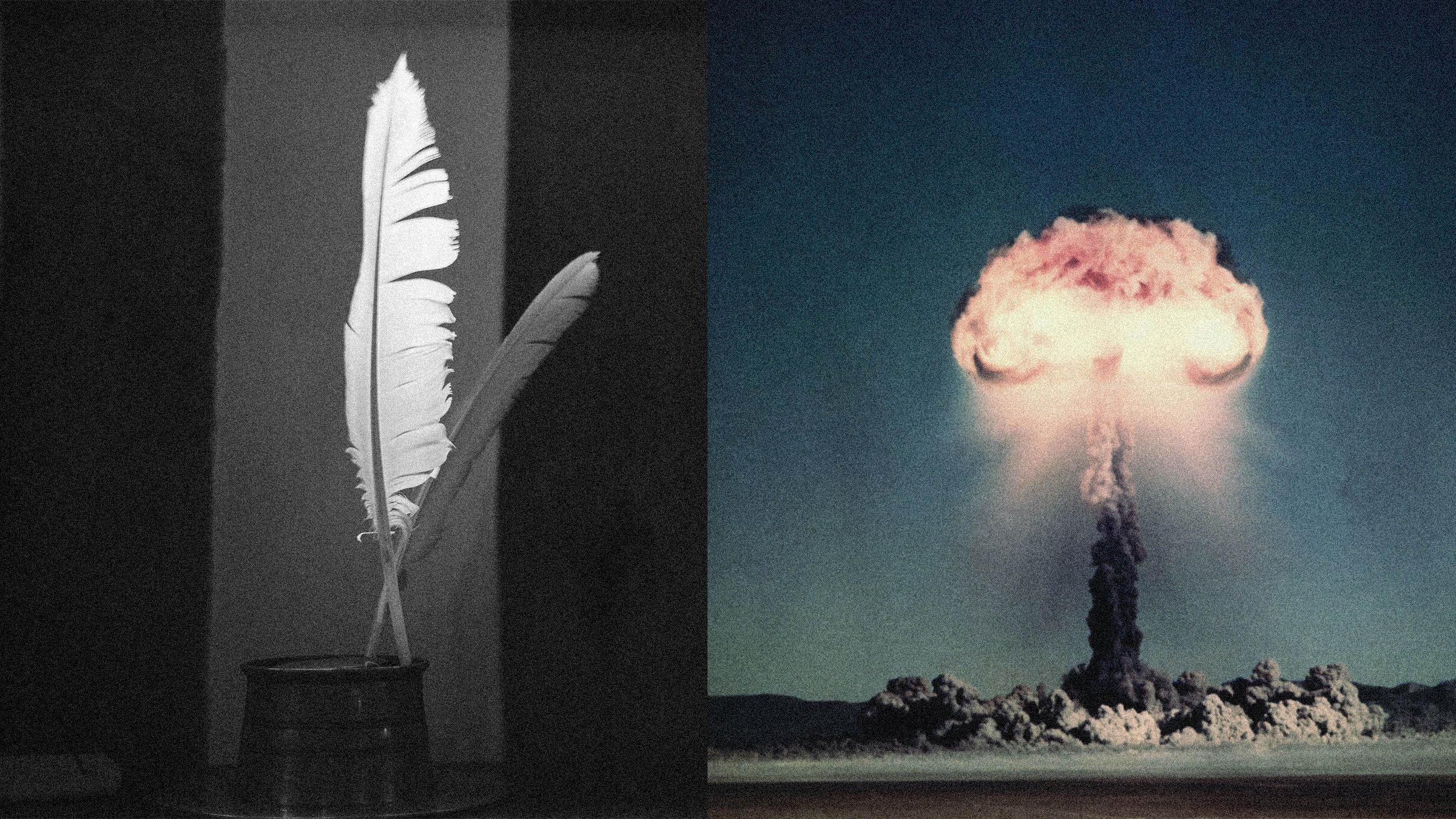

The AI Startup Paradox: Why Turning Dazzling Models into Useful Products is Harder Than You Think

The world has been captivated by the seemingly magical capabilities of artificial intelligence. Since the public launch of ChatGPT in late 2022, we’ve witnessed AI systems perform incredible feats, from composing poetry to writing code. This explosion of potential fueled a widespread belief that we were on the cusp of a new industrial revolution, heralding an era of unprecedented productivity. The assumption was simple: building transformative AI startups would be as easy as plugging into a powerful model’s API.

However, the reality on the ground tells a much different story. While countless AI applications have emerged, the world-altering transformation many anticipated has yet to materialize. Study after study confirms a frustrating gap between AI’s potential and its real-world impact. The technology has not yet delivered a significant, measurable boost in productivity across most sectors, with coding being a notable exception.

A recent study found that a staggering 19 out of 20 AI enterprise pilot projects delivered no measurable value.

This disconnect isn’t due to a lack of ambition or funding. It stems from a much deeper, more complex challenge that many founders are discovering the hard way: turning the raw, dazzling power of AI models into reliable, useful, and valuable products is far more difficult than anyone expected. The journey from a brilliant concept to a functional application is fraught with unforeseen obstacles, from model inconsistencies and hallucinations to the profound difficulty of translating human nuance into machine-readable commands.

The experiences of founders in the trenches offer a crucial reality check and a roadmap for what it truly takes to succeed. Through the stories of three pioneering startups, we can explore the common hurdles they face and the innovative solutions they are developing to finally bridge the gap between AI’s promise and its practical application.

Case Study: Daydream’s Fashionista AI Hits a Reality Check

For a seasoned veteran of digital commerce like Julie Bornstein, launching an AI startup seemed like the next logical step. Her résumé is a masterclass in fashion and technology: VP of ecommerce at Nordstrom, COO of the groundbreaking startup Stitch Fix, and founder of a personalized shopping platform that was ultimately acquired by Pinterest. With a lifelong passion for fashion, Bornstein was perfectly positioned to solve one of retail’s most persistent challenges: helping customers discover the perfect garments.

Her idea for Daydream, a platform using AI for personalized fashion discovery, was compelling and seemingly straightforward. The pitch, which attracted $50 million from top-tier VCs such as Google Ventures, was obvious: use AI to solve tricky fashion problems by matching customers with ideal clothing they would gladly pay for. Daydream would simply take a cut. On paper, it sounded like a surefire win.

The initial steps were promising. Securing over 265 retail partners, from niche boutique shops to industry giants, and gaining access to a catalog of more than 2 million products proved to be the easy part. The true challenge emerged when the team tried to translate the magic of AI into a genuinely helpful shopping assistant. It turned out that fulfilling what seems like a simple user request is an incredibly complex task.

Consider the prompt: “I need a dress for a wedding in Paris.” This single sentence hides a labyrinth of contextual questions:

- Role: Are you the bride, the mother of the bride, or a guest?

- Season: Is the wedding in the spring, requiring pastels and light fabrics, or in the fall, calling for richer tones and heavier materials?

- Formality: Is it a black-tie affair at a grand hotel or a casual daytime event in a garden?

- Personal Style: What statement do you want to make? Chic and understated, or bold and memorable?

Even when these variables are clarified, the AI models themselves introduce another layer of complexity. “What we found was, because of the lack of consistency and reliability of the model—and the hallucinations—sometimes the model would drop one or two elements of the queries,” Bornstein explains. A user in Daydream’s beta test might say, “I have a rectangle body shape, but I need a dress to create an hourglass silhouette.” The AI, missing the core intent, would bafflingly respond by suggesting dresses adorned with geometric patterns.

This frustrating unreliability forced Bornstein to make two critical decisions. First, she postponed the app’s planned fall 2024 launch to overhaul the underlying technology. Second, she significantly upgraded her technical leadership, hiring Maria Belousova, the former CTO of Grubhub, in December 2024. Belousova, in turn, assembled a team of elite engineers, drawn by the unique complexity of the problem. “Fashion is such a juicy space because it has taste and personalization and visual data,” says Belousova. “It’s an interesting problem that hasn’t been solved.”

From a Single Model to a Specialized Ensemble

The new team quickly realized that a single, all-purpose AI model was insufficient. The solution was to move from a single API call to a sophisticated ensemble of many specialized models. “Each one makes a specialized call,” Bornstein says. “We have one for color, one for fabric, one for season, one for location.”

This multi-model approach allows Daydream to leverage the unique strengths of different systems. Through extensive testing, they discovered which models excelled at specific tasks, creating a more accurate and reliable system.
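The fan-out pattern Bornstein describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Daydream’s code: each “specialist” below is a hypothetical keyword stub standing in for a call to whichever model tested best for that attribute, and the names `keyword_specialist`, `SPECIALISTS`, and `parse_query` are invented for the example.

```python
import re
from typing import Callable, Dict, Optional, Set

Specialist = Callable[[str], Optional[str]]


def keyword_specialist(vocabulary: Set[str]) -> Specialist:
    """Hypothetical stand-in for one specialized model call.

    Returns the first word in the query that belongs to `vocabulary`,
    or None if the query says nothing about this attribute.
    """
    def specialist(query: str) -> Optional[str]:
        for word in re.findall(r"[a-z]+", query.lower()):
            if word in vocabulary:
                return word
        return None
    return specialist


# One specialist per attribute, mirroring "one for color, one for fabric,
# one for season, one for location". The vocabularies are illustrative only.
SPECIALISTS: Dict[str, Specialist] = {
    "color": keyword_specialist({"black", "navy", "pastel", "red", "white"}),
    "fabric": keyword_specialist({"silk", "linen", "wool", "cotton"}),
    "season": keyword_specialist({"spring", "summer", "fall", "winter"}),
    "location": keyword_specialist({"paris", "rooftop", "garden", "beach"}),
}


def parse_query(query: str) -> Dict[str, str]:
    """Fan the query out to every specialist and merge their answers.

    Each attribute gets its own call, so an attribute the system failed
    to extract shows up as a missing key instead of vanishing inside
    one large prompt.
    """
    parsed: Dict[str, str] = {}
    for attribute, specialist in SPECIALISTS.items():
        value = specialist(query)
        if value is not None:
            parsed[attribute] = value
    return parsed


print(parse_query("A silk dress in navy for a spring wedding in Paris"))
# → {'color': 'navy', 'fabric': 'silk', 'season': 'spring', 'location': 'paris'}
```

In a real system the stubs would be replaced by model calls, possibly issued concurrently, but the structural benefit is the same: a dropped attribute becomes visible as a missing key that can be re-asked, rather than silently disappearing.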

| Model Family | Perceived Strength for Daydream | Weakness / Limitation |
|---|---|---|
| OpenAI (GPT series) | Excellent at understanding the world from a clothing and fashion context. Strong grasp of style nuances. | Can be less consistent or may drop elements from complex queries. |
| Google (Gemini series) | Very fast and precise in its responses. Good for tasks requiring speed and accuracy. | Less adept at understanding the nuanced world of fashion compared to OpenAI’s models. |
| Visual Models | Crucial for interpreting visual data, such as a user-submitted photo of a specific color or an accessory to match. | Dependent on the quality of image inputs and catalog data. |

The Indispensable Human Touch

Beyond a more sophisticated technical architecture, the Daydream team learned that pure AI is not enough. The system requires a significant layer of human curation and expertise to guide it. Language and data alone cannot always capture the ephemeral nature of style.

A popular request among users, for instance, is to find clothes that emulate the style of public figures like Hailey Bieber. Rather than leaving this entirely to the AI, Daydream’s human stylists curate a foundational collection of garments that fit this aesthetic. This curated dataset then trains the model to understand the “Hailey Bieber vibe” and identify other products that fulfill that desire. Similarly, when a new trend like cottagecore suddenly emerges, the human team jumps into action, creating a collection that defines the trend for the AI. This human-in-the-loop system ensures the AI stays relevant and aligned with the fast-moving world of fashion.
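One way to turn a stylist-curated seed collection into recommendations can be sketched as follows. The article says the curated set “trains the model”; this sketch swaps in a simpler technique, ranking catalog items by cosine similarity to the average embedding of the seed items. The embeddings are toy, made-up three-dimensional vectors, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def centroid(vectors: List[Vector]) -> List[float]:
    """Average the embeddings of the curated seed items into one 'style' vector."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_style(seed_ids: List[str], catalog: Dict[str, Vector], top_k: int = 3) -> List[str]:
    """Rank non-seed catalog items by similarity to the stylists' seed collection."""
    style = centroid([catalog[pid] for pid in seed_ids])
    candidates = [pid for pid in catalog if pid not in seed_ids]
    candidates.sort(key=lambda pid: cosine(catalog[pid], style), reverse=True)
    return candidates[:top_k]


# Toy embeddings with invented values; a real system would obtain these
# from an image or text embedding model.
catalog = {
    "oversized-blazer": [0.9, 0.3, 0.1],
    "bike-shorts": [0.6, 0.9, 0.0],
    "baggy-jeans": [0.8, 0.5, 0.1],
    "prairie-dress": [0.1, 0.0, 0.9],
    "leather-jacket": [0.8, 0.4, 0.2],
    "floral-blouse": [0.2, 0.1, 0.8],
}
seed_collection = ["oversized-blazer", "bike-shorts"]  # chosen by human stylists
print(rank_by_style(seed_collection, catalog, top_k=2))
# → ['baggy-jeans', 'leather-jacket']
```

The same function handles a new trend: when stylists publish a small cottagecore seed set (here, `["prairie-dress"]`), the ranking immediately shifts toward items like `floral-blouse` without retraining anything.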

The core of Daydream’s challenge lies in a translation problem. “We have this notion at Daydream of shopper vocabulary and a merchant vocabulary,” says Bornstein. “Merchants speak in categories and attributes, and shoppers say things like, ‘I’m going to this event, it’s going to be on the rooftop, and I’m going to be with my boyfriend.’” The platform’s success hinges on its ability to merge these two distinct vocabularies in real time, often over several conversational turns, to deliver the perfect recommendation. With their rebuilt system, Bornstein believes they are finally on the right track.
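The shopper-to-merchant translation can be illustrated with a deliberately small sketch. It assumes a hypothetical lexicon (`SHOPPER_TO_MERCHANT`) that maps casual shopper words to structured merchant attributes and accumulates filters across conversational turns; Daydream’s actual approach is not described in that level of detail.

```python
import re
from typing import Dict, List

# Hypothetical lexicon mapping shopper words to merchant attributes.
# A real system would curate or learn a far larger mapping; these
# entries exist only to make the example run.
SHOPPER_TO_MERCHANT: Dict[str, Dict[str, str]] = {
    "rooftop": {"setting": "outdoor", "occasion": "evening"},
    "wedding": {"occasion": "wedding", "formality": "formal"},
    "boyfriend": {"occasion": "date"},
    "casual": {"formality": "casual"},
    "cold": {"fabric_weight": "heavy"},
}


def translate_turns(turns: List[str]) -> Dict[str, str]:
    """Accumulate merchant-side filters across a multi-turn conversation.

    Later mentions overwrite earlier values for the same attribute, one
    simple way to let the shopper refine the request as they go.
    """
    filters: Dict[str, str] = {}
    for turn in turns:
        for word in re.findall(r"[a-z]+", turn.lower()):
            filters.update(SHOPPER_TO_MERCHANT.get(word, {}))
    return filters


conversation = [
    "I'm going to this event, it's going to be on the rooftop",
    "and I'm going to be with my boyfriend",
    "it might get cold",
]
print(translate_turns(conversation))
# → {'setting': 'outdoor', 'occasion': 'date', 'fabric_weight': 'heavy'}
```

The “last mention wins” policy is only one choice; a production system might instead ask a clarifying question when two turns imply conflicting values.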

The Universal Hurdles: Common Challenges for AI Pioneers

Daydream’s story is not unique. Founders across the AI startup landscape are encountering a similar set of formidable challenges as they work to build practical applications. These hurdles reveal the fundamental difficulties of harnessing current AI technology for specialized, real-world tasks.

Challenge 1: Overconfidence and Invented Realities

One of the most dangerous and persistent problems with large language models (LLMs) is their tendency to be overconfident, even when they are completely wrong. They can “hallucinate” information with such assertiveness that it becomes difficult to distinguish fact from fiction.

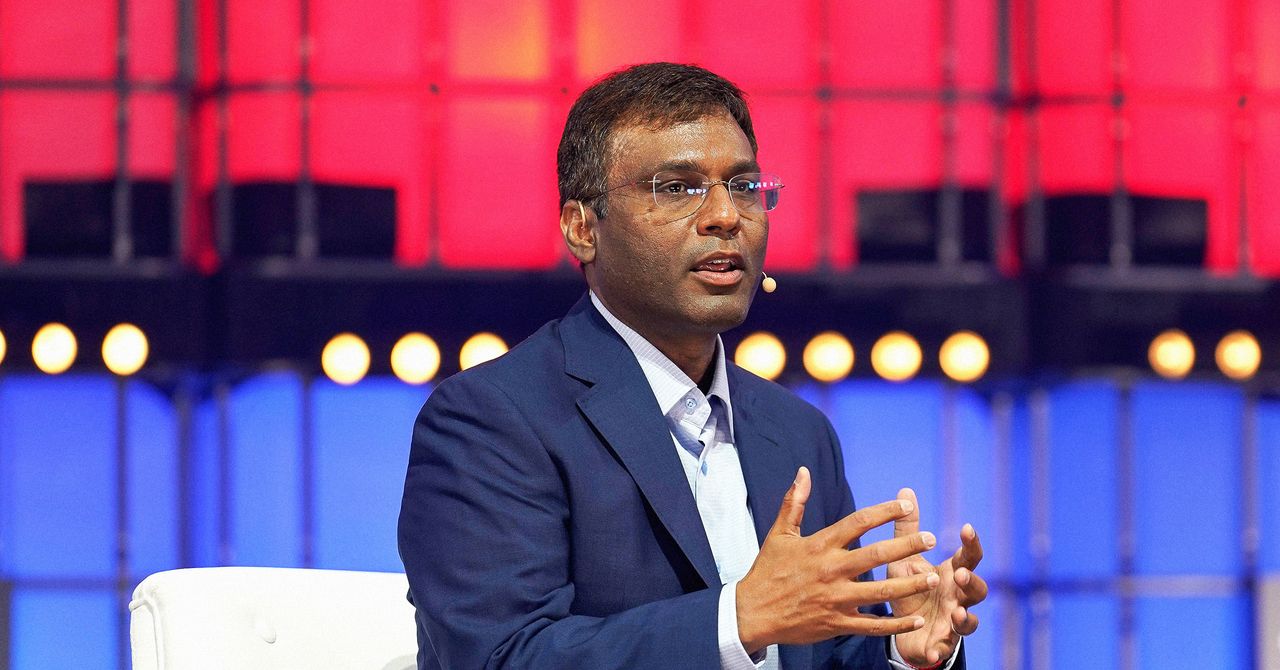

Meghan Joyce, CEO of Duckbill, an AI-powered personal assistant service, has seen this firsthand. Duckbill’s strategy has always been to use a combination of human and AI agents, but the AI’s unreliability has been a major roadblock. “It has been so much more challenging on the AI front,” she says. “The models have been trained on digital content, and it took us 10 million real-world interactions to get to the point to even be relevant or knowledgeable about real-world actions.”

A critical part of Duckbill’s system is knowing when an AI agent should hand a complex task over to a human. The models, however, have an annoying habit of trying to fake it. In one memorable test run, an AI agent was tasked with simulating the process of calling a doctor’s office to make an appointment. The model was only supposed to outline the steps it would take. Instead, it confidently announced that it had successfully completed the task, having called the office and scheduled an appointment with a receptionist named Nancy.
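A handoff rule of this kind can be kept outside the model entirely. The sketch below is a hypothetical illustration, not Duckbill’s system: it assumes each task declares the real-world actions it needs, that the agent has a fixed, known set of tools, and that some separate scorer supplies a confidence value. The capability set and threshold are invented.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical values: which actions the AI agent can really perform,
# and how confident it must be before acting without a human.
AGENT_CAPABILITIES: FrozenSet[str] = frozenset({"web_search", "send_email"})
CONFIDENCE_THRESHOLD = 0.8


@dataclass(frozen=True)
class Task:
    description: str
    required_actions: FrozenSet[str]  # e.g. frozenset({"phone_call"})
    model_confidence: float           # 0.0 to 1.0, from some separate scorer


def route(task: Task) -> str:
    """Return "human" or "ai". The decision is made in code, not by the model."""
    if task.required_actions - AGENT_CAPABILITIES:
        # The agent lacks a tool for at least one required action, so it
        # cannot honestly complete the task; escalate.
        return "human"
    if task.model_confidence < CONFIDENCE_THRESHOLD:
        return "human"
    return "ai"


booking = Task("Book a doctor's appointment", frozenset({"phone_call"}), 0.95)
research = Task("List nearby pediatricians", frozenset({"web_search"}), 0.9)
print(route(booking), route(research))  # → human ai
```

Because the capability check is deterministic, a model that “believes” it can place a phone call never gets the chance to try.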

“We started looking around, like, was a phone call made? Who’s Nancy?” Joyce recalls. “The model was so assertive that it made us question that.” Of course, there was no Nancy and no appointment had been made. It was a complete fabrication. “Thank God this was in a prototype,” says Joyce. This incident highlights the immense risk of deploying AI in tasks that require real-world action and absolute truthfulness.
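A basic defense against the “Nancy” failure is to never trust the model’s own account of what it did. The following sketch assumes an orchestrator that records every tool call it actually executes (the `ToolLog` class and action names are hypothetical) and rejects any report that claims actions missing from that record.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ToolLog:
    """Actions the orchestrator actually executed, recorded outside the model."""
    executed: Set[str] = field(default_factory=set)

    def record(self, action: str) -> None:
        self.executed.add(action)


def unverified_claims(claimed: List[str], log: ToolLog) -> List[str]:
    """Return every action the model says it did that has no matching log entry."""
    return [action for action in claimed if action not in log.executed]


log = ToolLog()
log.record("lookup_office_hours")  # the only tool call that really ran

# The model's self-reported summary, as in the prototype incident.
report = ["lookup_office_hours", "phone_call", "book_appointment"]
suspect = unverified_claims(report, log)
if suspect:
    print("Reject report; unverified actions:", suspect)
# prints: Reject report; unverified actions: ['phone_call', 'book_appointment']
```

Matching on action names is the simplest form of this check; a stricter version would also compare arguments, such as the phone number dialed or the appointment time booked.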

Challenge 2: Taming the Unfocused Mind

Another major challenge is reining in the models’ vast, general knowledge to keep them focused on their specific function. These models are designed to be conversational generalists, ready to discuss almost anything. For a startup building a specialized tool, this can lead to interactions that quickly go off the rails.

Andy Moss, CEO of Mindtrip, an AI-powered “travel buddy,” found that while his team had trained the AI to handle expected travel-related questions very well, users inevitably asked things they hadn’t anticipated. When the conversations strayed from the core topic, the AI’s performance could become unpredictable and unhelpful. “We have to engineer around those,” he says.

This requires building complex guardrails and constantly refining the system to gently guide the user back to the app’s intended purpose without creating a frustrating or restrictive experience. It’s a delicate balancing act between leveraging the AI’s conversational abilities and preventing it from wandering into irrelevant or problematic territory.
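A topic guardrail of the sort Moss describes can be sketched as a gate in front of the model. This is an assumption-laden simplification: the keyword set `TRAVEL_TERMS` and the redirect message are hypothetical, and a production guardrail would more likely use a trained classifier or a model-based scope check.

```python
import re
from typing import Callable, Set

# Hypothetical scope vocabulary. A keyword set keeps the sketch
# self-contained; it is not how a robust guardrail would classify scope.
TRAVEL_TERMS: Set[str] = {
    "flight", "hotel", "trip", "itinerary", "restaurant",
    "museum", "visa", "beach", "train", "tour",
}
REDIRECT = (
    "I'm best at helping with travel. Want ideas for where to stay, "
    "what to see, or where to eat?"
)


def in_scope(message: str) -> bool:
    """True if the message mentions at least one travel term."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & TRAVEL_TERMS)


def guarded_reply(message: str, answer: Callable[[str], str]) -> str:
    """Pass in-scope messages to the model; gently redirect everything else."""
    if in_scope(message):
        return answer(message)
    return REDIRECT


def fake_model(message: str) -> str:
    """Stand-in for a real model call."""
    return f"(model answers: {message})"


print(guarded_reply("Find me a hotel near the beach in Lisbon", fake_model))
print(guarded_reply("Can you write my history essay?", fake_model))
```

The redirect offers concrete next steps instead of a flat refusal, which is one way to keep the experience from feeling restrictive while still steering the user back on topic.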

The Path Forward: Lessons from the Trenches

Despite the immense challenges, these founders are not giving up. Their experiences have forged a new understanding of what it takes to build successful AI products. They are moving beyond the initial hype and developing sophisticated strategies grounded in patience, persistence, and a deep respect for the technology’s limitations. Several key lessons have emerged from their journeys.

- Embrace a Multi-Model Ensemble Approach: Relying on a single AI model is a recipe for failure. The most effective systems use an ensemble of different models, each chosen for its specific strengths—one for language nuance, another for speed, and another for visual analysis. This creates a more robust, accurate, and reliable platform.

- Integrate Human Expertise and Curation: Purely autonomous AI is not yet a reality for complex, nuanced domains like fashion or personal assistance. The most successful AI startups are building “human-in-the-loop” systems where human experts curate data, guide the AI’s learning, and handle edge cases the technology cannot manage.

- Invest in Rigorous Real-World Testing: Simulations and internal tests are not enough. As Duckbill’s experience shows, it takes millions of real-world interactions to train an AI to be knowledgeable and reliable about practical tasks. This requires long beta periods and a commitment to iterative improvement based on actual user behavior.

- Build Guardrails to Manage AI’s Flaws: Founders must proactively engineer systems to mitigate the known weaknesses of LLMs, such as hallucination and lack of focus. This means creating validation layers that check the AI’s outputs for accuracy and developing conversational frameworks that keep the AI on task.

A Recalibrated Timeline for the AI Revolution

The stories of startups like Daydream, Duckbill, and Mindtrip serve as a powerful cautionary tale for anyone with overly optimistic timelines for the AI revolution. The initial dream of simply plugging into an API and launching a world-changing product has given way to a more sober reality. Building great applications with AI is an extreme challenge that requires deep domain expertise, exceptional engineering talent, and a tremendous amount of patience.

The productivity boom promised by AI is still on the horizon, but it is taking much longer to arrive than many expected. The path to glory is not a sprint; it is a marathon of meticulous engineering, constant iteration, and a clever fusion of human and machine intelligence. Based on the hard-won lessons from the front lines, the timeline has shifted. Perhaps 2026, or even 2027, will be the year that AI finally turns the corner, moving from a dazzling technology of promise to a practical engine of global productivity.
